Issue #1: Why is Machine Learning so hard?

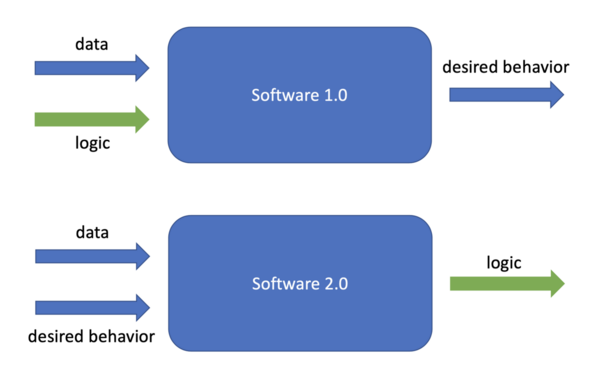

With the advent of machine learning, software development is undergoing a paradigm shift from one where we explicitly write algorithms, to one where we ask the computer to discover them from data. Andrej Karpathy, a senior director at Tesla, calls this shift “Software 2.0”. Despite the success that AI and machine learning have enjoyed so far, we are just beginning to understand what software development looks like in this new world.

Silent failures: Software 2.0 is plagued by “silent failures” – models failing to produce the correct output without any explicit warning. Often such failures can be embarrassing, or worse.

Black box AI: Model parameters are not readily understandable, which limits our ability to debug individual failure cases.

Drifts: Data used to train models represents a state at a snapshot in time. But the real world is always changing, which often means that historical data (used for model training) is no longer representative of the present. This “drift” can cause models to regress over time.
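To make "drift" a little more concrete, here is a minimal sketch of one common way to quantify it for a single numeric feature: the Population Stability Index (PSI), which compares the binned distribution of a training-time sample with a production sample. The function name, the bin count, and the `eps` floor are our own illustrative choices, not something prescribed by any of the sources in this issue.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,      # assumption: equal-width bins over the reference range
    eps: float = 1e-6,     # assumption: floor that keeps empty bins out of log(0)
) -> float:
    """PSI between a reference (training-time) sample and a current (production) sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    # Each bin contributes (current - reference) * ln(current / reference).
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


training_sample = [i / 100 for i in range(100)]
production_sample = [x + 0.5 for x in training_sample]  # simulated shift

psi_same = population_stability_index(training_sample, training_sample)
psi_shifted = population_stability_index(training_sample, production_sample)
```

A PSI of zero means the two binned distributions match, and larger values mean a larger shift. Any alerting threshold is a judgment call that depends on the feature and the model.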

What does it mean to develop software in this new paradigm? What new challenges (and opportunities) does this present? With this newsletter, our goal is to explore these questions and share our views on how to write better Software 2.0.

The Worst Technical Debt is of the "Hidden" variety

This is a seminal 2015 paper from Google about the real-world challenges of developing and productionizing Machine Learning models at scale. It made famous the image above, which shows how small a fraction of the overall ML system code is the actual ML code. The paper argues that ML systems can easily incur technical debt, because they carry all of the maintenance problems of traditional code plus an additional set of ML-specific issues. And who can better speak to these issues than Google?

Three things that resonated most closely with us:

@nihit: Configuration debt: Real-world ML systems often have a wide range of configuration options, covering training data selection, feature selection, model architecture, hyperparameters, and evaluation. The paper points out that the “number of lines of configuration can far exceed the number of lines of the traditional code” in a mature system, and we have observed precisely this in our prior experience building such systems.

@rishabh: Data dependencies cost more than code dependencies: As ML systems grow, it becomes the norm for the output of one ML model to serve as the input of another. A lack of tooling that can capture such dependencies, coupled with the fact that small changes to the input of an ML model can sometimes have outsized, adverse effects on its output, is a recipe for disaster. While we have seen some benefits from data versioning (of training/test data, but also of computed features used by downstream ML models), this remains a tough problem to solve.

@nihit: Monitoring and testing: Unlike with traditional software systems, it is hard to reason about the correctness of ML systems because of factors like population drift and training data bias. Continuous monitoring of deployed models is essential to avoid silent failures. We discuss this in more detail later in this newsletter.
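As a small illustration of the data versioning idea mentioned above, one lightweight approach is to record a content fingerprint for each dataset (or computed feature table) alongside the model that consumed it, so that a silent upstream change becomes visible. This is a sketch under our own assumptions: `dataset_fingerprint` is a hypothetical helper, and it assumes rows are JSON-serializable dictionaries whose order matters.

```python
import hashlib
import json
from typing import Any, Dict, Iterable


def dataset_fingerprint(rows: Iterable[Dict[str, Any]]) -> str:
    """Return a SHA-256 hex digest that changes whenever any row's content changes."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the digest independent of dictionary key order.
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")  # row separator, so row boundaries affect the digest
    return digest.hexdigest()


features_v1 = [{"user_id": 1, "score": 0.25}, {"user_id": 2, "score": 0.75}]
features_reordered_keys = [{"score": 0.25, "user_id": 1}, {"score": 0.75, "user_id": 2}]
features_v2 = [{"user_id": 1, "score": 0.25}, {"user_id": 2, "score": 0.80}]

fp_v1 = dataset_fingerprint(features_v1)
fp_reordered = dataset_fingerprint(features_reordered_keys)
fp_v2 = dataset_fingerprint(features_v2)
```

A downstream model can store the fingerprint of the features it was trained on and compare it against what it is served, which catches the "upstream model changed quietly" case. Dedicated data versioning tools cover far more than this.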

MLOps from Google

What happened

Google is the first major cloud provider, although not the first major company (see news from Nvidia recently), to target MLOps as a future growth direction. They’re releasing new services for AI Platform Pipelines, Continuous Evaluation, Continuous Monitoring, Metadata Management and Feature Stores, which all address known problems in the Software 2.0 stack.

What this means for you

If you’re training and deploying Machine Learning models using GCP’s AI platform, keeping an eye on these new services is a good idea. Depending on which service is most useful to you (Continuous Evaluation, if you want to be assured of high-performing models in production; Feature Store, if you have multiple data science teams working with similar datasets), these could save valuable time and resources as your ML and data science teams scale.

What to Expect in the Future

We expect that other public cloud providers will get in on this action soon. While there have been attempts to solve MLOps problems using existing cloud services (see AWS blog and a post on Medium), having dedicated services for each of them is a natural evolution. This will lead to tighter and more integrated ML services from these cloud providers. As the public cloud providers (AWS, Azure, GCP) attempt to rise higher and higher in the stack, going from platform services to adding almost product-like experiences, we expect to see a lot of competition from smaller startups that will be much more nimble and will own better experiences with end customers. It’s an exciting time to be working in this space!

Testing Software 2.0

What we learned

Unlike traditional software, where standardized best practices and frameworks for testing are well established (test coverage, unit tests, integration tests, etc.), Software 2.0 is a new world. We haven’t yet established best practices and metrics for model testing. This post establishes an important difference between model evaluation (metrics and plots which summarize performance on a test dataset) and model testing (explicit checks for behaviors that we expect our model to follow). It introduces a framework for thinking about model testing: pre-train tests (tests that can be run without needing trained parameters) and post-train tests (tests aimed at sanity checking the learned model parameters).
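To give a flavor of what a pre-train test might look like, here is a minimal sketch of two checks that need only the data, not a trained model: one for examples that appear in both the training and test splits, and one for labels outside the expected set. The helper names and the toy data are our own illustration, not code from the post.

```python
from typing import Hashable, Iterable, List, Sequence, Set


def find_leaked_examples(
    train_ids: Iterable[Hashable], test_ids: Iterable[Hashable]
) -> Set[Hashable]:
    """Return example IDs that appear in both the training and test splits."""
    return set(train_ids) & set(test_ids)


def find_invalid_labels(labels: Sequence[str], allowed: Set[str]) -> List[int]:
    """Return the positions of labels that are not in the allowed label set."""
    return [i for i, label in enumerate(labels) if label not in allowed]


# Hypothetical toy data: "doc-2" leaks across splits and one label is malformed.
leaked = find_leaked_examples(["doc-1", "doc-2"], ["doc-2", "doc-3"])
invalid = find_invalid_labels(["pos", "neg", "postive"], {"pos", "neg"})
```

In a real project these would typically be assertions in a test suite that runs before any training job is launched, failing fast if `leaked` or `invalid` is non-empty.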

Our experiences

@Nihit: Model testing (as opposed to model evaluation, which is common among ML practitioners) is a new concept. At least in my past experience, writing explicit tests and integrating them into the ML development lifecycle has been uncommon. As the field matures, and questions around data privacy, bias in training data and explainability become central, I expect more rigorous testing to become part of developing ML models.

@Rishabh: This framework of testing ML models is very interesting. In the past, teams I’ve worked on have written some post-train tests, but in a fairly ad-hoc fashion. For example, we would have some directionality tests for a trained Sentiment Analysis model (the positive sentiment score for “This dress is beautiful.” should be strictly greater than the score for “This dress is fine.”), but all tests were written by individual team members. Having a consistent, standardized framework for such tests will be a step in the right direction (maybe there will even be a Wikipedia page for Machine Learning Testing similar to Software testing).
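The directionality test described above can be sketched in a few lines. Since we cannot share a real model here, `toy_sentiment_score` is a hypothetical keyword-weight stand-in for a trained sentiment model; the part that carries over to a real setup is `check_directional`, which only needs a function from text to score.

```python
from typing import Callable

# Hypothetical weights standing in for a trained model's learned behavior.
POSITIVE_WEIGHTS = {"beautiful": 0.9, "great": 0.8, "fine": 0.3}


def toy_sentiment_score(text: str) -> float:
    """Toy positive-sentiment score: sum of keyword weights found in the text."""
    words = text.lower().replace(".", " ").split()
    return sum(POSITIVE_WEIGHTS.get(word, 0.0) for word in words)


def check_directional(
    score_fn: Callable[[str], float], stronger: str, weaker: str
) -> bool:
    """Pass only if the 'stronger' text scores strictly higher than the 'weaker' one."""
    return score_fn(stronger) > score_fn(weaker)


passed = check_directional(
    toy_sentiment_score,
    stronger="This dress is beautiful.",
    weaker="This dress is fine.",
)
```

Collecting pairs like this in one shared test file, rather than in individual notebooks, is a small step toward the standardized framework we are hoping for.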

The ML Ecosystem Multiverse

In this fascinating Twitter thread, Hilary Mason started a conversation about options for hosting ML models (we recommend actually clicking on the tweet and going through the discussion). Not surprisingly, there were at least 15 different solutions proposed, ranging from AWS and GCP’s ML offerings, to startups focused on ML deployment like Algorithmia and Clever Grid, all the way to rolling your own solution in the cloud (on AWS, Azure, GCP, Linode or DigitalOcean).

What we learned

The cloud ecosystem around productionizing ML models has many different players and the dust is yet to settle. The apparent lack of differentiation can make it hard for ML practitioners and startups to objectively figure out which solution to go with. Some factors you should consider as you figure out the best solution for your use case:

Minimizing development time (data annotation, model training & validation, productionizing & model serving support)

Ease of scaling (when traffic increases, how easy is it to scale)

Support for varied ML frameworks (Scikit-Learn, PyTorch, TensorFlow, etc.)

Monitoring and alerting support (when things go wrong, as they eventually do, how quickly can you fix them)

Community of users (infrastructure is hard – you want other people to help out if you run into issues)

What to expect in the future: We believe that over time, the various options in the marketplace will incorporate standardized best practices of ML deployment and achieve feature convergence. What might differentiate them is their friendliness to developers and their community of users.

Thanks!

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is our first issue and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com.