Issue #13: Feature Stores. Information Leakage. AWS Well-Architected Framework. Covid & ML.

Welcome to the 13th issue of the ML Ops newsletter. We hope this issue finds you well — as vaccinations continue to ramp up across the world, we hope that you receive one soon (if you haven’t already). 💉

In this issue, we cover a wonderful article on feature stores, explore information leaks in embedding models, continue our coverage of the AWS Well-Architected Framework whitepaper, discuss the impact of Covid on ML models at banks, and more.

As always, thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

Eugene Yan | Feature Stores - A Hierarchy of Needs

This is a really insightful read from Eugene Yan. We covered Feature Stores last year, and since then, the chatter around Feature Stores has only grown. Back then, based on this blog post from Tecton, we mentioned:

A feature store is an ML-specific data system that:

Runs data pipelines that transform raw data into feature values

Stores and manages the feature data itself, and

Serves feature data consistently for training and inference purposes

Eugene breaks down feature stores by viewing them through a “hierarchy of needs” lens. This means that feature stores fulfil several needs and that “some needs are more pressing than others” and thus have to be addressed first. Let’s look into these:

Access needs: At the most basic level, a feature store needs to provide users access to feature data and other information about features. This makes it easier for data scientists to discover work done in the past, understand how/when to use features and experiment easily.

Serving needs: Next, the models need to be served in production and features need to be made available to these models with high throughput and low latency. This might involve the movement of feature data from analytical data stores (Snowflake) to faster, “more operational” data stores (Redis). There might be data transformation needs addressed in this layer as well.

Integrity needs: The feature data being served to models in production needs to be fresh, accurate and available at all times. More advanced capabilities include point-in-time correctness (providing a snapshot of how the data looked at an earlier time) and visibility into train-serve skew.

Convenience needs: Feature stores should be simple to work with for data scientists and software engineers. It should be intuitive to use features during development and serve them in production. Features should be easy to discover, and when their distributions change, users should know about it.

Autopilot needs: Tedious feature engineering work, such as backfilling data, monitoring and alerting, should be simplified.
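To make the “point-in-time correctness” idea from the integrity needs concrete, here is a minimal sketch. It is not taken from Eugene's article or from any particular feature store: the `feature_log` structure and the `feature_as_of` helper are hypothetical names we made up for illustration. The idea is that when building a training example for a label observed at some time, we only look up the feature value that was known at or before that time, so future information cannot leak in.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical feature log: per entity, (timestamp, value) pairs sorted by time.
# The values are synthetic and only serve the illustration.
feature_log = {
    "user_1": [
        (datetime(2021, 1, 1), 3),
        (datetime(2021, 2, 1), 5),
        (datetime(2021, 3, 1), 8),
    ],
}


def feature_as_of(entity_id, as_of):
    """Return the latest feature value recorded at or before `as_of`, or None."""
    history = feature_log.get(entity_id, [])
    timestamps = [ts for ts, _ in history]
    # Index of the first record strictly after `as_of`.
    idx = bisect_right(timestamps, as_of)
    if idx == 0:
        return None  # no value was known yet at that time
    return history[idx - 1][1]


# A label observed on 15 Feb should only see the value written on 1 Feb.
print(feature_as_of("user_1", datetime(2021, 2, 15)))
```

Real feature stores do this as a join across many entities and features at once; the sketch only shows the lookup rule for a single entity.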

Discussion

We encourage you to read the original article if you want to dive deeper - it is superbly written and provides enlightening examples from major feature store deployments (Gojek, Doordash, Airbnb, Monzo, Uber, Netflix and others). However, here are a few things we find particularly interesting.

Feature Sharing

Describing the feature store at Gojek, Eugene says:

Data engineers and scientists create features and contribute them to the feature store. Then, ML practitioners consume these ready-made features, saving time by not having to create their own features.

But then he goes on:

Not sure how I feel about this productivity gain, given my preference for data scientists to be more end-to-end.

This resonates with us. There is a balance between data scientists fully relying on available features as “raw data” vs experimenting, transforming data based on their intuition and really owning the models they build. We wonder if there are factors such as company size or the number of unique models owned by a team or the cost of recreating features that play a part in how much “feature sharing” is prevalent.

Development vs Production data

A common pain point for data scientists working in dev environments is that they don’t have access to production data. This leads to issues such as train-serve skews going unnoticed, or worse, data leaks in features. Having a feature store that can bridge these gaps is a huge win.

What is Day 1 vs Day 100 for Feature Stores?

There are open questions about when teams should start thinking about “feature stores”. There are aspects of a feature store (access to features, serving them in models) that are necessary when deploying a model, but should a team building their first model really think about buying/building a feature store?

Companies with relatively well-developed ML teams have likely thought about several of these needs already and might have their “unique” infrastructure that supports them. Is it even feasible for them to integrate an external solution, and is it worth it? If so, what is the path for them?

We would love to hear from you if you have thoughts on this!

Paper | Information Leakage in Embedding Models

Background

Embedding models learn mappings from the space of raw input data to an n-dimensional space (where n is typically much lower than the dimensionality of the input), while preserving important semantic information about the inputs. Pre-training embedding models (often called “trunk models”) on large amounts of unlabeled data and then fine-tuning them for downstream tasks has become common practice for achieving state-of-the-art results on a variety of machine learning tasks.

The problem

This paper demonstrates that embedding models can often learn vector representations that leak sensitive information about the raw data. It introduces three classes of adversarial attacks to study the kind of information leaked by embedding models:

Inversion Attack: Think of an embedding model as a functional mapping F: input data → n-dim output space. Given only an embedding vector, an attacker can invert it to partially recover the input data. Two types of attacks are studied under this scenario: white-box attacks (where the embedding model’s parameters are known) and black-box attacks (where we only have the input raw data and output embedding representations). The paper shows that popular sentence embedding models like BERT allow recovery of between 50% and 70% of the input words!

Attribute Inference Attack: Oftentimes, for privacy reasons, we may not want to reveal/log/store the raw input to a model, but only an n-dimensional embedding representation. However, the paper shows that the embeddings alone can reveal sensitive attributes (metadata) of the raw input. For example:

attributes such as authorship of text can be easily extracted by training an inference model on just a handful (10-50 examples per author) of labeled embedding vectors.

Membership Inference Attack: In this kind of attack, the goal is to figure out whether a given data point was part of the training set of an embedding model. The paper shows that, especially for infrequent/outlier training data inputs, it can be very easy to figure this out.
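To give a feel for why a handful of labeled embeddings can be enough for attribute inference, here is a toy sketch. It is our own illustration, not the paper's method: we swap in a simple nearest-centroid classifier with cosine similarity, the embedding vectors are synthetic, and the function names (`fit_attribute_classifier`, `infer_attribute`) are hypothetical.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def fit_attribute_classifier(labeled):
    """labeled: dict mapping an attribute value to a few embedding vectors."""
    return {label: centroid(vecs) for label, vecs in labeled.items()}


def infer_attribute(centroids, embedding):
    """Guess the attribute whose centroid is most similar to the embedding."""
    return max(centroids, key=lambda label: cosine(centroids[label], embedding))


# Synthetic 3-dimensional "embeddings", two labeled examples per author.
labeled = {
    "author_a": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "author_b": [[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]],
}
centroids = fit_attribute_classifier(labeled)

# The attacker never sees the raw text, only this embedding.
print(infer_attribute(centroids, [0.85, 0.15, 0.05]))
```

The point of the sketch is only that the attacker needs nothing beyond the stored embeddings and a few labels; the paper trains a proper inference model on real embeddings.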

Proposed Mitigation

The paper proposes and evaluates adversarial training techniques to minimize information leakage in embedding models. In this training scheme, embeddings are trained jointly with an adversarial loss: a simulated adversary A, whose goal is to infer any sensitive information, is trained jointly with an embedding model M, while M is trained to maximize the adversary’s loss and minimize the learning objective. If you’re interested in a deep dive into the technical details, we highly recommend reading the paper.
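The two opposing objectives described above can be written down in a few lines. This is a sketch under our own assumptions rather than the paper's exact formulation: in particular, the weighting factor `lam` and the function names are hypothetical, and the losses are passed in as plain numbers instead of being computed by real models.

```python
def adversary_objective(adversary_loss):
    """The simulated adversary A simply minimizes its own inference loss."""
    return adversary_loss


def embedding_model_objective(task_loss, adversary_loss, lam=1.0):
    """Quantity the embedding model M minimizes.

    Subtracting the adversary's loss means M is rewarded when the adversary
    does badly, while the task loss keeps the embeddings useful.
    `lam` (an assumed knob) trades off utility against leakage.
    """
    return task_loss - lam * adversary_loss


# With the same task loss, M prefers the embedding that confuses the adversary more.
leaky = embedding_model_objective(task_loss=1.0, adversary_loss=0.5)
private = embedding_model_objective(task_loss=1.0, adversary_loss=2.0)
print(private < leaky)
```

In an actual training loop, the two objectives would be optimized in alternation (or jointly with a gradient reversal trick), with A updated to reduce its loss and M updated to reduce the combined quantity.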

Embedding models are gaining widespread use in real-world AI applications, and for good reason: to the extent that we can train models to understand content and context about the real world and map it to a low-dimensional representation, the downstream task-specific modeling becomes simpler and more efficient. However, papers like the one above highlight potential dangers that we think will be important to address and mitigate before these models can be adopted in privacy-sensitive domains like healthcare.

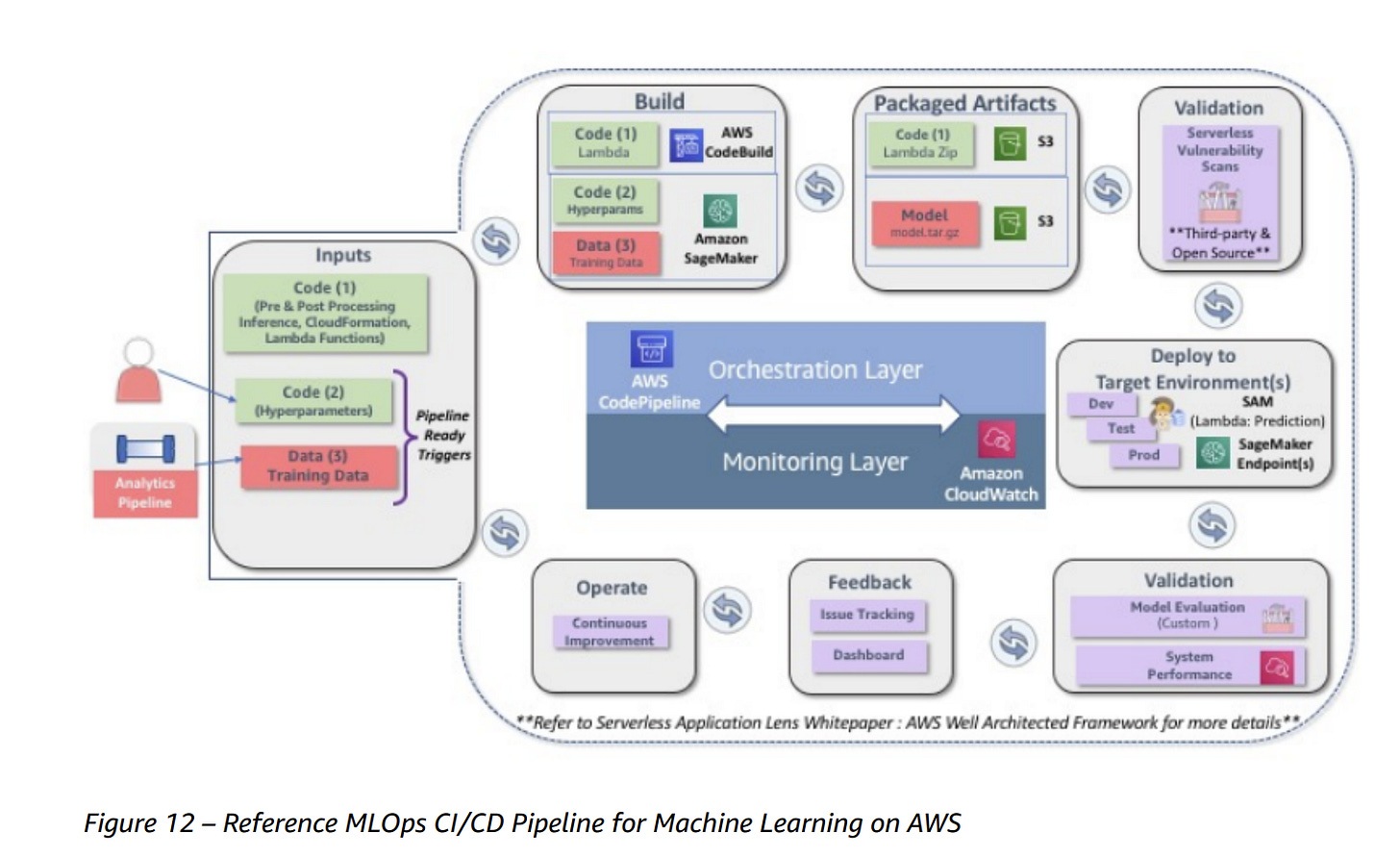

AWS Whitepaper | AWS Well-Architected Framework - Machine Learning Lens [Part 2]

In our previous issue, we introduced the Machine Learning Lens on AWS’s Well-Architected Framework. As they say:

In the Machine Learning Lens, we focus on how to design, deploy, and architect your machine learning workloads in the AWS Cloud.

In this issue, we will go over the five pillars of the Well-Architected Framework for a machine learning solution. These five pillars are:

Operational Excellence

Security

Reliability

Performance Efficiency

Cost Optimization

The original document goes into significant detail, but here we will cover the considerations that were most interesting to us.

Operational Excellence

Establish cross-functional teams (since different personas are involved)

Identify the end-to-end architecture and operational model early (this ensures that business and technical objectives are aligned)

Establish a model retraining strategy (data drift is a given)

Document findings throughout the process and version all inputs and artifacts (reproducibility!)

Security

Restrict access to ML systems and ensure data governance (inference, model and data access in all environments available only to the right users)

Enforce data lineage (makes it easier to trace source data in case of errors)

Enforce regulatory compliance (privacy concerns and regulations such as HIPAA and GDPR need to be respected)

Reliability

Manage changes to model inputs through automation (since data and code can both change, automation ensures reproducibility)

Train once and deploy across environments (models shouldn’t be retrained when moving them from dev to prod, since minor data changes/randomness can influence behaviour)

Ensure that inference services can scale easily

Performance Efficiency

Optimize compute for your ML workload (training and inference workloads often require different hardware)

Define latency and network bandwidth performance requirements for your models (if real-time inference is needed in applications, latency requirements are critical)

Continuously monitor and measure system performance (collection of system, service and business metrics for ML workloads gives us directions for improvement)

Cost Optimization

Define overall ROI and opportunity cost for ML projects ahead of time (is a team of two data scientists worth it, or would predictions from an existing API suffice?)

Experiment with small datasets (fail fast / de-risk projects early)

Account for inference architecture based on consumption pattern (batch predictions vs real-time predictions need different hardware)

Final Thoughts

While this is a lot to take in, it can be valuable to look at end-to-end projects through the lens of these five pillars. We will continue to evaluate different frameworks for ML projects, but would love to hear from you on this as well!

How has COVID-19 affected the performance of ML models used by UK banks?

The full report is available here, and we recommend clicking through to see the insightful charts.

High-level overview

We asked banks how Covid-19 (Covid) had affected their use of machine learning and data science. Although these technologies will continue to have many benefits, over a third of banks reported a negative impact on model performance as a result of the pandemic.

Learnings

While half of the banks reported an increase in the perceived importance of machine learning (ML) and data science (DS), only a third reported an actual increase in the number of planned or existing projects.

About 35% of banks reported a negative impact on ML model performance as a result of Covid. The report notes that this is likely because of “major movements in macroeconomic variables, such as rising unemployment and mortgage forbearance”, which required ML models to be “recalibrated”.

Smaller banks reported that Covid had a positive impact on their use of third-party solutions for different parts of their ML stack. While we don’t quite know the “magnitude” of the impact, it reflects both a willingness to invest in ML applications and an openness to using third-party solutions.

Our Take

While the number of institutions surveyed isn’t very high (17 UK banks, 9 banks based outside the UK and 6 insurers, although representing 88% of all UK banks’ assets), we believe the information to be directionally correct. There are more and more use cases that fit ML applications well, but because the financial sector is more heavily regulated, the safe deployment of models will be critical.

New tool alert: Sagify

We recently came across this neat MLOps tool that we wanted to share. Sagify is a command-line utility to train and deploy ML models on AWS SageMaker. Think of it as a higher-level abstraction on top of AWS SageMaker that hides away many of the low-level details. If you’re interested in a quick demo of the tool, check out this video or this intro article.

Fun: How to Break ML Models - Exhibit 9456

OpenAI recently released a fantastic post about Multimodal Neurons - parts of the neural network that respond to a “concept” in the input content in the same way, across modalities like text, image and video. We’ll cover this article in depth in the next edition of our newsletter, but here we wanted to share this funny (and scary) anecdote highlighting just how easy it is to mount adversarial attacks. Simply because the model can read a piece of text in the input saying “iPod”, it thinks it is actually looking at an iPod!

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is only Day 1 for MLOps and this newsletter and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com