Issue #26: Concept Drift. Anomaly Detection with Self-Supervision. NLP in Legal Applications. Models Per Customer?

Welcome to the 26th issue of the MLOps newsletter.

In this issue, we deep-dive into inferring concept drift, share a paper on outlier detection using self-supervised learning, discuss NLP applications to summarize legal documents, cover a recent article about customization in B2B ML applications, and more.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

Fast Forward Labs | Inferring Concept Drift Without Labeled Data

This is a wonderful introduction to the problem of concept drift (and how to infer it) from the team at Fast Forward Labs. We have covered concept drift before, but we recommend going through this post for a detailed overview, replete with experiments and nice visualizations. In the meantime, here’s a summary.

Motivation

The authors put it best:

After iterations of development and testing, deploying a well-fit machine learning model often feels like the final hurdle for an eager data science team. In practice however, a trained model is never final, and this milestone marks just the beginning of the perpetual maintenance race that is production machine learning. This is because most machine learning models are static, but the world we live in is dynamic.

What is Concept Drift?

This phenomenon in which the statistical properties of a target domain change over time is considered concept drift.

This has two parts, feature drift and “real concept drift”.

Feature drift refers to changes in the input variables (i.e. changes in P(X)). Such changes to input data over time may or may not affect the actual performance of the learned ML model, as seen in the image below.

Real concept drift refers to changes in the relationship between the inputs and the target variable (i.e. changes in P(y|X)). This type of drift typically causes a drop in model performance. “Real concept drift” can happen at the same time as feature drift, as seen in the image below.
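A toy simulation can make the distinction concrete. The sketch below is illustrative only and is not taken from the Fast Forward Labs post: the one-dimensional data, the threshold "model", and all names are assumptions. It shows one case where a shift in P(X) leaves accuracy untouched, while a shift in P(y|X) hurts it.

```python
import numpy as np

rng = np.random.default_rng(0)

def label(x, boundary):
    """Ground-truth concept: y = 1 when x exceeds the boundary (this defines P(y|X))."""
    return (x > boundary).astype(int)

def accuracy(x, y, learned_boundary=0.0):
    """Accuracy of a fixed, already-deployed model that predicts 1 when x > learned_boundary."""
    return float(np.mean((x > learned_boundary).astype(int) == y))

# Reference period: X ~ N(0, 1) and the true boundary sits at 0, matching the model.
x_ref = rng.normal(0.0, 1.0, 10_000)
acc_ref = accuracy(x_ref, label(x_ref, 0.0))

# Feature drift only: P(X) moves to N(1.5, 1), but P(y|X) is unchanged.
x_feat = rng.normal(1.5, 1.0, 10_000)
acc_feature_drift = accuracy(x_feat, label(x_feat, 0.0))

# Real concept drift: P(X) is unchanged, but the true boundary moves to 1.0.
x_real = rng.normal(0.0, 1.0, 10_000)
acc_real_drift = accuracy(x_real, label(x_real, 1.0))

print(acc_ref, acc_feature_drift, acc_real_drift)
```

In this toy setup the model's boundary stays correct under feature drift, so accuracy is unaffected. With a less perfect model, feature drift could also move data into regions where the model is weaker.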

Supervised Drift Detection

Often, teams will:

monitor a task-dependent performance metric like accuracy, F-score, or precision/recall. If the metric of interest deviates from an acceptable level (as determined during training evaluation on the reference window), a drift is signaled.

At this point, teams will retrain the model with fresh data, and performance levels will improve. However, there is a flawed assumption here: that true labels are instantaneously available after inference. Annotations can be expensive to obtain, sometimes requiring hired domain expertise. Aside from the cost, labels can sometimes take a long time to become available -- for example, it can take days to months for fraud to be reported or defaults on a loan to occur.

In such cases, detection of drift without any actual labels can be very helpful for ML teams.
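The supervised check described in this section could be sketched as follows. This is a minimal illustration that uses accuracy as the metric. The function name, the `tolerance` parameter, and its default value are assumptions and do not come from the post.

```python
from statistics import mean

def detect_supervised_drift(y_true, y_pred, reference_accuracy, tolerance=0.05):
    """Signal drift when accuracy on the current window falls more than
    `tolerance` below the level measured on the reference window."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    window_accuracy = mean(1.0 if t == p else 0.0 for t, p in zip(y_true, y_pred))
    drift_signaled = window_accuracy < reference_accuracy - tolerance
    return drift_signaled, window_accuracy

# Usage: labels for the latest window have arrived and are compared to predictions.
drifted, current_accuracy = detect_supervised_drift(
    y_true=[1, 0, 1, 1], y_pred=[1, 0, 0, 0], reference_accuracy=0.9
)
```

The check only works once `y_true` is available, which is exactly the limitation discussed above.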

Unsupervised Drift Detection

Without ground truth labels, any drift detection will be prone to some errors: both false positives (signaling drift when there is no impact on model performance) and false negatives (missing crucial concept drift problems). However, some techniques might prove helpful:

Statistical test for change in feature space: For each input feature, compare a recent time window with a reference time window (or with the training data) to check whether they come from the same distribution, using a test such as the Kolmogorov–Smirnov (KS) test.

Statistical test for change in response variable: Similar to input features, we can run the KS test on the predicted probability values on fresh data and compare them with predicted probabilities from the past.

Statistical test for change in margin density of response variable: In the previous techniques, we were looking at changes in the entire probability distribution. However, changes in the probability values might not matter too much when the model is very confident (p < 0.1 or p > 0.9). In those cases, we might look for changes within a margin of probability values close to the decision threshold.
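The techniques above can be sketched with SciPy's two-sample KS test and a simple margin-density calculation. This is a hedged illustration rather than the post's experimental code. The synthetic data, the function names, the significance level `alpha`, and the margin width are all assumptions.

```python
import numpy as np
from scipy.stats import ks_2samp

def ks_drift(reference, current, alpha=0.05):
    """Two-sample KS test between a reference window and a current window.
    Works for a single input feature or for predicted probabilities."""
    result = ks_2samp(reference, current)
    return bool(result.pvalue < alpha), float(result.pvalue)

def margin_density(probabilities, threshold=0.5, margin=0.1):
    """Fraction of predicted probabilities within `margin` of the decision threshold."""
    probabilities = np.asarray(probabilities)
    return float(np.mean(np.abs(probabilities - threshold) <= margin))

rng = np.random.default_rng(0)

# Feature-space check: the current window has a shifted mean.
reference_feature = rng.normal(0.0, 1.0, 2_000)
shifted_feature = rng.normal(0.5, 1.0, 2_000)
feature_drifted, p_value = ks_drift(reference_feature, shifted_feature)

# Margin-density check: the current predictions cluster nearer the threshold.
reference_probs = rng.beta(0.5, 0.5, 2_000)  # mostly confident predictions
current_probs = rng.beta(2.0, 2.0, 2_000)    # more mass close to 0.5
reference_md = margin_density(reference_probs)
current_md = margin_density(current_probs)
```

A rise in margin density between windows suggests that more predictions are landing near the decision boundary. How large a rise should count as drift is a judgment call that this sketch leaves open.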

For more details (and their experimental setup on a toy problem), we recommend reading the entire post here. This is a really important topic that is going to gather more mindshare as more production ML systems are built.

Google AI: Discovering Anomalous Data with Self-Supervised Learning

Anomaly detection (or outlier detection) is a common application of Machine Learning with use cases across multiple domains: detecting fraudulent financial transactions, manufacturing defects, tumors in X-rays, and so on. Researchers at Google recently proposed a novel approach (subsequently published at ICLR ’21) for anomaly detection based on self-supervised representation learning.

The Approach

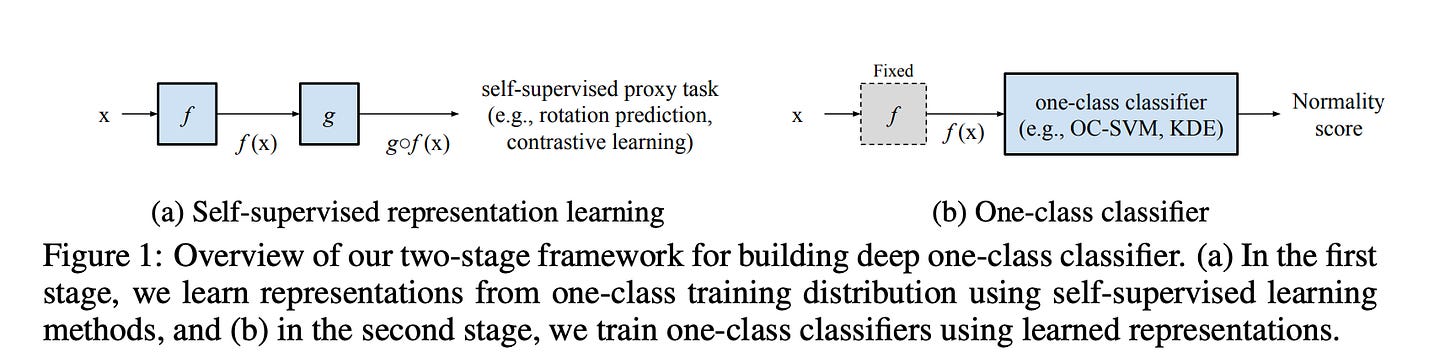

Google’s approach is based on a two-stage framework for deep one-class classification:

In the first stage, the model learns self-supervised representations from one-class data.

In the second stage, a one-class classifier, such as OC-SVM or kernel density estimator, is trained using the learned representations from the first stage.

In addition to the above framework, their work also includes a distribution-augmented contrastive learning algorithm to learn self-supervised representations that are better suited to downstream outlier detection tasks.
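The second stage of the framework could look roughly like the sketch below, which uses scikit-learn. This is not the authors' code. The embeddings are synthetic Gaussian vectors standing in for the output of a self-supervised encoder (stage one is not implemented here), and the hyperparameters are arbitrary assumptions.

```python
import numpy as np
from sklearn.neighbors import KernelDensity
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)

# Stand-in for stage one: in the real framework these would be representations
# produced by a self-supervised encoder trained on one-class data.
train_embeddings = rng.normal(0.0, 1.0, size=(500, 16))  # inliers only
test_inliers = rng.normal(0.0, 1.0, size=(100, 16))
test_outliers = rng.normal(4.0, 1.0, size=(100, 16))

# Stage two, option A: a one-class SVM fit on inlier embeddings only.
ocsvm = OneClassSVM(kernel="rbf", gamma="scale", nu=0.1).fit(train_embeddings)
svm_inlier_scores = ocsvm.decision_function(test_inliers)
svm_outlier_scores = ocsvm.decision_function(test_outliers)

# Stage two, option B: a kernel density estimator; the score is the log-density.
kde = KernelDensity(kernel="gaussian", bandwidth=1.0).fit(train_embeddings)
kde_inlier_scores = kde.score_samples(test_inliers)
kde_outlier_scores = kde.score_samples(test_outliers)
```

In both options a lower score means "more anomalous". The quality of the result depends mostly on how well the stage-one representations separate inliers from outliers.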

The Results

The researchers experimented with two representative self-supervised representation learning algorithms, rotation prediction and contrastive learning, and evaluated one-class classification performance on commonly used computer vision datasets, including CIFAR-10, CIFAR-100, Fashion MNIST, and Cat vs Dog. Images from one class are given as inliers and those from the remaining classes are given as outliers.

For a more in-depth technical overview, refer to their ICLR ’21 paper Learning and Evaluating Representations for Deep One-class Classification. Additionally, the accompanying code for this paper can be found on GitHub.

Stanford HAI | Is Natural Language Processing ready to take on legal hearings?

We’ve covered Stanford HAI’s work previously in our newsletter. In a recent article, Stanford researchers Catalin Voss and Jenny Hong discussed the opportunity and challenges associated with applying natural language understanding techniques in reviewing legal hearings.

Problem

A parole hearing is a hearing to determine whether an inmate should be released from prison to parole supervision to serve the remainder of the sentence. During this hearing, a parole commissioner and a deputy review all the relevant history and life circumstances of the candidate, and then decide whether or not to grant parole. As outlined in the article, each such hearing generates a ~150-page transcript of the entire conversation so it can be reviewed if needed later on. In California alone, there are on average 5000-6000 parole hearings each year.

The governor’s office and parole review unit are tasked with checking parole decisions, but they lack the resources to read every transcript, so as a matter of practicality, they generally only read transcripts for parole approvals. If parole is denied, unless an appellate attorney or another influential stakeholder pushes for a review, the transcript is usually just archived.

Recon Approach

While manually reading the transcripts in their entirety is not feasible, the authors propose using NLP to “read” and summarize these transcripts (flag important factors and data points for each case). This can help scale parole review, and analyzing the outcomes of parole reviews at scale can help reveal whether the process is fair and what kinds of biases exist. Further, it can help flag individual cases that seem like outliers. This proposal is discussed in more detail in a forthcoming paper available here.

NLP Challenges

While massive language models like BERT and GPT-3 have shown performance improvements across a large variety of tasks, the article outlines some existing challenges that would need to be solved for applying NLP to this problem:

Ability to maintain “state” over long texts. Legal transcripts can often run to tens of thousands of words.

Synthesizing information across various pieces of text to answer concrete questions.

Multi-step (multi-hop) reasoning and query planning to answer questions.

Our view

It is far more common to read about the potential dangers and biases of applying machine learning and AI to new real-world use cases. It is refreshing, in our view, to read about a proposal that aims to reduce bias and improve the fairness of the parole process, which currently has limited transparency and oversight. There was also a conversation about this on Twitter.

Towards Data Science | Should I Train a Model for Each Customer or Use One Model for All of My Customers?

In this article, Yonatan Hadar discusses an interesting challenge faced by many ML teams building B2B software: what are the pros and cons of training a single model across all customers vs training a model per customer (and all strategies that lie in between)?

Here, we dissect the lessons from the post and add some of our own thoughts.

What’s the goal?

At a high level, the goal for any ML team is to create business value through models that perform well (i.e. generalize well) when deployed to production.

Some good properties of ML systems:

Models should work well for all customers

Engineering complexity should be minimized -- training, optimizing, deployment, and monitoring should be as easy as possible

Onboarding new customers should be easy (no “cold start”)

Legal and security constraints should be met

One Model Per Customer

This often performs the best for each customer, since the data distribution between train and test is closest when dealing with just one customer. In certain domains, this might be the only option available to teams, especially when mixing of data across customers isn’t allowed.

However, there is less data per customer, and teams have to be more careful when adding a new customer (is there enough data available, should simple heuristics be used while data is collected, etc). The engineering complexity might also be much higher since many more models need to be trained (and complexity only rises if model types and hyperparameter tuning strategies can be changed across customers).
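One way to handle the new-customer question is to route requests to a heuristic until enough data has been collected. The sketch below is only an illustration of that idea. The `heuristic_model` rule, the `amount` feature, and the `min_examples` cutoff are hypothetical and do not come from the article.

```python
from typing import Callable, Dict

Model = Callable[[dict], float]

def heuristic_model(features: dict) -> float:
    """Hypothetical rule-based fallback used while a customer's data accumulates."""
    return 1.0 if features.get("amount", 0.0) > 1_000 else 0.0

def select_model(
    customer_id: str,
    customer_models: Dict[str, Model],
    example_counts: Dict[str, int],
    min_examples: int = 500,
) -> Model:
    """Use the customer's own model only if it exists and was trained on enough data."""
    has_enough_data = example_counts.get(customer_id, 0) >= min_examples
    if customer_id in customer_models and has_enough_data:
        return customer_models[customer_id]
    return heuristic_model

# Usage: "acme" has a trained model, "newco" was onboarded recently.
models = {"acme": lambda features: 0.42}
counts = {"acme": 1_000, "newco": 20}
acme_score = select_model("acme", models, counts)({"amount": 50})
newco_score = select_model("newco", models, counts)({"amount": 5_000})
```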

One Global Model

From an engineering perspective, this is usually the simplest approach. The model is typically trained on a much larger dataset (comprising data from many, if not all, customers) and there is no additional training to be done when onboarding a new customer (the model is always ready to go).

However, the model is now trained on potentially different distributions (from different customers), which can lead to an overall loss of accuracy. There might also be subtle problems in the model that affect only a small subset of customers (and thus do not move overall KPIs), which, without proper monitoring, will be hard to debug and fix.
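Breaking a metric down by customer is one way to surface problems that the overall KPI hides. The following is a minimal sketch under assumed conventions: predictions are logged as `(customer_id, y_true, y_pred)` tuples, accuracy is the metric, and the `max_gap` threshold is arbitrary.

```python
from collections import defaultdict

def accuracy_by_customer(records):
    """Compute accuracy separately for each customer_id."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for customer_id, y_true, y_pred in records:
        total[customer_id] += 1
        correct[customer_id] += int(y_true == y_pred)
    return {cid: correct[cid] / total[cid] for cid in total}

def overall_accuracy(records):
    """Accuracy pooled over all customers (the 'overall KPI')."""
    return sum(int(y_true == y_pred) for _, y_true, y_pred in records) / len(records)

def flag_underperforming(records, max_gap=0.1):
    """Return customers whose accuracy trails the overall accuracy by more than max_gap."""
    overall = overall_accuracy(records)
    per_customer = accuracy_by_customer(records)
    return sorted(cid for cid, acc in per_customer.items() if acc < overall - max_gap)

# Usage: the global model looks healthy overall, but fails for customer "b".
records = [("a", 1, 1)] * 8 + [("b", 1, 0)] * 2
flagged = flag_underperforming(records)
```

Here the pooled accuracy is 0.8, which might pass a global alert threshold even though every prediction for customer "b" is wrong.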

One Model Per Customer Segment

This has the potential to be the best of both previous worlds. If segments are chosen appropriately, the data distributions will be relatively consistent across customers in a segment, and each segment may also end up with a large training dataset. With the right number of segments, the engineering complexity of the system can also be tuned.

On the flip side, choosing segments correctly is difficult since there are many ways to segment customers (geography, industry, etc). If done improperly, one might end up with the worst of both worlds.

Global Model + Transfer Learning

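Here, a global model is trained on data from all customers and then fine-tuned for each customer. This is only applicable to deep learning models, but it has the potential for high performance on all customers with far fewer data points per customer.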

However, deep learning might not be the right solution in many cases (for example, when you need very low serving latency or easy explainability). This method is still fairly new, so some exploration might be needed for your use cases.

Our Take

ML teams are often operating with constraints that are unique to their industry and company. While there are many good ways to build and deploy ML systems, having an understanding of the trade-offs involved is useful.

Twitter | Bad Labels in Public Datasets

In this Twitter thread (and associated blog post), Vincent Warmerdam explores how easy it is to find incorrect labels in a publicly available dataset, even one that was curated by researchers from Stanford and Google.

On this Google Emotions dataset, the author trains a simple high-bias, low-variance model and then ranks examples by how little confidence the model assigns to the labeled class. This surfaced plenty of mislabeled examples, such as in the image below:

This is similar to (and inspired by) the work of the team behind labelerrors.com, where they show problems with many popular datasets such as CIFAR, MNIST, etc. If you’re directly using publicly available data, you might want to use some simple heuristics to ensure that label quality is at an acceptable level.
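One such heuristic, loosely following the ranking idea described above, is sketched below with scikit-learn. It is an illustration and not the author's code. The use of logistic regression, out-of-fold predictions via `cross_val_predict`, and the synthetic two-blob dataset with deliberately flipped labels are all assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

def rank_suspect_labels(X, y, top_k=10):
    """Return indices of the examples whose given label receives the lowest
    out-of-fold predicted probability from a simple linear model."""
    model = LogisticRegression(max_iter=1000)
    probs = cross_val_predict(model, X, y, cv=5, method="predict_proba")
    classes = np.unique(y)                    # column order of predict_proba
    label_columns = np.searchsorted(classes, y)
    confidence_in_label = probs[np.arange(len(y)), label_columns]
    return np.argsort(confidence_in_label)[:top_k]

# Synthetic demo: two well-separated clusters with five labels flipped on purpose.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(-3.0, 1.0, size=(200, 2)),
    rng.normal(3.0, 1.0, size=(200, 2)),
])
y_clean = np.array([0] * 200 + [1] * 200)
flipped = [3, 50, 120, 250, 390]
y_noisy = y_clean.copy()
y_noisy[flipped] = 1 - y_noisy[flipped]

suspects = rank_suspect_labels(X, y_noisy, top_k=5)
```

The ranked indices are candidates for manual review, not confirmed errors: hard but correctly labeled examples can also score low.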

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com. If you like what we are doing, please tell your friends and colleagues to spread the word.