Issue #31: Refuel.ai. LLMs can label data better than humans. Autolabel.

Back with some news! We started a company called Refuel.ai - helping teams create clean, labeled datasets at the speed of thought.

Welcome to the 31st issue of the MLOps newsletter (hint: it’s a special one!).

Nihit and I have been busy building a company – Refuel.ai! We’re building a platform to clean, label and enrich datasets at human-level quality, using Large Language Models (LLMs). Great data leads to great models, but clean, labeled data is a huge bottleneck for machine learning teams.

We just launched out of stealth (media coverage) and are excited to share more details with you. In this issue, we’ll cover a benchmark for LLM-powered data labeling and Autolabel, a library to label, clean, and enrich data with your favorite LLM. Feel free to join us on Discord if you want to discuss LLMs or data labeling (or anything else) with us! 😊

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

LLMs can label data as well as humans, but faster

Motivation

LLMs are an incredibly powerful piece of technology. While it is well known that LLMs can write poems and pass the bar exam and the SAT, there is increasing evidence that, with supervision from domain experts, LLMs can label datasets with quality comparable to that of skilled human annotators.

What we did

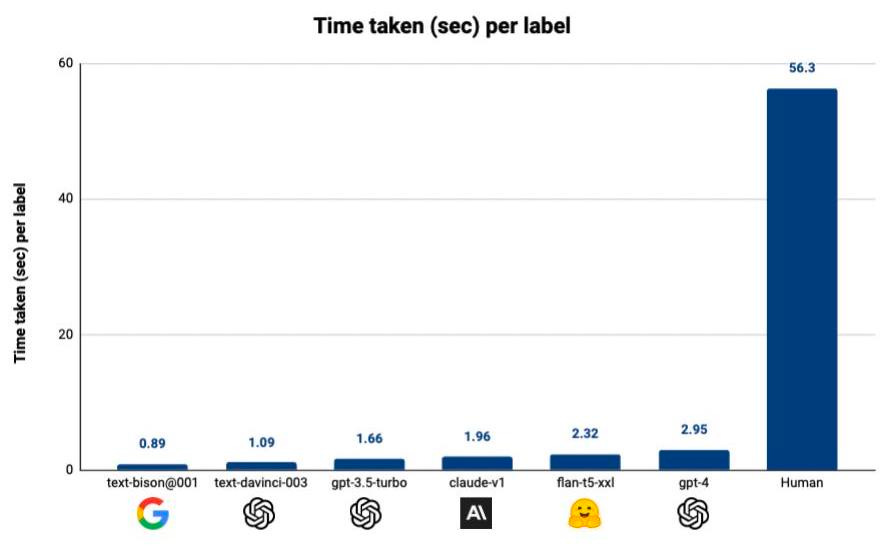

We evaluated the performance of LLMs against human annotators for labeling text datasets across a range of tasks (classification, question answering, etc.) on three axes: quality, turnaround time, and cost. We tested techniques for boosting LLM accuracy, such as few-shot prompting, chain-of-thought reasoning, and confidence estimation. We also propose a live benchmark for text data annotation tasks, to which the community can contribute over time.
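To make the two prompting techniques concrete, here is a minimal Python sketch that only assembles the prompt text (calling a model is left out). The `Example` dataclass, the `build_prompt` helper, and the prompt wording are hypothetical choices for illustration, not the prompt format used in the benchmark or in Autolabel.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Example:
    """A labeled demonstration shown to the LLM (hypothetical structure)."""
    text: str
    label: str


def build_prompt(
    guidelines: str,
    labels: list[str],
    examples: list[Example],
    item: str,
    chain_of_thought: bool = False,
) -> str:
    """Assemble a plain-text few-shot classification prompt (hypothetical helper)."""
    lines = [guidelines, "Categories: " + ", ".join(labels), ""]
    # Few-shot prompting: show a handful of already-labeled examples first.
    for ex in examples:
        lines += [f"Input: {ex.text}", f"Output: {ex.label}", ""]
    lines.append(f"Input: {item}")
    if chain_of_thought:
        # Chain-of-thought: ask the model to reason before committing to a label.
        lines.append("Explain your reasoning step by step, then give the final label.")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    guidelines="Classify the sentiment of each movie review.",
    labels=["positive", "negative"],
    examples=[
        Example("A delight from start to finish.", "positive"),
        Example("Two hours I will never get back.", "negative"),
    ],
    item="The plot dragged, but the acting was superb.",
)
print(prompt)
```

One common refinement is to pick the few-shot examples per item (for instance, the labeled examples most similar to the input) instead of using a fixed set.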

Results

State-of-the-art LLMs can label text datasets with quality equal to or better than that of skilled human annotators, but ~20x faster and ~7x cheaper.

For the highest-quality labels, GPT-4 is the best choice among out-of-the-box LLMs (88.4% agreement with ground truth, compared to 86% for skilled human annotators). For the best tradeoff between label quality and cost, GPT-3.5-turbo, PaLM-2, and open-source models like FLAN-T5-XXL are compelling.
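The agreement figures above measure how often a labeler's output matches the ground-truth label. Here is a minimal sketch of that metric, assuming it is plain exact-match agreement (the benchmark may define quality differently for some tasks). The `agreement` function is hypothetical and the labels are made-up toy data, not benchmark results.

```python
from __future__ import annotations


def agreement(predicted: list[str], ground_truth: list[str]) -> float:
    """Fraction of items whose predicted label exactly matches the ground-truth label."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("need at least one item")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(ground_truth)


# Toy data for illustration only (not benchmark results).
truth = ["spam", "ham", "ham", "spam", "ham"]
llm = ["spam", "ham", "spam", "spam", "ham"]
print(f"LLM agreement: {agreement(llm, truth):.1%}")  # 4 of 5 match -> 80.0%
```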

Confidence-based thresholding can be a very effective way to mitigate the impact of hallucinations and ensure high label quality.
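A minimal sketch of confidence-based thresholding, under stated assumptions: each LLM label comes with a confidence score in [0, 1] (how that score is estimated is out of scope here), a held-out validation set with ground truth is available, and labels below the chosen threshold would be routed to a human rather than accepted. The `Prediction` class and both functions are hypothetical, and the data is a toy example, not benchmark output.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    label: str         # label produced by the LLM
    confidence: float  # assumed to be in [0, 1]
    truth: str         # ground-truth label from a held-out validation set


def coverage_and_quality(preds: list[Prediction], threshold: float) -> tuple[float, float]:
    """Return (coverage, agreement) when only labels with confidence >= threshold are kept."""
    if not preds:
        raise ValueError("need at least one prediction")
    kept = [p for p in preds if p.confidence >= threshold]
    coverage = len(kept) / len(preds)
    if not kept:
        return coverage, float("nan")  # agreement is undefined when nothing is kept
    quality = sum(p.label == p.truth for p in kept) / len(kept)
    return coverage, quality


def pick_threshold(preds: list[Prediction], target_quality: float) -> Optional[float]:
    """Lowest threshold (hence highest coverage) whose kept labels meet target_quality."""
    for threshold in sorted({p.confidence for p in preds}):
        _, quality = coverage_and_quality(preds, threshold)
        if quality >= target_quality:
            return threshold
    return None  # no threshold reaches the target on this validation set


# Toy validation data for illustration only.
preds = [
    Prediction("spam", 0.98, "spam"),
    Prediction("ham", 0.95, "ham"),
    Prediction("spam", 0.90, "spam"),
    Prediction("ham", 0.62, "spam"),
    Prediction("spam", 0.55, "ham"),
    Prediction("ham", 0.40, "ham"),
]
t = pick_threshold(preds, target_quality=0.95)
print(t, coverage_and_quality(preds, t))  # 0.9 (0.5, 1.0)
```

Because raising the threshold can only shrink the set of accepted labels, scanning thresholds from low to high and stopping at the first one that meets the target gives the highest coverage among the qualifying thresholds.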

Conclusion

We think that LLM-powered labeling is the future for a vast majority of data cleaning, annotation, and enrichment efforts, and will continue to track the performance of many LLMs across a diversity of tasks in this ongoing benchmark.

You can also read this as a tweet thread: https://twitter.com/nihit_desai/status/1669752203949793281?s=20

Autolabel: Python library to label, clean, and enrich datasets with LLMs

We're open-sourcing Autolabel, a Python library to label, clean, and enrich text datasets with any Large Language Model (LLM) of your choice. With a few lines of code, you'll be labeling data at extremely high accuracy in a tiny fraction of the time that human annotation takes! Join us on Discord if you have any questions, or open an issue on GitHub to report a bug. If this is interesting, feel free to give us a star! ⭐

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com.

If you like what we are doing, please tell your friends and colleagues to spread the word. ❤️