Issue #8: Toronto ML Summit. GPT-2. ML Feedback Loops. Explainability Struggles. Judgy AI.

Welcome to the 8th issue of the ML Ops newsletter, which is also our final issue of 2020. Even with all that has happened this year, we have much to be thankful for, and we are grateful to all of you who support us by subscribing to this newsletter. We hope you have a wonderful start to 2021! 🎊

Now, on to the topics in this issue. This week we dive into talks from the Toronto ML Summit, discuss the implications of large language models like GPT-2, cover the importance of feedback loops in ML, have our musical taste judged by an “AI”, and more.

Thank you for subscribing, and if you find this newsletter interesting, forward this to your friends and support this project ❤️

Blog Post | Toronto Machine Learning Summit 2020

James Le attended the Toronto Machine Learning Summit 2020 and wrote this comprehensive post covering many interesting talks. While the post covers nearly 20 talks from the summit, we share our thoughts on the ones we found especially relevant to this newsletter.

Human-in-the-loop AI for comment moderation at the Washington Post

The Washington Post (WP) receives more than 2 million comments a month. A small percentage of them are submitted by trolls and bots and should be filtered out or demoted. ModBot is WP’s solution for scalable comment moderation: it combines machine learning with human moderators to maintain the quality of conversations.

The ML architecture and modeling choices for this problem are interesting too. Here, though, we want to focus on one specific aspect of the solution: keeping human moderators in the loop alongside the ML models, during both training and inference.

During training, ModBot relies on human moderators to obtain accurate ground truth labels.

During inference, human moderators act as a second-pass check and make decisions on comments that the model cannot auto-decision. Over time, these human review labels also become additional training data that improves model performance.
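Here is a minimal Python sketch that makes the routing concrete. It assumes the model emits a toxicity score between 0 and 1 and that two thresholds decide which comments are auto-decisioned. The class name, thresholds, and labels are our own hypothetical choices and are not details of ModBot’s actual implementation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ModerationQueue:
    """Hypothetical router: auto-decide confident scores, send the rest to humans."""
    low: float = 0.1   # assumed threshold: at or below this toxicity score, auto-approve
    high: float = 0.9  # assumed threshold: at or above this toxicity score, auto-reject
    review_queue: List[str] = field(default_factory=list)
    new_labels: List[Tuple[str, str]] = field(default_factory=list)

    def route(self, comment_id: str, score: float) -> str:
        if score <= self.low:
            return "approve"
        if score >= self.high:
            return "reject"
        # Uncertain middle band: a human moderator makes the call.
        self.review_queue.append(comment_id)
        return "human_review"

    def record_human_label(self, comment_id: str, label: str) -> None:
        # The human decision resolves the comment AND becomes new training data.
        self.review_queue.remove(comment_id)
        self.new_labels.append((comment_id, label))
```

Periodically retraining on `new_labels` closes the loop. Moving the two thresholds trades moderator workload against the number of errors the model makes on its own.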

This general setup, which combines ML with human review to scale moderation, is a common pattern across many “integrity” domains: fraud detection in online transactions, detecting violence and hate speech in YouTube videos, detecting misinformation on platforms like Twitter and Facebook, etc. It is an open question to what extent this workflow is “platformizable”, and it is a space we’ll be monitoring closely.

From Model Explainability to Model Interpretability

Professor Cynthia Rudin from Duke University discussed the differences between explaining black-box model predictions and using inherently interpretable ML models in high-risk ML applications such as criminal justice.

The motivation for preferring model interpretability over post hoc model explanations:

Model accuracy vs. interpretability tradeoff: It is commonly assumed that interpretable models must sacrifice accuracy. That is often true for unstructured data such as raw text and images. For structured data it often is not, and simpler “interpretable” models frequently perform as well as more complex black-box models.

Post hoc explanation methods produce explanations that are not faithful to what the original model computes. This is necessarily the case: a completely faithful explanation would be equivalent to the original model, which would make the original model redundant.

The talk also covered recent research advances in interpretable ML. One is CORELS (Certifiably Optimal Rule Lists), which produces rule lists over a categorical feature space; rule lists are inherently interpretable. Another is This Looks Like That, which takes an “instance matching” approach: it dissects an input image into prototypical parts and matches them to similar parts from a bank of prototypes to make a final classification.
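Part of the appeal of rule lists is that the model is its own explanation. The Python sketch below shows the shape of one: an ordered if / else-if chain over categorical features, where the rule that fires doubles as the explanation. The rules and feature names are made up for illustration. Unlike CORELS, nothing here is learned from data or certified optimal.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, str]
# Each rule: (condition, human-readable description, prediction).
Rule = Tuple[Callable[[Features], bool], str, str]

# Hypothetical, hand-written rules over categorical features. CORELS would
# learn (and certify) such a list from data; this sketch does not.
RULES: List[Rule] = [
    (lambda x: x["age"] == "18-20" and x["priors"] == "2-3", "age=18-20 AND priors=2-3", "yes"),
    (lambda x: x["priors"] == ">3", "priors>3", "yes"),
]
DEFAULT_PREDICTION = "no"


def predict(x: Features) -> Tuple[str, str]:
    """Return (prediction, rule that fired); the fired rule is the explanation."""
    for condition, description, label in RULES:
        if condition(x):  # rules are checked strictly in order: if / else if / ...
            return label, description
    return DEFAULT_PREDICTION, "else (default)"
```

For example, `predict({"age": "30-40", "priors": ">3"})` returns `("yes", "priors>3")`. The explanation is exactly the computation the model performed, so it cannot be unfaithful.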

Evolution of Machine Learning Pipelines

Benedikt Koller from Maiot shared real-world learnings from putting deep learning models into production with a goal of ensuring reproducibility so that transitions from one experiment to another are as quick as possible.

Pipeline 0: Convert known-good code into a pipeline

Pipeline 25: Use declarative configurations instead of reusing code for faster reproduction

Pipeline 100: Use Apache Beam running on Google Cloud Dataflow (Beam also supports Spark and Flink runners) to maintain acceptable speeds on TB-size datasets

Pipeline 101: Train models with distributed workers on cloud platforms

Pipeline 250: Cache each stage of the pipeline to enable faster iterations

Pipeline 1000: Automate the serving/deployment layer for end-to-end ML projects

This progression likely mirrors how many ML teams evolve their own pipelines. Check out Maiot’s open-source framework for reproducible pipelines, ZenML.
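Two of these steps fit together naturally: declarative configuration (Pipeline 25) and per-stage caching (Pipeline 250). Here is a minimal Python sketch under our own assumptions. The pipeline is a plain dict of stage parameters, and each stage’s output is cached under a hash of its name, its parameters, and everything upstream of it. The stage functions and config keys are hypothetical, and this is not ZenML’s API.

```python
import hashlib
import json
from typing import Any, Callable, Dict, List

# Hypothetical declarative config: stages run in the dict's insertion order.
CONFIG: Dict[str, Dict[str, Any]] = {
    "split": {"train_fraction": 0.8},
    "preprocess": {"scale": 2.0},
}

_cache: Dict[str, Any] = {}  # stage outputs, keyed by content hash
stage_runs: Dict[str, int] = {"split": 0, "preprocess": 0}  # counts real executions


def split(data: List[float], train_fraction: float) -> List[float]:
    stage_runs["split"] += 1
    return data[: int(len(data) * train_fraction)]


def preprocess(data: List[float], scale: float) -> List[float]:
    stage_runs["preprocess"] += 1
    return [v * scale for v in data]


STAGES: Dict[str, Callable[..., Any]] = {"split": split, "preprocess": preprocess}


def cache_key(stage: str, params: Dict[str, Any], upstream_key: str) -> str:
    """Hash the stage name, its parameters, and the key of everything upstream."""
    payload = json.dumps({"stage": stage, "params": params, "upstream": upstream_key}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run(data: List[float], config: Dict[str, Dict[str, Any]], data_version: str) -> List[float]:
    """Run stages in order, reusing any stage whose inputs and params are unchanged."""
    key = data_version  # assumed to uniquely identify the raw input data
    for stage, params in config.items():
        key = cache_key(stage, params, key)
        if key not in _cache:
            _cache[key] = STAGES[stage](data, **params)
        data = _cache[key]
    return data
```

Re-running with an identical config executes nothing. Changing only `scale` re-runs `preprocess` but reuses the cached `split` output, which is where the faster iterations come from.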

For a quick overview of all the Summit talks the author covered, read his Twitter thread.

Berkeley AI Research Blog | Does GPT-2 Know Your Phone Number?

Background

The last couple of years have seen the rise of extremely large language models in NLP, with some of the largest coming from OpenAI (the latest being GPT-3). These models are trained on large corpora of publicly available text data (Common Crawl, books, Wikipedia, etc.).

The Problem

In their recent paper, the authors evaluate how large language models memorize and regurgitate rare snippets of their training data. Focusing on GPT-2, they find that at least 0.1% of its text generations (a very conservative estimate) contain long verbatim strings that are “copy-pasted” from a document in its training set.
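To show what “long verbatim strings” means in practice, here is a simplified Python sketch. It flags a generation if any run of n consecutive whitespace-separated tokens also appears in a training document. It illustrates the idea only and is not the methodology used in the paper. The toy corpus and generations are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

NGram = Tuple[str, ...]


def ngrams(tokens: List[str], n: int) -> Iterable[NGram]:
    """All windows of n consecutive tokens (none if the text is shorter than n)."""
    return (tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def build_index(training_docs: List[str], n: int) -> Set[NGram]:
    """Collect every n-token window in the (toy) training corpus."""
    index: Set[NGram] = set()
    for doc in training_docs:
        index.update(ngrams(doc.split(), n))
    return index


def memorized_fraction(generations: List[str], index: Set[NGram], n: int) -> float:
    """Fraction of generations containing at least one n-token span seen verbatim in training."""
    if not generations:
        return 0.0
    hits = sum(any(g in index for g in ngrams(text.split(), n)) for text in generations)
    return hits / len(generations)
```

With a single training document `"call alice at 555 0100 after noon today"` and n = 5, the generation `"please call alice at 555 0100 after noon"` is flagged. An unrelated sentence is not. At the scale of real training sets, an exact set of n-grams like this quickly becomes impractical.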

This memorization is an obvious concern for private training data (user conversations, PII), since the model might inadvertently regenerate sensitive information. A more immediate concern, however, is that the public data these models are trained on also includes vast amounts of sensitive data: emails, addresses, phone numbers, code, hacked or copyrighted information, etc.

Legal frameworks for addressing these concerns are very nascent or non-existent, so we expect the industry to grapple with them for the next few years.

Our Take

There aren’t many “solutions” for this today. Our recommendations for folks using these language models in production are threefold. First, be mindful of where all your training data comes from (even for pre-trained models), and note anything that might be sensitive. Second, in use cases that involve text generation, add heuristics and checks that constrain what the overall system can output (especially if you rely on the model to produce “factual” statements). Finally, set up feedback mechanisms and monitoring so that unknown edge cases can be discovered easily.
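One example of an output-side check is to redact email addresses and phone numbers from generated text before it reaches users. The minimal Python sketch below does this. The regular expressions are deliberately simple assumptions (they only cover 10-digit, US-style phone formats, for instance) and would miss plenty of real-world PII.

```python
import re
from typing import List, Tuple

# Deliberately simple patterns (assumptions): common email shapes and
# 10-digit phone numbers like 555-123-4567, 555.123.4567 or 5551234567.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "phone": re.compile(r"\b\d{3}[\s.-]?\d{3}[\s.-]?\d{4}\b"),
}


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace PII matches with placeholders; also report which PII types were found."""
    found: List[str] = []
    for name, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{name.upper()} REDACTED]", text)
        if count:
            found.append(name)
    return text, found
```

The function also returns the list of PII types it found, which can be logged for the monitoring recommended in the third point. Redacting without recording what was redacted would leave no signal to monitor.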

Blog Post | Completing the Machine Learning Loop

This is a very well-written article by Jimmy Whitaker about the need to complete feedback loops in machine learning systems.

Machine learning models are never really “done.” They need to ingest new data to learn new insights, and that’s why we need to think of the process as a loop rather than a straight line forward. The process starts over as new data flows into the system, forming a continuous, virtuous circle of model creation and deployment steps.

And this ability to iterate (“completing the loop”) is the process that’s arguably broken in machine learning/data science today. That’s because it usually relies on a multitude of manual steps that are highly error-prone, and baking them all into a robust, production platform is even harder.

In other words, it’s all about the iteration.

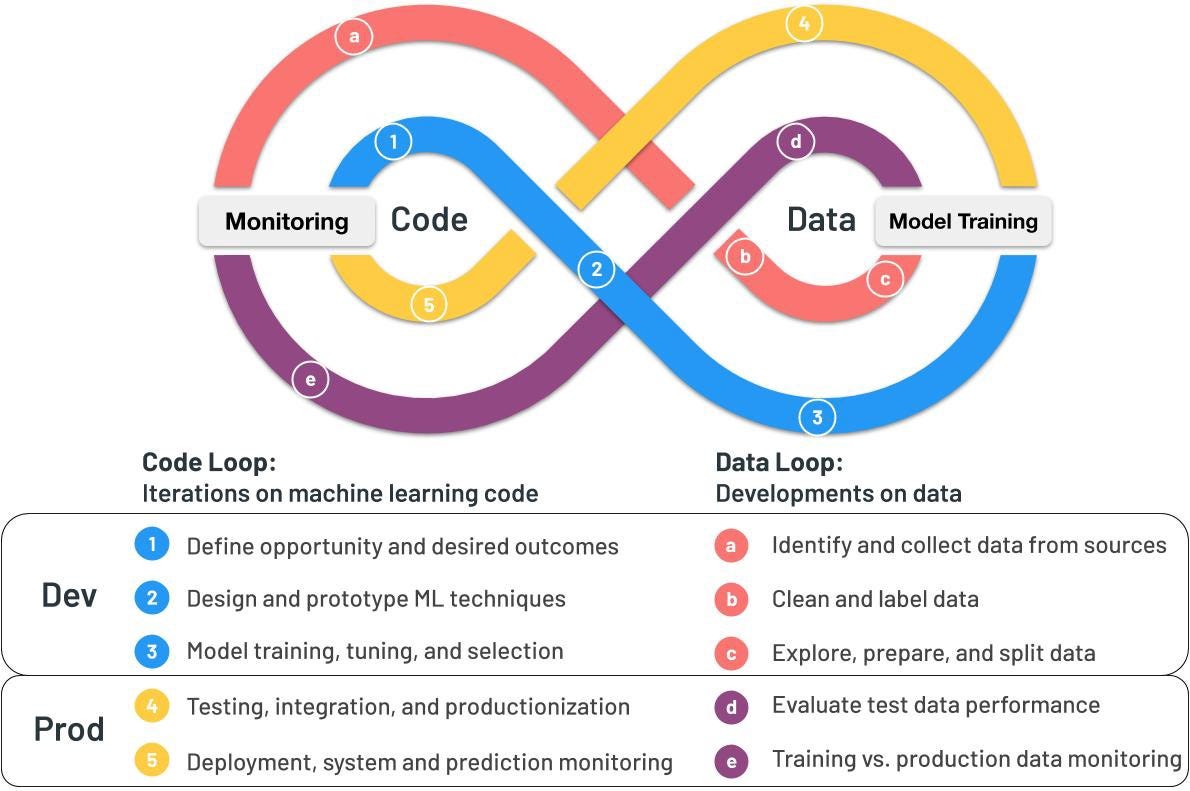

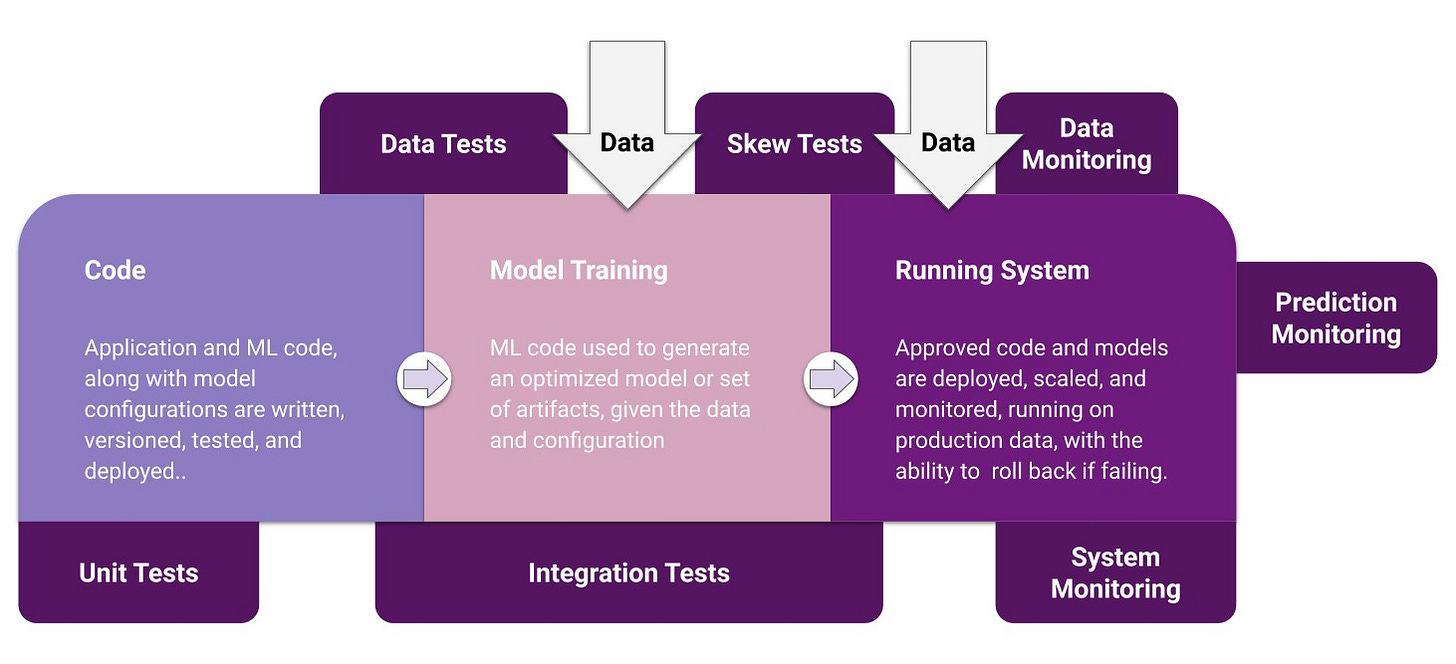

The article explains that machine learning development differs from software development because it has two moving pieces: code and data. We therefore need a code loop to produce high-quality software and a data loop to produce reliable, high-quality data. The two loops then need to be coupled to allow quick and reliable iterations.
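One lightweight way to couple the two loops is to record two things for every trained model: the code version and a fingerprint of the exact data it was trained on. A change to either one then triggers a new iteration. The Python sketch below illustrates that idea. The record fields and helper names are our own hypothetical choices, not an API from the article.

```python
import hashlib
from dataclasses import dataclass
from typing import List


def fingerprint(rows: List[str]) -> str:
    """Short content hash of a dataset (rows are assumed not to contain newlines)."""
    h = hashlib.sha256()
    for row in rows:
        h.update(row.encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()[:12]


@dataclass(frozen=True)
class ModelRecord:
    code_version: str  # e.g. a git commit SHA, assumed to be supplied by the caller
    data_version: str  # fingerprint of the exact training data


def needs_retraining(record: ModelRecord, code_version: str, rows: List[str]) -> bool:
    """Start a new iteration of the loop when either the code or the data has moved."""
    return record.code_version != code_version or record.data_version != fingerprint(rows)
```

Because the record pins both versions, any past model can be traced back to the code and data that produced it. That lineage is what makes iterations reproducible.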

Code vs Data

The article goes into some of the ways in which data is quite different from code:

Data is bigger in size and quantity

Data grows and changes frequently

Data has many types and forms (video, audio, text, tabular, etc.)

Data has privacy challenges, beyond managing secrets (GDPR, CCPA, HIPAA, etc.)

Data can exist in various stages (raw, processed, filtered, feature-form, model artifacts, metadata, etc.)

Many mistakes in ML systems are caused by data issues rather than code issues.

Biased data is buggy data — if it’s unreliable or doesn’t represent the domain then it’s a bug.

And these data bugs need to be tested for, just like bugs in code.
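Here is what such data tests might look like, as a minimal Python sketch. It assumes a tabular dataset of rows with hypothetical `age` and binary `label` columns. Each check returns a readable description of the data bug instead of raising. The final check is a crude stand-in for “doesn’t represent the domain”, and its thresholds are arbitrary assumptions.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def check_data(rows: List[Row], min_rows: int = 1) -> List[str]:
    """Return human-readable descriptions of data bugs; an empty list means all checks passed."""
    problems: List[str] = []
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} row(s), got {len(rows)}")
    for i, row in enumerate(rows):
        age = row.get("age")
        if age is None:
            problems.append(f"row {i}: missing age")
        elif not isinstance(age, (int, float)) or not 0 <= age <= 120:
            problems.append(f"row {i}: age {age!r} out of range")
        label = row.get("label")
        if label not in (0, 1):
            problems.append(f"row {i}: label {label!r} not in {{0, 1}}")
    # Crude representativeness check: a near-constant label is treated as a data bug.
    if rows:
        positive_rate = sum(1 for row in rows if row.get("label") == 1) / len(rows)
        if not 0.05 <= positive_rate <= 0.95:
            problems.append(f"positive label rate {positive_rate:.2f} looks unrepresentative")
    return problems
```

Running checks like these on every new batch of data, before training, plays the same role in the data loop that unit tests play in the code loop.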

This post has a lot of wisdom in it - we would encourage you to read it!

Paper | The Struggles and Subjectivity of Feature-Based Explanations: Shapley Values vs. Minimal Sufficient Subsets

Explainability

We have previously discussed model explainability when covering the recent Executive Order on Trustworthy AI. We believe the need for human-intelligible explanations of model predictions will only increase as ML applications increasingly affect the physical world (e.g. self-driving cars). For anyone interested in an overview of current interpretability techniques, this book by Christoph Molnar is a fantastic resource.

This Paper

Here, we cover a recent paper by researchers at the University of Oxford that compares and contrasts two well-known feature-based explanation techniques: Shapley explainers and minimal sufficient subsets. Check out a discussion thread about the paper here. A few highlights that stood out for us:

Different methods can provide different “ground truth” explanations for a model’s predictions, despite the seemingly implicit assumption that explainers should look for one specific feature-based explanation.

Understanding the output of feature-based explainers on synthetic examples (where the ground truth is known) can be critical to ‘explain the explainers’. For example, how do they deal with redundant features? How do they deal with correlated features?

The use of multiple explanation methods together, and triangulating their output for a given prediction, might lead to a better understanding of the decision-making process of a model.
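The first two points can be reproduced on a tiny synthetic example where the ground truth is known. The Python sketch below is our own toy rather than an experiment from the paper. Its model predicts 1 if either of two redundant features is on and ignores a third. We assume a baseline of all zeros, so a feature “outside” a subset is set to 0. The paper’s exact definitions may differ.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List

Model = Callable[[List[int]], int]


def subset_value(model: Model, x: List[int], baseline: List[int], subset: FrozenSet[int]) -> int:
    """Model output when features in `subset` keep their values and the rest are set to the baseline."""
    z = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(model: Model, x: List[int], baseline: List[int]) -> List[Fraction]:
    """Exact Shapley values by enumerating every coalition (exponential in len(x))."""
    n = len(x)
    phi: List[Fraction] = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = Fraction(0)
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = Fraction(factorial(k) * factorial(n - k - 1), factorial(n))
            for s in combinations(others, k):
                s_set = frozenset(s)
                marginal = subset_value(model, x, baseline, s_set | {i}) - subset_value(model, x, baseline, s_set)
                total += weight * marginal
        phi.append(total)
    return phi


def minimal_sufficient_subsets(model: Model, x: List[int], baseline: List[int]) -> List[FrozenSet[int]]:
    """Smallest feature subsets that alone reproduce the model's prediction on x."""
    target = model(x)
    found: List[FrozenSet[int]] = []
    for k in range(len(x) + 1):  # increasing size, so supersets of a found subset are skipped
        for s in combinations(range(len(x)), k):
            s_set = frozenset(s)
            if subset_value(model, x, baseline, s_set) == target and not any(f < s_set for f in found):
                found.append(s_set)
    return found


def redundant_or(z: List[int]) -> int:
    # Predicts 1 if feature 0 OR feature 1 is on; feature 2 is ignored.
    return int(z[0] == 1 or z[1] == 1)
```

For `x = [1, 1, 1]`, the two methods disagree. The Shapley values are `[1/2, 1/2, 0]`, splitting credit evenly between the redundant features. The minimal sufficient subsets are `{0}` and `{1}`, two different explanations that each say one feature alone suffices. Neither answer is wrong: they answer different questions, which is why “explaining the explainers” on cases like this is useful. Both functions enumerate all feature subsets, so they only scale to a handful of features.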

Our Take

This paper introduces an important insight to consider when deploying feature-based explainers in any real-world application: the choice of technique can dramatically change the nature of the explanations. However, the paper does not explicitly suggest how to address this gap, or which criteria to consider when choosing among explainers. Further, comparing Shapley values and minimal sufficient subsets on a real-world dataset could have made the insights more compelling.

Fun | This AI judges your awful taste in music

HAL-9000, Alexa, Siri, Cortana, Jarvis. You’ve read about AI assistants that will radically improve your life. Perhaps you even use some of them. But we may just have a new winner on our hands: this “AI assistant” uses the most advanced, state-of-the-art technology (read: if-else decision trees) to have an informed conversation with you about your Spotify play history. Happy Holidays 2020!

This is what it had to say about @rishabh’s Spotify:

Other folks on the Internet haven’t fared much better.

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is only Day 1 for MLOps and this newsletter and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com.
