Issue #23: AI Regulations. Efficient Active Learning. Model Health Assurance. Vertex Matching Engine.

Welcome to the 23rd edition of the MLOps newsletter.

In this issue, we cover updates on AI regulations and frameworks in the EU and the US, a recent paper on web-scale active learning, details about LinkedIn’s internal platform for model observability, and a summary of Google’s new scalable vector similarity search.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

AI Regulations in the EU and the United States

Demystifying the Draft EU AI Act

A few months ago, we shared the EU's proposed AI regulations while they were still under consideration (along with some of the early reactions).

Now, Michael Veale has put together a fascinating deep dive into the Artificial Intelligence Act. We recommend reading through the Twitter thread (or their paper for the full analysis) -- our overwhelming takeaway is that writing policy is hard!

Let’s look at a couple of the specific shortcomings in the AI Act they write about:

The Act prohibits manipulative systems. In their formulation, being “manipulative” requires “intent” from the system and the system must exert influence through a “vulnerability” such as age, disability, or through “subliminal techniques”. However, there is no clause for whether the user was harmed in any way or not. They also explicitly exclude manipulation from systems based on ratings and reputation. The end result is that almost all online systems will be excluded from being judged under this Act (and, of course, no vendor will admit an “intent” to manipulate).

For high-risk AI systems (biometric identification, law enforcement, etc.), the Act lays out “essential requirements” including data quality criteria of accuracy, representativeness, and completeness. However, the European Commission plans to ask two private organizations to create a paid “harmonized standard”. Such private organizations are heavily lobbied and could drift away from the “essential requirements”, which is likely to make this regulation difficult to enforce.

United States GAO AI Accountability Framework

This is a report from the Government Accountability Office (GAO) that details an accountability framework for federal agencies when it comes to using AI responsibly.

We won’t go into the details here, but their focus on data quality, governance, monitoring, and performance tracking is spot on!

Paper | Efficient Active Learning with Similarity Search

In a recent paper, researchers from Stanford, Facebook AI, and the University of Wisconsin-Madison introduced SEALS (Similarity Search for Efficient Active Learning and Search).

Problem

For web-scale datasets, active learning approaches are often intractable because of the sheer size of the data: billions of unlabeled examples. Existing approaches search globally for the optimal examples to label, scaling linearly or even quadratically with the size of the unlabeled data. In effect, the time complexity of active learning for such use cases is at least O(dataset size * number of sampling iterations).

Proposed Solution

This paper improves the computational efficiency of active learning and search methods by restricting the candidate pool for labeling to the nearest neighbors of the currently labeled set. The intuition behind this idea is that learned embeddings from general-purpose pre-trained models tend to cluster examples of many rare concepts together. This structure can then be exploited to improve the efficiency of active learning: considering only the nearest neighbors of the currently labeled examples in each selection round allows us to eventually reach all, or most, of the positive examples for a given concept without having to exhaustively scan all of the unlabeled data.
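The candidate-pool restriction described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function names (`cosine`, `k_nearest`, `candidate_pool`) and the toy embeddings are assumptions, and the neighbor lookup here is a brute-force scan standing in for the similarity search index a real system would use.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def k_nearest(query_idx, embeddings, k):
    """Indices of the k most similar examples to embeddings[query_idx].

    Brute force for clarity only; at web scale this lookup is assumed to be
    served by an (approximate) nearest neighbor index instead.
    """
    scored = [
        (cosine(embeddings[query_idx], e), i)
        for i, e in enumerate(embeddings)
        if i != query_idx
    ]
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [i for _, i in scored[:k]]

def candidate_pool(labeled, embeddings, k):
    """Unlabeled neighbors of the labeled set: the only examples that a
    selection round needs to score, instead of the whole dataset."""
    pool = set()
    for idx in labeled:
        pool.update(k_nearest(idx, embeddings, k))
    return pool - set(labeled)

# Toy data: examples 0-2 point in one direction, 3-4 in another.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9]]
print(candidate_pool({0}, embeddings, k=2))  # {1, 2}
```

Once some of the candidates are labeled, calling `candidate_pool` again with the larger labeled set expands the pool to their neighbors, which is how the search gradually spreads through a cluster.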

Results

The paper evaluated the proposed selection strategy on three datasets: ImageNet, OpenImages, and an anonymized and aggregated dataset of 10 billion publicly shared images on the web. We recommend reading the paper to learn more about the experiment details and parameters for each dataset. The overall takeaway is that downstream classifiers trained on the resulting labeled datasets achieved mean average precision and recall similar to those obtained with the traditional global approach (the baseline), while the computational cost of selection dropped by up to three orders of magnitude.

Risks

As identified by the authors in the paper, this gain in computational efficiency does come at the cost of some added complexity that should not be ignored:

This approach requires a similarity search index that can index and search over the entire dataset. There are some open-source implementations of approximate nearest neighbor search that can be considered here.

There is a risk that the embeddings used for this task do not effectively cluster examples that belong to a given concept. In that case, restricting labeling selections to the nearest neighbors of the currently labeled data might not be an effective strategy.

LinkedIn Engineering Blog | Model Health Assurance at LinkedIn

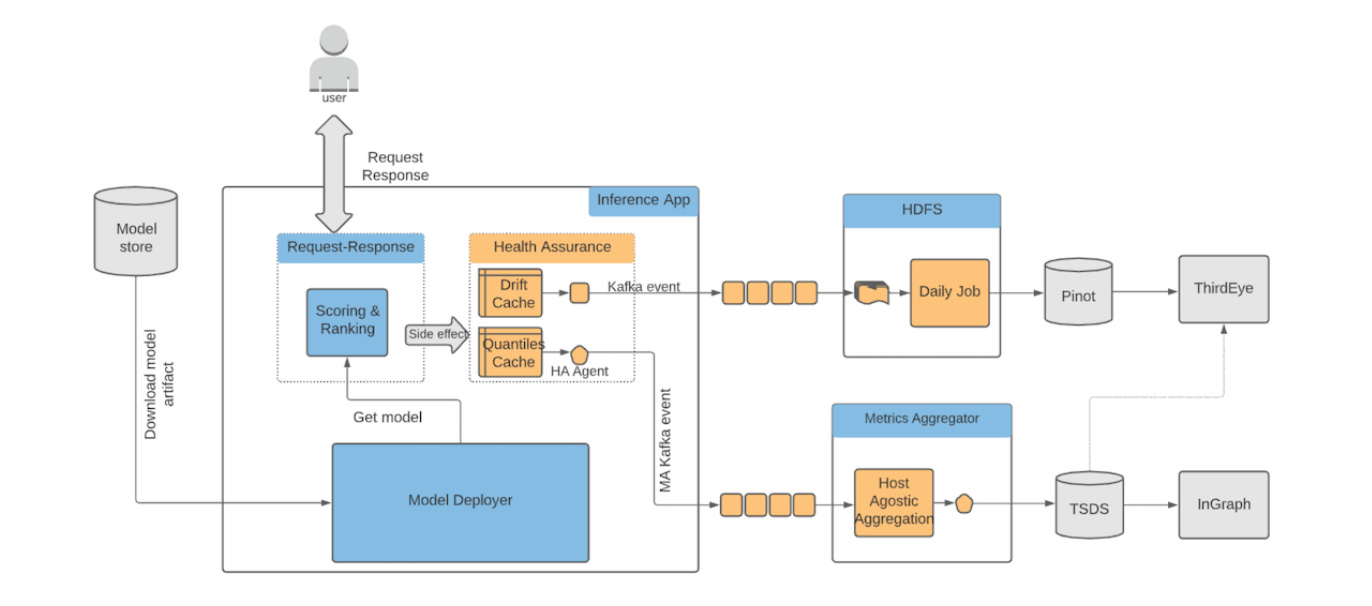

This recent article by LinkedIn shares some of the design principles and details of Model Health Assurance, LinkedIn’s in-house platform for ML model monitoring and observability (which is, in turn, part of its Pro-ML platform for training and serving ML models online). We share some highlights from the article below:

Motivation: Monitoring ML models post-deployment is a recurring problem for ML engineers at LinkedIn. Health Assurance (HA) is a platform to provide engineers with tools and systems that help them identify issues with production models faster and help debug them.

Core functionality: HA plugs into the online model inference code path to measure a variety of system indicators (e.g. traffic volume, latency, resource usage of hosts, etc) and data indicators (feature statistics, prediction drift, etc).

Consumption: A batch job computes feature and prediction statistics for all deployed models and pushes them to Pinot (an open-source distributed OLAP data store) and ThirdEye (LinkedIn’s in-house monitoring & alerting tool) which then alerts the downstream team that owns the model.

Easy onboarding: HA has two types of functionalities: a basic set of metrics & alerts that are autoconfigured and supported out of the box for any model that goes to production; and another set of custom metrics & features to track for a specific model (configured by ML engineers).
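To make the idea of a "data indicator" more concrete, here is a small sketch of one commonly used drift statistic, the population stability index (PSI), which compares the binned distribution of a feature or prediction in a baseline window against a current window. The article does not say which statistics HA computes, so the choice of PSI, the function names, and the bin edges below are all assumptions for illustration.

```python
import math

def bin_fractions(values, edges):
    """Fraction of values falling into each bin defined by sorted edges.

    Values outside the edge range are clamped into the first or last bin.
    Assumes `values` is non-empty.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        b = 0
        while b < len(counts) - 1 and v >= edges[b + 1]:
            b += 1
        counts[b] += 1
    return [c / len(values) for c in counts]

def psi(baseline, current, edges, eps=1e-6):
    """Population stability index between two samples of the same quantity.

    0.0 means the binned distributions match; larger values mean more drift.
    `eps` avoids log(0) and division by zero for empty bins.
    """
    p = bin_fractions(baseline, edges)
    q = bin_fractions(current, edges)
    total = 0.0
    for pi, qi in zip(p, q):
        pi, qi = max(pi, eps), max(qi, eps)
        total += (qi - pi) * math.log(qi / pi)
    return total

edges = [0.0, 0.5, 1.0]
training_scores = [0.1, 0.2, 0.3, 0.4]
serving_scores = [0.6, 0.7, 0.8, 0.9]
print(psi(training_scores, training_scores, edges))  # 0.0 (no drift)
print(psi(training_scores, serving_scores, edges) > 0)  # True (scores shifted)
```

A batch job along these lines could emit one such number per feature per model, which is the kind of time series an alerting tool can then threshold.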

New Product Alert: Vertex Matching Engine by Google

Google Cloud recently announced the release of Vertex Matching Engine, a fully managed cloud solution for fast and scalable approximate nearest neighbor (vector similarity) search.

What is Nearest Neighbor Search?

Embedding representations, a learned mapping of raw content to a dense n-dimensional space, are a foundational tool for any ML engineer. While embeddings are useful for all kinds of machine learning tasks as general feature representations, one class of powerful applications they enable is embedding-based search.

Compared to traditional TF-IDF-based retrieval, which searches a database of documents based on token matches, embedding-based search can match queries and documents based on semantic properties learned by the embeddings. To answer a query, we map the query to the embedding space and then find, among all database embeddings, the ones closest to the query (i.e. nearest neighbor search).
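As a point of reference, the exact version of this lookup is easy to write down. The sketch below assumes the query has already been mapped to the embedding space by some model (not shown) and uses squared Euclidean distance; the function names and toy vectors are illustrative only.

```python
import heapq

def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def nearest_neighbors(query, database, k):
    """Exact (brute-force) search: compare the query with every stored
    embedding and return the indices of the k closest, nearest first."""
    return heapq.nsmallest(
        k,
        range(len(database)),
        key=lambda i: squared_distance(query, database[i]),
    )

database = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]  # document embeddings
query = [0.9, 1.2]                               # embedded query
print(nearest_neighbors(query, database, k=2))   # [1, 0]
```

Every query touches every stored vector, so the cost grows linearly with the database size. That is the motivation for approximate nearest neighbor indexes, which trade a small amount of recall for much lower latency.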

Overview of Vertex Matching Engine

While many models used to generate embeddings are open source and freely available (e.g. Universal Sentence Encoder for text or ResNet for images), using them for search/similarity applications is still hard because traditional databases are not optimized for nearest-neighbor search queries.

Vertex Matching Engine is a managed solution by Google Cloud for fast and scalable approximate nearest neighbor search. It is powered by ScaNN (the same technology that Google uses internally for Google Search, YouTube, etc.). It enables real-time search, with latency on the order of milliseconds, over databases on the order of billions of vectors. Based on empirical observations from teams within Google, Vertex Matching Engine can achieve 95-98% recall compared to brute-force nearest neighbor search. Furthermore, GCP handles all the scaling requirements based on database size and query load and allows for index updates with zero downtime.
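For readers unfamiliar with how recall is measured for approximate search: for each query, take the true top-k neighbors found by brute force, and count what fraction of them the approximate index also returned. A minimal sketch follows; the function names and example IDs are made up for illustration and are not part of any Google API.

```python
def recall_at_k(approx_ids, exact_ids):
    """Fraction of the exact top-k neighbors that the approximate
    search also returned for a single query."""
    exact = set(exact_ids)
    if not exact:
        return 1.0
    return len(exact & set(approx_ids)) / len(exact)

def mean_recall(approx_results, exact_results):
    """Average recall over a batch of queries (lists of ID lists)."""
    scores = [recall_at_k(a, e) for a, e in zip(approx_results, exact_results)]
    return sum(scores) / len(scores)

exact = [3, 7, 9, 12]    # brute-force top-4 for one query
approx = [3, 7, 12, 40]  # what an approximate index returned
print(recall_at_k(approx, exact))  # 0.75
```

A reported recall of 95-98% therefore means that, on average, only a few of every hundred true nearest neighbors are missed relative to an exhaustive scan.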

To Get Started

If you’re interested in experimenting with Vertex Matching Engine or are evaluating it for a potential production use case, you can take a look at the documentation here, or this sample notebook.

Twitter Thread: Dealing with Uncertainty in ML

Sara Hooker shares two interesting papers that were presented at ICML. Both papers explore what happens when real-world, noisy data is brought to bear on the ML training process.

The first paper discusses up-weighting samples with a higher loss during training, on the assumption that the model has more to learn from such examples. However, the authors find that when data is corrupted (or the level of noise is high enough), up-weighting can end up hurting the final trained model. High-quality data is a prerequisite for such training acceleration techniques.
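A toy example helps show why this can backfire. The sketch below uses a generic loss-proportional weighting scheme, which is an assumption for illustration and not necessarily the scheme studied in the paper; the function names and numbers are made up.

```python
import math

def log_loss(p, y):
    """Binary cross-entropy for predicted probability p and label y (0 or 1)."""
    p = min(max(p, 1e-12), 1 - 1e-12)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def loss_weights(probs, labels):
    """Per-sample weights proportional to the current loss, normalized to sum to 1."""
    losses = [log_loss(p, y) for p, y in zip(probs, labels)]
    total = sum(losses)
    return [loss / total for loss in losses]

# Three examples that are all truly positive; the third was mislabeled as 0.
probs = [0.9, 0.8, 0.95]
labels = [1, 1, 0]
weights = loss_weights(probs, labels)
print([round(w, 2) for w in weights])
```

The mislabeled third example has by far the highest loss, so it receives most of the weight: the scheme concentrates training effort on exactly the sample that carries wrong information.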

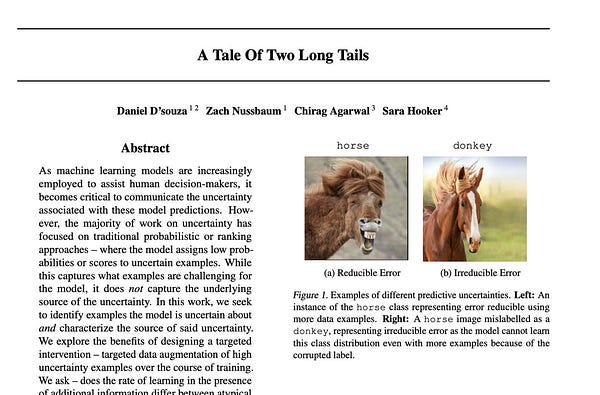

The second paper breaks down error cases into reducible errors (outliers and edge cases) and irreducible errors (super noisy or mislabeled examples). If such errors can be categorized, then model training could be improved by cleaning the noisy examples and augmenting the outliers or atypical examples.

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com. If you like what we are doing, please tell your friends and colleagues to spread the word.