Issue #3: State of AI. Behavioral testing of ML models. Dynamic benchmarks. Data versioning. MadeWithML.

This was a fun issue for us to compile: we look at the role of MLOps in the State of AI Report, discuss how to sanity-test ML models, dive into Facebook AI’s tool for using humans in the loop to augment ML benchmarks, and much more.

We are always excited to hear from you, our readers. We are continuing our tradition of including links and experiences that you shared with us (scroll to the bottom to find them!).

If you find this newsletter interesting, forward it to your friends and support this project ❤️

Deck | State of AI 2020

The State of AI Report covers the important trends in AI (Research, Talent, Industry and Government). The full deck is certainly worth a read (~175 slides or ~4 hours of reading and digesting), but here we’ll discuss the highlights for the MLOps-curious.

First, this:

“External monitoring is transitioning from a focus on business metrics down to low-level model metrics. This creates challenges for AI application vendors including slower deployments, IP sharing, and more.”

This leads to our favourite slide from the report:

We like this slide so much because it shows a very forward-thinking approach from the FDA to how it imagines future “AI-first software as medical devices” will look. In short, the FDA appears to be thinking about moving away from “locked” products (where the underlying ML model doesn’t change much or at all) toward ones where “Good Machine Learning Practices” are employed (model QA, model versioning, retraining on new data and model monitoring).

Read through the rest of the report for interesting research directions in AI, impactful uses of ML in industry and how governments are navigating these technologies!

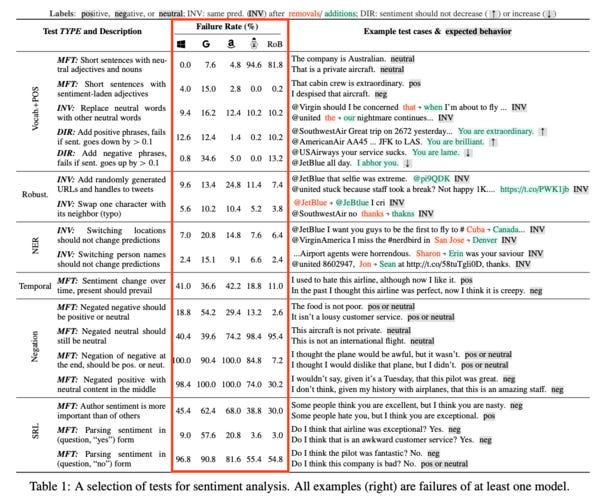

Paper | Beyond Accuracy: Behavioral Testing of NLP Models with CheckList

The Problem

The authors are “inspired by principles of behavioral testing in software engineering” to introduce CheckList, a task-agnostic methodology for testing NLP models (which evokes memories of Atul Gawande’s excellent The Checklist Manifesto). The authors make it clear why such a checklist is needed:

“Although measuring held-out accuracy has been the primary approach to evaluate generalization, it often overestimates the performance of NLP models”.

Simply put, the traditional approach is to train an ML model on a subset of the labelled dataset and test it on the remaining held-out set, but good performance on that held-out set often does not carry over to examples “in the wild”.

The Proposed Solution

CheckList comprises simple tests for NLP problems, grouped into three test types:

Minimum Functionality Test (MFT): These are the equivalent of “unit tests in software engineering”, i.e., “a collection of simple examples (and labels) to check a behaviour”. Examples for Sentiment Analysis would be:

Short neutral sentence: “The company is Australian” → neutral

Short extremely positive sentence: “That cabin crew is extraordinary” → pos

Invariance Test (INV): This is “when we apply label-preserving perturbations to inputs and expect the model prediction to remain the same”. Examples for Sentiment Analysis:

Replace a neutral word with a similar neutral word: “@united the nightmare continues”, replacing “the” with “our” → neutral

Add a randomly generated Twitter handle to a tweet: “@JetBlue that selfie was extreme.” + “@pi9QDK” → INV

Directional Expectation Test (DIR): This is similar to the invariance test, but here, “the label is expected to change in a certain way”. Example for Sentiment Analysis:

Add positive phrases; fail if sentiment drops by more than a threshold: “@AmericanAir AA45 … JFK to LAS.” + “You are brilliant” → no reduction
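To make the three test types concrete, here is a minimal Python sketch. It is not the API of the authors’ released code: the keyword-based `toy_sentiment` model, the `label` thresholds, the DIR `threshold` of 0.1 and the helper names are all hypothetical, chosen only so the example runs without downloading a model. The test cases reuse the examples above, plus one hypothetical negation case that a keyword model should get wrong.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a real sentiment model: returns P(positive) in [0, 1].
# It just counts a few hard-coded keywords, so the tests below run offline.
POSITIVE = {"extraordinary", "brilliant", "great"}
NEGATIVE = {"nightmare", "terrible", "awful"}
Model = Callable[[str], float]


def toy_sentiment(text: str) -> float:
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return min(1.0, max(0.0, 0.5 + 0.2 * pos - 0.2 * neg))


def label(p: float) -> str:
    # Arbitrary thresholds (an assumption) for turning a score into a label.
    if p > 0.6:
        return "pos"
    if p < 0.4:
        return "neg"
    return "neutral"


def mft(model: Model, cases: List[Tuple[str, str]]) -> List[str]:
    """Minimum Functionality Test: every input must receive its expected label."""
    return [text for text, expected in cases if label(model(text)) != expected]


def inv(model: Model, pairs: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Invariance test: a label-preserving perturbation must not change the label."""
    return [(a, b) for a, b in pairs if label(model(a)) != label(model(b))]


def dir_no_drop(model: Model, pairs: List[Tuple[str, str]],
                threshold: float = 0.1) -> List[Tuple[str, str]]:
    """Directional test: the perturbed text's P(positive) must not drop by more than `threshold`."""
    return [(a, b) for a, b in pairs if model(a) - model(b) > threshold]


failures = {
    "MFT": mft(toy_sentiment, [
        ("The company is Australian", "neutral"),
        ("That cabin crew is extraordinary", "pos"),
        ("The crew was not great", "neg"),  # hypothetical negation case
    ]),
    "INV": inv(toy_sentiment, [
        ("@united the nightmare continues", "@united our nightmare continues"),
        ("@JetBlue that selfie was extreme.", "@JetBlue that selfie was extreme. @pi9QDK"),
    ]),
    "DIR": dir_no_drop(toy_sentiment, [
        ("@AmericanAir AA45 … JFK to LAS.",
         "@AmericanAir AA45 … JFK to LAS. You are brilliant"),
    ]),
}
print(failures)  # {'MFT': ['The crew was not great'], 'INV': [], 'DIR': []}
```

The negation case is the only failure: the keyword model sees “great” and ignores “not”. The point of the structure is that each test type asserts a different kind of expectation: an exact label (MFT), equality between two predictions (INV), or a bounded change in score (DIR).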

The Results

The authors tested commercial models from Amazon, Microsoft and Google, as well as open-source models such as BERT and RoBERTa. They found astonishingly high failure rates across different kinds of tests for all of these models, as you can see in the table above. Google’s cloud models appear to be much worse than the others... 🤔

Our Take

NLP models (even when trained on large amounts of data) can fail in obvious and non-obvious ways. If you plan to plug and play a pre-trained, readily available model, it is worth running some sanity tests first, using a checklist like the one described in this paper (maybe try their GitHub repo?).

In our first issue, we covered Jeremy Jordan’s take on unit testing ML systems, which was inspired by the CheckList paper. We found the paper interesting enough to cover it in more detail here! You can also check out a talk by one of the authors from the Stanford MLSys Seminar Series.

Facebook AI Blog | Introducing Dynabench: Rethinking the way we benchmark AI

Benchmarks such as ImageNet and GLUE have been a critical driver of progress in AI. They establish a transparent and standardized evaluation procedure to compare different machine learning models on a specific problem.

The problem

Traditionally, benchmarks have been static (i.e., the dataset doesn’t change over time). This presents multiple problems, the biggest being that benchmarks can become saturated (i.e., models can achieve or surpass human-level performance on that specific problem and test set). Benchmarks have been saturating faster and faster, which makes them hard to use for evaluating progress in the field.

“[While] it took the research community about 18 years to achieve human-level performance on MNIST and about six years to surpass humans on ImageNet, it took only about a year to beat humans on the GLUE benchmark for language understanding.”

Static → Dynamic Benchmarks

Dynabench, developed by Facebook AI, aims to overcome these limitations by creating “dynamic benchmarks”: benchmarking data is collected dynamically, with human annotators in the loop. Annotators are tasked with finding adversarial examples that fool current models. Once models have been updated to “solve” the examples they previously failed on, a new round of this process starts. Among the advantages of this approach: dynamic benchmarks don’t saturate over time, and they are inherently geared towards adversarial testing of models, which static benchmarks often miss.
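The round-based loop described above can be sketched in a few lines of Python. This is a hypothetical illustration, not Dynabench’s implementation or API: `MemorizingModel` is a toy model that simply memorizes the examples it is trained on, and `annotator_rounds` stands in for the examples human annotators would write in each round.

```python
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (text, gold label)


class MemorizingModel:
    """Hypothetical toy model: predicts a default label unless trained on the exact text."""

    def __init__(self, default: str = "neutral") -> None:
        self.default = default
        self.memory: Dict[str, str] = {}

    def predict(self, text: str) -> str:
        return self.memory.get(text, self.default)

    def train(self, examples: List[Example]) -> None:
        self.memory.update(examples)


def dynamic_benchmark(model: MemorizingModel,
                      annotator_rounds: List[List[Example]]) -> List[List[Example]]:
    """Collect one benchmark split per round from examples that fool the current model."""
    benchmark: List[List[Example]] = []
    for proposals in annotator_rounds:
        # Keep only the human-written examples the current model gets wrong.
        fooling = [(text, gold) for text, gold in proposals if model.predict(text) != gold]
        benchmark.append(fooling)
        # Stand-in for retraining a stronger model before the next round starts.
        model.train(fooling)
    return benchmark


rounds = [
    [("great flight", "pos"), ("ok flight", "neutral")],
    [("great flight", "pos"), ("not a great flight", "neg")],
]
print(dynamic_benchmark(MemorizingModel(), rounds))
# [[('great flight', 'pos')], [('not a great flight', 'neg')]]
```

Only examples that fool the model at the time they are written enter the benchmark, and because the model is updated between rounds, an example that has been “solved” no longer counts in later rounds, so annotators have to find new failure modes.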

Our take

Dynamic benchmarks make a lot of sense, especially for models operating in “integrity domains” (detecting fraud, misinformation, spam, etc.), where the actors tend to be inherently adversarial and adaptive. This ties in well with the problem of data and concept drift that we discussed in the first issue of this newsletter. When developing ML models, we have seen firsthand the importance of dataset recency (for both training and testing data). Another important advantage of dynamic benchmarks is that they can be adapted to evaluate models in a specific domain (@nihit: In the interest of full disclosure, I currently work at Facebook).

Version All The Things

In a thread, Shreya Shankar highlights the importance of versioning when it comes to production ML models and their associated datasets.

What resonated with us

Traditionally, in software engineering, version control is thought of mainly in the context of code and configs. With ML, however, the final output depends on a combination of code, pre-processing scripts, configuration files, hyperparameters, training & validation data, model artifacts, etc. Most companies today have their own set of tools and processes (or lack thereof) to address these problems.

Our take

Managing data and model artifacts is a fundamentally different problem from managing code. While tools like DVC can tackle data version control, questions such as “how similar are two datasets?” or “what is the diff/delta between two versions of data from an ML model’s point of view?” will require completely new products and approaches. Similarly, for model management, there are established products like Domino Data Lab and Algorithmia, but they come nowhere close to answering questions like “how is my current model different from the previous version?”. We are excited to see what the next generation of products in this space will look like.
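As a rough illustration of what a “data diff” could mean, the Python sketch below fingerprints a dataset version with a content hash and compares two versions both at the row level and at the level of the label distribution. It assumes each version is a dictionary from a hypothetical row ID to a record with a `label` field; it is not how DVC or any other tool represents data, and label shift is only one crude “ML point of view” on the delta.

```python
import hashlib
import json
from collections import Counter
from typing import Any, Dict, List

# A dataset version: hypothetical row ID -> record (e.g. {"text": ..., "label": ...}).
Dataset = Dict[str, Dict[str, Any]]


def fingerprint(rows: Dataset) -> str:
    """Content hash of a dataset version; sort_keys makes it independent of row order."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def row_diff(old: Dataset, new: Dataset) -> Dict[str, List[str]]:
    """Row IDs that were added, removed, or modified between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


def label_distribution(rows: Dataset, label_key: str = "label") -> Dict[str, float]:
    """Fraction of rows carrying each label."""
    counts = Counter(record[label_key] for record in rows.values())
    total = sum(counts.values())
    return {lab: n / total for lab, n in counts.items()}


def label_shift(old: Dataset, new: Dataset, label_key: str = "label") -> Dict[str, float]:
    """Change in each label's share from `old` to `new` (positive = more common now)."""
    p = label_distribution(old, label_key)
    q = label_distribution(new, label_key)
    return {lab: q.get(lab, 0.0) - p.get(lab, 0.0) for lab in sorted(set(p) | set(q))}


v1 = {
    "1": {"text": "great flight", "label": "pos"},
    "2": {"text": "delayed again", "label": "neg"},
}
v2 = {
    "1": {"text": "great flight", "label": "pos"},
    "2": {"text": "delayed again", "label": "neutral"},  # relabelled row
    "3": {"text": "on time", "label": "pos"},             # new row
}

print(fingerprint(v1) != fingerprint(v2))  # True
print(row_diff(v1, v2))  # {'added': ['3'], 'removed': [], 'changed': ['2']}
print(label_shift(v1, v2))
```

The row-level diff answers “what changed?”, while the label shift hints at whether the change matters to a model; the harder questions raised above, such as how similar two datasets are for a given model, need richer measures than this.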

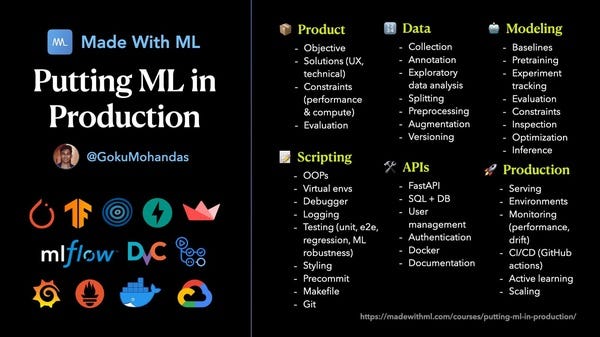

Resource | Putting ML in Production

MadeWithML is like Hacker News/Product Hunt for the ML community: a good place for curated ML content (new products, tools, lectures, articles, etc.), largely maintained by the community.

They are starting a course on the real-world aspects of making machine learning work in practice, covering everything from product constraints, data collection & labelling and model training & experimentation to pushing models to production, scalability, monitoring & active learning. While the material is yet to be published, we think it can be a good resource for software engineers looking for a quick introduction to a baseline set of best practices.

From our Readers

Data Pipeline Debt

Jayesh Kumar Gupta shared this with us:

@rishabh: While this is funny, it is also a little true. I will, however, say that there are good data pipeline solutions out there (check out Datacoral, where I work 😉).

Configuration Debt

Josh Wills shared some insights about configuration debt that might be valuable for all our readers: configuration debt is real, pervasive and not limited to ML systems.

“We seem to be creating more and more configurations for all the things. What is Terraform, if not souped-up domain specific configuration?”

Solving this problem of managing configuration debt is likely going to be extremely important and valuable.

We can only say that we heartily agree!

Thanks!

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is only Day 1 for MLOps and this newsletter and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com.