Issue #4: Data Landscape. ML Stack. Imbalanced Datasets. Search@Airbnb. Ball vs Bald?

First things first, Substack is now the new publishing home for the ML Ops Roundup newsletter! Let us know if you face any problems when reading this content (as opposed to earlier issues) — your feedback is super valuable to us.

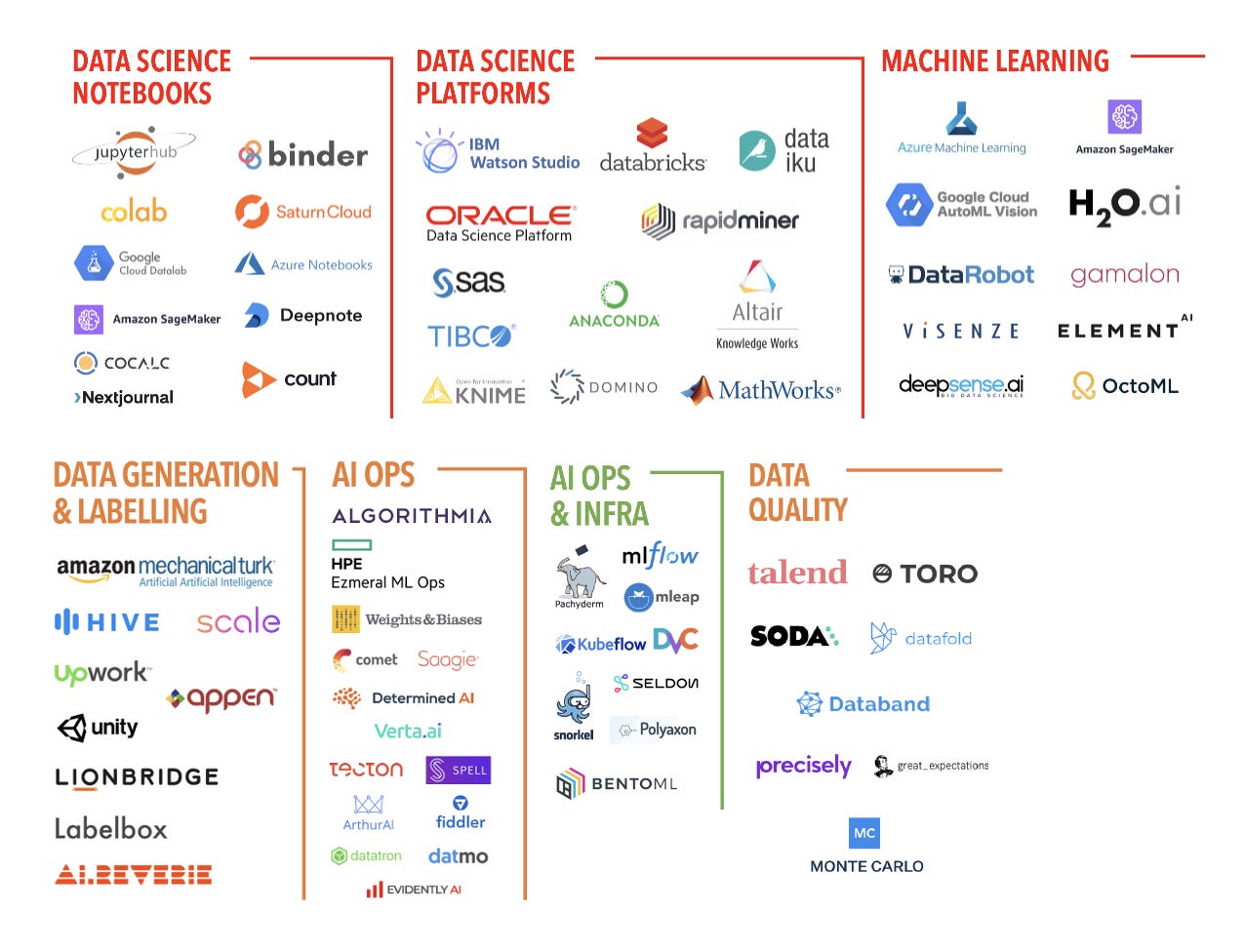

Now, for the content in this issue: we learnt about many new AI (and MLOps) companies while researching it (and you'll see why). There are developments toward a standardized ML stack, research on using active learning for skewed datasets, and other fun stuff.

We are always excited to hear from you, our readers. We are continuing our tradition of including links and experiences that you shared with us (scroll to the bottom to find them!).

If you find this newsletter interesting, forward this to your friends and support this project ❤️

Matt Turck | The 2020 Data & AI Landscape

Matt Turck has been putting together these “Landscape” charts since 2012, and all of them have been fun reads. The list of companies from 2012 is completely unrecognizable today -- check it out for yourself here. What’s interesting is that his analysis from 2012 is still true today:

1) Many companies don’t fall neatly into a specific category

2) There’s only so many companies we can fit on the chart — subcategories ... would almost deserve their own chart.

3) The ecosystem is evolving so quickly that we’re going to need to update the chart often – companies evolve..., large vendors make aggressive moves in the space...

Anyway, let's jump back into the Data and AI Landscape for 2020. There are lots of notable companies in this chart, but let's focus on the trends that matter for the MLOps-curious. As Matt Turck says:

There's plenty happening in the MLOps world, as teams grapple with the reality of deploying and maintaining predictive models – while the DSML (Data Science Machine Learning) platforms provide that capability, many specialized startups are emerging at the intersection of ML and devops.

There are only a few subcategories that are relevant for MLOps, although we believe there will be many more categories (which will be much more crowded) in the coming years.

Let’s dig into these briefly:

Data Science Notebooks / Data Science Platforms / Machine Learning: These are very crowded categories, and all of these companies (and technologies) help data scientists set up an environment for exploring data in notebooks and training ML models (including hyperparameter tuning). It is hard to see much differentiation among them today; differentiation might come from cost, ease of setup, and integration with wherever your data lives.

Data Generation & Labeling: Great to see this as a category -- labelled data is the lifeblood of ML systems!

AI Ops / AI Ops & Infra: This is a misnamed category: the industry generally groups these companies under MLOps rather than AIOps (see the difference here). It is great to see many companies we have covered before here, such as Algorithmia (model management) and DVC (data management).

Data Quality: We have covered Great Expectations before, and we are convinced that this category is only going to expand in the future.

Finally, if you’re not satiated with AI companies yet, find an even more complete list here.

Pachyderm | Rise of the Canonical Stack in Machine Learning

With every generation of computing comes a dominant new software or hardware stack that sweeps away the competition and catapults a fledgling technology into the mainstream.

I call it the Canonical Stack (CS).

In this post detailing the Canonical Stack for machine learning, the ML lifecycle is broken into 4 stages:

Data Gathering and Transformation

Experimenting, Training, Tuning and Testing

Productionization, Deployment, and Inference

Monitoring, Auditing, Management, and Retraining

A number of companies (with their product maturity) are covered across the four stages in the image below. Note that the author of the post works at Pachyderm, so you may want to take the qualitative assessment with a grain of salt. 😃 You might also notice a strong overlap with the MLOps companies from the Data and AI Landscape above.

Our Notes:

This post is a good first attempt at defining an ML stack. We are very early in this technological shift, and we should not expect things to settle down for the next 5-10 years.

A couple of new data labelling and generation products are now on our radar: Superb.ai (for automatic labelling of data based on rules) and Ydata.ai (for generating synthetic datasets).

While experimentation and training frameworks/products are a dime a dozen, the space is noticeably less crowded when it comes to productionizing models and setting up ML pipelines. Will a generic orchestration tool like Airflow become an integral part of ML pipelines? Will Kubeflow become the one-size-fits-all solution for deploying ML pipelines?

Finally, there are NO real solutions for ML monitoring yet. While logs from ML systems can be sent to tools like Splunk for analysis, this approach treats ML monitoring the same as standard software monitoring, and that is certainly not enough. As the author points out, black swan events such as Covid can completely wreck ML models, and standard software monitoring doesn't cut it.
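The post does not prescribe a method, but one common way to go beyond standard software monitoring is to compare the distribution of each model input on live traffic against the training data. Below is a minimal sketch of the Population Stability Index (PSI) for a single numeric feature, assuming equal-width bins over the training range; the function name, binning scheme and toy data are our own illustration, not a tool from the post.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    num_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample (e.g. training data) and a live sample.

    Bins are equal-width over the reference range; live values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / num_bins or 1.0  # guard against a constant feature

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * num_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), num_bins - 1)  # clip out-of-range values
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e_frac = bin_fractions(expected)
    a_frac = bin_fractions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_frac, a_frac))


# Toy usage: live values shifted upward relative to training values.
train_values = [float(x) for x in range(100)]
live_values = [float(x) + 50 for x in range(100)]
print(population_stability_index(train_values, train_values))  # identical samples
print(population_stability_index(train_values, live_values))   # shifted samples
```

Identical samples give a PSI of zero, while the shifted sample produces a large value. Rules of thumb such as treating PSI above roughly 0.25 as a significant shift are often quoted, but in practice thresholds need tuning per feature, and a check like this says nothing about concept drift (a change in the relationship between inputs and labels).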

Paper | On the Importance of Adaptive Data Collection for Extremely Imbalanced Pairwise Tasks

This is a recent paper by Stanford researchers Stephen Mussmann, Robin Jia and Percy Liang on the importance of using active learning when collecting a training dataset, especially in cases where the labels for the task are extremely imbalanced.

Problem

Pairwise classification tasks such as paraphrase detection, near-duplicate detection and question answering have extreme label imbalance (most pairs of texts are negatives, i.e. not matches). In such cases, i.i.d. sampling of training data produces a training set with extreme class imbalance. On the other hand, naively constructing balanced datasets can create a large mismatch between the train and test distributions, leading to poor model performance on realistically imbalanced test sets.

Solution proposed in the paper

The paper proposes an adaptive (distinctly non-i.i.d.) data collection approach that combines three building blocks:

Active Learning: At training time, the system has query access to a large unlabeled dataset, i.e., the ability to collect labels for a limited subset of the unlabeled data.

Uncertainty Sampling: Uncertainty sampling enables a more balanced collection of training data. When querying labels in the step above, it prioritizes data points on which a model trained on the previously labelled data has high uncertainty.

Nearest Neighbor Search: This is an optimization step that lowers the computational cost of the uncertainty sampling above. A dense n-dimensional representation of each document (the output of a fine-tuned BERT embedding model) is indexed for nearest neighbor search with FAISS, a popular open-source library for efficient similarity search and clustering, so that uncertain points can be retrieved efficiently from a very large pool of unlabeled pairs.
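To make the three building blocks concrete, here is a minimal pure-Python sketch of one round of uncertainty sampling restricted to nearest-neighbor candidates. It is our simplified illustration, not the authors' code: brute-force cosine search stands in for a FAISS index, toy 2-D vectors stand in for fine-tuned BERT embeddings, and `toy_model`, `select_uncertain_pairs` and the other names are hypothetical.

```python
import math
from typing import Callable

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_neighbors(query: Vector, pool: dict[str, Vector], k: int) -> list[str]:
    """Brute-force top-k by cosine similarity (a stand-in for a FAISS index)."""
    ranked = sorted(pool, key=lambda doc_id: cosine(query, pool[doc_id]), reverse=True)
    return ranked[:k]


def select_uncertain_pairs(
    queries: dict[str, Vector],
    pool: dict[str, Vector],
    predict_match: Callable[[Vector, Vector], float],
    k: int,
    budget: int,
    already_labelled: set[tuple[str, str]],
) -> list[tuple[str, str]]:
    """Pick the `budget` candidate pairs whose P(match) is closest to 0.5."""
    candidates = []
    for q_id, q_vec in queries.items():
        # Score only nearby pairs instead of all |queries| x |pool| pairs.
        for d_id in nearest_neighbors(q_vec, pool, k):
            if (q_id, d_id) in already_labelled:
                continue
            p = predict_match(q_vec, pool[d_id])
            candidates.append((abs(p - 0.5), (q_id, d_id)))
    candidates.sort()  # most uncertain (closest to 0.5) first
    return [pair for _, pair in candidates[:budget]]


def toy_model(u: Vector, v: Vector) -> float:
    # Hypothetical classifier trained on earlier labels: logistic in cosine similarity.
    return 1.0 / (1.0 + math.exp(-10.0 * (cosine(u, v) - 0.8)))


queries = {"q1": [1.0, 0.0], "q2": [0.0, 1.0]}
pool = {"d1": [1.0, 0.05], "d2": [0.8, 0.6], "d3": [0.0, 1.0], "d4": [-1.0, 0.0]}
to_label = select_uncertain_pairs(queries, pool, toy_model, k=2, budget=2, already_labelled=set())
print(to_label)
```

In a full loop, the selected pairs would be sent to annotators, added to the labelled set, and the model retrained before the next round; the nearest-neighbor restriction is what keeps each round from scoring every possible pair.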

Using this approach, the authors improve average precision to 32.5% on QQP (Quora Question Pairs, a paraphrase detection dataset) and 20.1% on WikiQA (a question answering dataset), whereas state-of-the-art models trained on heuristically balanced data achieve only 2.4% average precision when evaluated on realistically imbalanced test data.
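Average precision suits this setting because it rewards ranking the rare positives above the many negatives. A minimal sketch of the standard non-interpolated definition follows; it is our illustration, and the paper's evaluation code may differ in details such as tie handling.

```python
def average_precision(scores: list[float], labels: list[int]) -> float:
    """Non-interpolated AP: mean of precision@k over the ranks k that hold a positive."""
    # Rank examples by model score, highest first.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for k, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / k  # precision at this rank
    return precision_sum / hits if hits else 0.0


scores = [0.9, 0.8, 0.7, 0.6, 0.5]
print(average_precision(scores, [0, 0, 1, 0, 0]))  # one positive at rank 3
print(average_precision(scores, [1, 0, 1, 0, 0]))  # positives at ranks 1 and 3
```

For intuition, a single positive ranked 40th contributes a precision of only 1/40 = 2.5%, so with extreme imbalance even a handful of confidently scored negatives above each positive drags AP down sharply.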

Our Take

This ties in well with the importance of dynamic test benchmarks, which we discussed in our previous issue. We believe uncertainty sampling-based active learning combined with an online-learning setting can be an effective tool to counter problems like data drift and concept drift, which degrade model performance on live traffic.

Airbnb | Machine Learning-Powered Search Ranking of Airbnb Experiences

We love reading about real-world ML systems and sharing them with our readers. This is a really good post about how machine learning powers Airbnb search, arguably their most important feature. While the article touches on many aspects specific to search and personalization, we highlight our takeaways on monitoring and explainability.

Our Takeaways

Machine Learning-based Search Ranking can have many flavours depending on the choice of the models, infrastructure and level of personalization. It often helps to start simple and choose a level of complexity that’s right for the amount of training data available, and the number of entities to be ranked.

It is valuable to be able to monitor drastic changes in search result ranking, and to explain them when they happen. As the article notes, in Airbnb's case this helps give hosts concrete feedback when their listing improves or declines in ranking.

Such reporting and visibility can also lead to concrete product changes. The article describes tracking the rankings of specific groups of Experiences in a market over time, along with the values of the features used by the ML model. Airbnb noticed that "very low price Experiences have too big of an advantage in ranking". To address this, the ML engineers removed price as a feature from the ML model and retrained it.
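The article does not share code, but a minimal sketch of this kind of monitoring might compare two daily rankings to flag drastic moves, and track the average position of a segment (for example, a low-price bucket) over time. The function names, the 1-based positions, the threshold and the toy data are all hypothetical.

```python
from collections import defaultdict


def positions(ranking: list[str]) -> dict[str, int]:
    """Map each item to its 1-based position in a ranking."""
    return {item: pos for pos, item in enumerate(ranking, start=1)}


def flag_drastic_moves(previous: list[str], current: list[str], threshold: int) -> dict[str, int]:
    """Items whose position changed by at least `threshold` (positive = moved up)."""
    prev_pos, curr_pos = positions(previous), positions(current)
    moves = {item: prev_pos[item] - curr_pos[item] for item in curr_pos if item in prev_pos}
    return {item: delta for item, delta in moves.items() if abs(delta) >= threshold}


def mean_position_by_group(ranking: list[str], group_of: dict[str, str]) -> dict[str, float]:
    """Average 1-based position of each group (e.g. a price bucket) in one ranking."""
    by_group: dict[str, list[int]] = defaultdict(list)
    for item, pos in positions(ranking).items():
        by_group[group_of[item]].append(pos)
    return {group: sum(ps) / len(ps) for group, ps in by_group.items()}


yesterday = ["a", "b", "c", "d", "e"]
today = ["e", "a", "b", "c", "d"]
group_of = {"a": "low_price", "b": "other", "c": "other", "d": "other", "e": "low_price"}
print(flag_drastic_moves(yesterday, today, threshold=3))
print(mean_position_by_group(yesterday, group_of), mean_position_by_group(today, group_of))
```

In this toy data the low-price group's average position moves from 3.0 to 1.5; logging the model's feature values alongside such a segment-level series is what would let an engineer trace a shift like this back to a specific feature.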

Fun | Fears about Artificial General Intelligence might be overstated: Exhibit no. 9341

You've read about it. AI is coming for our jobs, and possibly our lives too! The real world, though, is a tad harder to model than it might first appear.

Recently, Caley Thistle, a football club in Scotland, announced that it was replacing human camera operators with AI-powered cameras that track the motion of the ball. Fans saw the cameras in full swing during a game on Saturday … as the camera kept mistaking the linesman's bald head for the ball.

A mostly innocuous and somewhat hilarious glitch, but one that highlights the many issues we have talked about in past issues: the perils of real-world data being nothing like training data, model overfitting, and the need for robust dynamic benchmarks for model testing.

From our Readers

FDA + ML

Ali Chaudhry shares a post by one of his coworkers, Sam Surette, which goes into detail about how the FDA is approaching machine learning software in healthcare. He talks about "adaptive AI", which has the following characteristic:

When an algorithm encounters a real-world clinical setting, adaptive AI might allow it to learn from these new data and incorporate clinician feedback to optimize its performance

It is interesting to hear that changes to the model would be deployed "only after successfully passing a series of robust automated tests", which would put adaptive AI on a "leash".

Definitely check this out if you’re interested in AI for healthcare.
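The post does not spell out what those automated tests would be. As a purely hypothetical illustration of a "leash", the sketch below approves a retrained model only if it does not regress overall against the current model and keeps every subgroup (say, each clinical site) above a floor. `EvalReport`, the metric, the subgroup names and the thresholds are all our assumptions, not anything the FDA has specified.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class EvalReport:
    """Metrics for one model on a fixed, held-out evaluation set (hypothetical)."""
    overall_score: float
    subgroup_scores: dict[str, float] = field(default_factory=dict)


# A check compares (current, candidate) and returns an error message, or None if it passes.
Check = Callable[[EvalReport, EvalReport], Optional[str]]


def no_overall_regression(tolerance: float) -> Check:
    def check(current: EvalReport, candidate: EvalReport) -> Optional[str]:
        if candidate.overall_score < current.overall_score - tolerance:
            return f"overall score dropped from {current.overall_score:.3f} to {candidate.overall_score:.3f}"
        return None
    return check


def subgroup_floor(minimum: float) -> Check:
    def check(current: EvalReport, candidate: EvalReport) -> Optional[str]:
        failing = sorted(g for g, s in candidate.subgroup_scores.items() if s < minimum)
        return f"subgroups below {minimum}: {failing}" if failing else None
    return check


def gate(current: EvalReport, candidate: EvalReport, checks: list[Check]) -> tuple[bool, list[str]]:
    """Deploy the candidate only if every check passes."""
    failures = [msg for check in checks if (msg := check(current, candidate)) is not None]
    return (not failures, failures)


current = EvalReport(0.90, {"site_a": 0.88, "site_b": 0.86})
candidate = EvalReport(0.91, {"site_a": 0.92, "site_b": 0.79})
checks = [no_overall_regression(tolerance=0.01), subgroup_floor(minimum=0.85)]
print(gate(current, candidate, checks))
```

Here the candidate improves on average but is rejected because one subgroup falls below the floor, which is the kind of failure an aggregate metric alone would hide.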

Are your ML models perfect?

Another tweet shared with us by Ali Chaudhry. We won’t even bother saying anything here. 🤐

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is only Day 1 for MLOps and for this newsletter, and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com.