Issue #11: Big Ideas 2021. ML Test Score by Google. Security for ML Systems. BudgetML.

Welcome to the 11th issue of the ML Ops newsletter.

In this issue, we cover the “Big Ideas 2021” report from ARK (with a focus on deep learning), share a scoring rubric for measuring the maturity of production ML systems, discuss core concepts of an ML monitoring solution and share a thought-provoking article on security considerations in ML systems.

Thank you for subscribing, and if you find this newsletter interesting, forward this to your friends and support this project ❤️

Big Ideas 2021: Deep Learning

ARK Invest is an asset management firm founded in 2014, with an investment strategy focused on disruptive innovation and its applications. Each year, ARK publishes a “Big Ideas” report sharing its investment theses (in a similar fashion to Mary Meeker’s famous Internet Trends reports). Big Ideas 2021 covers a wide range of technologies, from deep learning to gene editing. It is fascinating, and we recommend reading it in its entirety. Here, we share our views on the deep learning section specifically.

Highlights

Deep Learning is enabling a new generation of computing platforms, such as conversational AI (smart speakers like Alexa answered ~100 billion queries in 2020) and self-driving cars (Waymo’s self-driving cars have driven ~20 million miles in select cities so far).

Controlled for model size and training data, the cost of training deep learning models has been declining at an astonishing 37% per year. On average, however, model size has been increasing much faster, resulting in a net increase in the cost of training and deploying state-of-the-art deep learning models.
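To put the 37% figure in perspective, here is the compounding arithmetic. This is our own back-of-the-envelope illustration, assuming the rate holds steadily every year, with C_0 the cost of a fixed-size training run today and C_n its cost after n years:

```latex
C_n = C_0\,(1 - 0.37)^n = C_0 \cdot 0.63^n,
\qquad 0.63^5 \approx 0.099
```

So after five years, the same training run would cost roughly a tenth as much. If, as a further simplifying assumption, cost grew in proportion to model size and model size grew by a factor g per year, net cost would scale as (0.63 g)^n, which increases whenever g > 1/0.63 ≈ 1.59.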

Deep Learning is moving from vision to harder domains like language (GPT-3), gameplay and planning.

ARK believes that deep learning will add $30 trillion to equity market capitalizations during the next 15-20 years.

Our take

We share ARK’s optimism about the promise of the deep learning revolution and the profound positive impact it can (and most likely will) have. As ARK notes, though, deep learning is a fundamental shift in how software gets created: rather than being written by humans, software is learned from data. Writing this “software 2.0” at scale requires a whole ecosystem of tools and platforms, everything from IDEs to CI/CD and monitoring tools.

Perhaps unsurprisingly for readers of this newsletter, one set of problems we believe is still unsolved is MLOps: the processes, technology and tools needed to sustain the ideal workflow for developing and deploying software 2.0. For another perspective on these challenges, check out Google Cloud’s exhaustive primer on MLOps, why it matters and the stages of maturity that companies go through as they deploy these solutions.

Paper | What’s your ML Test Score? A rubric for ML production systems

This is a Google paper from 2016, and even though 5 years have passed, it remains a valuable way to look at the maturity of ML systems. We will let the authors explain their motivation:

Using machine learning in real-world production systems is complicated by a host of issues not found in small toy examples or even large offline research experiments. Testing and monitoring are key considerations for assessing the production-readiness of an ML system. But how much testing and monitoring is enough? We present an ML Test Score rubric based on a set of actionable tests to help quantify these issues.

The tests are grouped into four sections. Based on the minimum score across the four sections, the authors award a grade ranging from “0 points: More of a research project than a productionized system” (yikes!) to “12+ points: Exceptional levels of automated testing and monitoring” (bravo!).

The authors assume that general software engineering best practices are already in place (unit tests, a well-defined experiment and release process), so all the tests are ML-specific. Below are the four sections, with a subset of the tests in each; read the full paper for all the details.

Tests for Features and Data

Since ML systems rely on data as well as code, we need data tests aside from regular software unit tests.

Test that the distributions of each feature match your expectations.

Test the relationship between each feature and the target, and the pairwise correlations between individual signals

Test the cost of each feature (what it adds to inference latency or RAM usage)

Test that your system maintains privacy controls across its entire data pipeline

Test the calendar time needed to develop and add a new feature to the production model
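As an illustration of the first test in this list, here is a minimal sketch of checking feature values against expectations. The feature names, bounds and the `check_feature_expectations` helper are all hypothetical; the paper does not prescribe an implementation, and in practice the expectations would come from domain knowledge or from profiling trusted training data.

```python
import statistics

# Hypothetical expectations per feature: hard bounds plus a plausible range for the mean.
EXPECTATIONS = {
    "age": {"min": 0, "max": 120, "mean_range": (20, 60)},
    "num_purchases": {"min": 0, "max": 10_000, "mean_range": (0, 50)},
}


def check_feature_expectations(rows, expectations):
    """Return human-readable violations; an empty list means every check passed."""
    violations = []
    for feature, spec in expectations.items():
        values = [row[feature] for row in rows if row.get(feature) is not None]
        if not values:
            violations.append(f"{feature}: no non-null values")
            continue
        lo, hi = min(values), max(values)
        if lo < spec["min"] or hi > spec["max"]:
            violations.append(f"{feature}: values outside [{spec['min']}, {spec['max']}] (saw {lo}..{hi})")
        mean = statistics.mean(values)
        mean_lo, mean_hi = spec["mean_range"]
        if not mean_lo <= mean <= mean_hi:
            violations.append(f"{feature}: mean {mean:.2f} outside expected range {spec['mean_range']}")
    return violations


if __name__ == "__main__":
    rows = [{"age": 34, "num_purchases": 3}, {"age": -5, "num_purchases": 12}]
    for problem in check_feature_expectations(rows, EXPECTATIONS):
        print(problem)
```

Run as part of CI on each new training snapshot, a non-empty result would block the pipeline instead of silently producing a model trained on bad data.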

Tests for Model Development

Best practices are still being developed, and while it is tempting to use a single metric for judging the performance of a new model, there are other tests to keep in mind:

Test that every model specification undergoes a code review and is checked in to a repository

Test the relationship between offline proxy metrics and the actual impact metrics

Test the impact of each tunable hyperparameter

Test the effect of model staleness (does it matter if the model was trained yesterday versus last month)

Test against a simpler model as a baseline

Test model quality on important data slices
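A minimal sketch of the last two tests: compare a model against a deliberately simple baseline, both overall and per data slice. The majority-class baseline, the accuracy metric and all function names are our own illustrative choices.

```python
from collections import Counter


def accuracy(preds, labels):
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def majority_baseline(train_labels):
    """A deliberately simple baseline: always predict the most common training label."""
    majority = Counter(train_labels).most_common(1)[0][0]
    return lambda example: majority


def compare_on_slices(model, baseline, examples, labels, slice_fn):
    """Return {slice: (model_accuracy, baseline_accuracy)}, plus an '<overall>' entry.

    slice_fn maps an example to its slice key (e.g. country or device type).
    """
    groups = {"<overall>": list(range(len(examples)))}
    for i, ex in enumerate(examples):
        groups.setdefault(slice_fn(ex), []).append(i)
    report = {}
    for key, idx in groups.items():
        ys = [labels[i] for i in idx]
        report[key] = (
            accuracy([model(examples[i]) for i in idx], ys),
            accuracy([baseline(examples[i]) for i in idx], ys),
        )
    return report


if __name__ == "__main__":
    examples = [{"country": "US", "x": 1}, {"country": "US", "x": 0},
                {"country": "FR", "x": 1}, {"country": "FR", "x": 0}]
    labels = [1, 0, 1, 1]
    model = lambda ex: ex["x"]  # stand-in for a trained model
    baseline = majority_baseline(labels)  # in practice, fit on training labels only
    print(compare_on_slices(model, baseline, examples, labels, lambda ex: ex["country"]))
```

On this toy data the model ties the baseline overall (0.75 vs 0.75) but loses to it on the "FR" slice (0.5 vs 1.0), which is exactly the kind of gap a single aggregate metric hides.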

Tests for ML Infrastructure

ML systems are complex and tests for the complete infrastructure are important.

Test the reproducibility of training (do two models trained on the same data differ?)

Unit test model specification code

Integration test the full ML pipeline

Test model quality before attempting to serve it

Test models via a canary process before they enter production serving environments.

Test how quickly and safely a model can be rolled back to a previous serving version
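A minimal sketch of the reproducibility test from the first item in this list: train twice with the same seed and compare the learned parameters. The tiny SGD logistic regression below is our own stand-in for a real training pipeline; the key idea is that every source of randomness (initialization and data order) flows from one explicit seed.

```python
import math
import random


def train_logreg(data, seed, epochs=50, lr=0.1):
    """Tiny SGD logistic regression; all randomness comes from `seed`."""
    rng = random.Random(seed)  # local RNG, so no hidden global state
    n_features = len(data[0][0])
    w = [rng.gauss(0.0, 0.01) for _ in range(n_features)]
    b = 0.0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # data order is seeded too
        for i in order:
            x, y = data[i]
            z = sum(wj * xj for wj, xj in zip(w, x)) + b
            p = 1 / (1 + math.exp(-z))
            grad = p - y  # d(cross-entropy)/dz
            w = [wj - lr * grad * xj for wj, xj in zip(w, x)]
            b -= lr * grad
    return w + [b]


def max_param_diff(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    data = [([0.0, 1.0], 1), ([1.0, 0.0], 0), ([1.0, 1.0], 1), ([0.0, 0.0], 0)]
    run_a = train_logreg(data, seed=7)
    run_b = train_logreg(data, seed=7)
    assert max_param_diff(run_a, run_b) == 0.0, "training is not reproducible"
```

In real frameworks, nondeterministic GPU kernels and parallel data loading can make bit-identical runs impractical, so comparing parameters or evaluation metrics within a tolerance is the more realistic check.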

Monitoring Tests for ML

Test that data invariants hold in training and serving inputs

Test for model staleness

Test for NaNs or infinities appearing in your model during training or serving

Test for dramatic or slow-leak regressions in training speed, serving latency, throughput, or RAM usage

Test for regressions in prediction quality on served data
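Several of these monitoring tests can be sketched as a per-batch check at serving time. The sketch below flags NaNs and infinities, violations of per-feature invariants, and a model older than a maximum age. The invariant predicates, the seven-day threshold and the function name are hypothetical examples, not values from the paper.

```python
import math
import time


def check_serving_batch(batch, invariants, model_trained_at, max_age_s, now=None):
    """Return alerts for one batch of serving inputs.

    batch: list of dicts of numeric features.
    invariants: feature name -> predicate the value must satisfy (hypothetical config).
    model_trained_at / now: Unix timestamps in seconds.
    """
    now = time.time() if now is None else now
    alerts = []
    for i, row in enumerate(batch):
        for name, value in row.items():
            if isinstance(value, float) and not math.isfinite(value):
                alerts.append(f"row {i}: {name} is {value}")  # NaN or +/-inf
            elif name in invariants and not invariants[name](value):
                alerts.append(f"row {i}: {name}={value} violates invariant")
    age = now - model_trained_at
    if age > max_age_s:
        alerts.append(f"model is stale: trained {age / 86400:.1f} days ago")
    return alerts


if __name__ == "__main__":
    invariants = {"age": lambda v: 0 <= v <= 120, "price": lambda v: v >= 0}
    batch = [{"age": 30.0, "price": 9.99}, {"age": float("nan"), "price": -1.0}]
    trained_at = time.time() - 10 * 86400  # pretend the model is ten days old
    for alert in check_serving_batch(batch, invariants, trained_at, max_age_s=7 * 86400):
        print(alert)
```

The same invariants can be run on training inputs, so that training and serving data are held to one shared definition of "valid".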

Our take

These are great guidelines to keep in mind. Few production ML systems today are likely to pass all these tests, but we hope this is the direction they move in. One key topic missing from this paper is the management of human annotations. Many use cases require human-in-the-loop processes, and these have very different characteristics from systems that collect implicit labels.

Jeremy Jordan: A simple solution for monitoring ML Systems

We covered Jeremy Jordan’s thoughts on testing ML systems in an earlier issue. This time, we want to share a recent article of his on the core components of any ML monitoring solution. The article is a detailed step-by-step tutorial for setting up model monitoring and visualization with Prometheus and Grafana, and we recommend reading it in its entirety.

Highlights

When setting up an ML monitoring solution, perhaps the first question to consider is “what do I want to monitor?”. The article introduces a helpful framework for thinking this through:

Model metrics - prediction distributions, input features, model performance in cases where ground truth is available.

System metrics - error rate, latency, exceptions etc.

Resource metrics - CPU, GPU, RAM, Network transfer.

Conceptually, it is helpful to break down the solution into two parts:

Collecting & Storing Metrics -- typically done in near real time (but preferably asynchronously, so that it doesn’t block serving). The article uses Prometheus, an open-source monitoring service and time series database that collects metrics from service endpoints and makes them available for querying later.

Visualization -- Consuming the logged metrics, typically via dashboards. The article above uses Grafana, another popular open-source tool for creating dashboards on top of Prometheus.
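To make the collect-then-visualize split concrete, here is a minimal, dependency-free sketch of what a model server might record and what Prometheus would scrape. It is a hand-rolled stand-in, not the official Prometheus client library (which you would use in practice), and the metric names and histogram buckets are our own assumptions. Recording is a cheap in-memory update; Prometheus pulls the rendered text from an HTTP endpoint on its own schedule, which keeps storage and querying out of the request path.

```python
import bisect
import time


class ModelMetrics:
    """Hand-rolled illustration of model and system metrics for one model server."""

    BUCKETS = (0.25, 0.5, 0.75, 1.0)  # assumed buckets for prediction scores in [0, 1]

    def __init__(self):
        self.requests = 0
        self.errors = 0
        self.latency_sum = 0.0
        self.bucket_counts = [0] * len(self.BUCKETS)
        self.score_count = 0
        self.score_sum = 0.0

    def observe(self, predict, features):
        """Call the model, recording system metrics (errors, latency) and model metrics (scores)."""
        self.requests += 1
        start = time.perf_counter()
        try:
            score = predict(features)
        except Exception:
            self.errors += 1
            raise
        finally:
            self.latency_sum += time.perf_counter() - start
        # Prometheus histogram buckets are cumulative: le="x" counts scores <= x.
        for i in range(bisect.bisect_left(self.BUCKETS, score), len(self.BUCKETS)):
            self.bucket_counts[i] += 1
        self.score_count += 1
        self.score_sum += score
        return score

    def render(self):
        """Render the metrics in the Prometheus text exposition format."""
        lines = [
            "# TYPE model_requests_total counter",
            f"model_requests_total {self.requests}",
            "# TYPE model_errors_total counter",
            f"model_errors_total {self.errors}",
            "# TYPE model_latency_seconds summary",
            f"model_latency_seconds_sum {self.latency_sum}",
            f"model_latency_seconds_count {self.requests}",
            "# TYPE model_prediction_score histogram",
        ]
        for le, count in zip(self.BUCKETS, self.bucket_counts):
            lines.append(f'model_prediction_score_bucket{{le="{le}"}} {count}')
        lines.append(f'model_prediction_score_bucket{{le="+Inf"}} {self.score_count}')
        lines.append(f"model_prediction_score_sum {self.score_sum}")
        lines.append(f"model_prediction_score_count {self.score_count}")
        return "\n".join(lines) + "\n"
```

Grafana would then chart queries over these series, for example the error rate or how the prediction-score histogram shifts over time.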

The importance of logging raw data:

For full visibility into your production data stream, you can log the full feature payload. This is useful not only for monitoring, but also for collecting data for labeling and future model training.
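A minimal sketch of logging the full payload as one JSON line per prediction. The record schema, including the `request_id` used to join labels that arrive later, is a hypothetical example rather than the article's format. Since raw payloads can contain personal data, these logs need the same privacy controls as the rest of the data pipeline.

```python
import io
import json
import time
import uuid


def log_prediction(fh, features, prediction, model_version):
    """Append one prediction record to a file-like object as a JSON line."""
    request_id = str(uuid.uuid4())  # join key for labels collected later
    record = {
        "request_id": request_id,
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,  # the full raw payload, not just summary statistics
        "prediction": prediction,
    }
    fh.write(json.dumps(record) + "\n")
    return request_id


if __name__ == "__main__":
    buf = io.StringIO()  # stands in for a real log file
    log_prediction(buf, {"age": 30, "country": "FR"}, 0.82, model_version="v1")
    print(buf.getvalue())
```

JSON lines keep each record independent, so the log can be appended to cheaply and later replayed into a labeling queue or a training set.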

Resources for production-grade monitoring solutions: The article shares multiple resources to learn more about monitoring ML systems. We share a few that we especially liked:

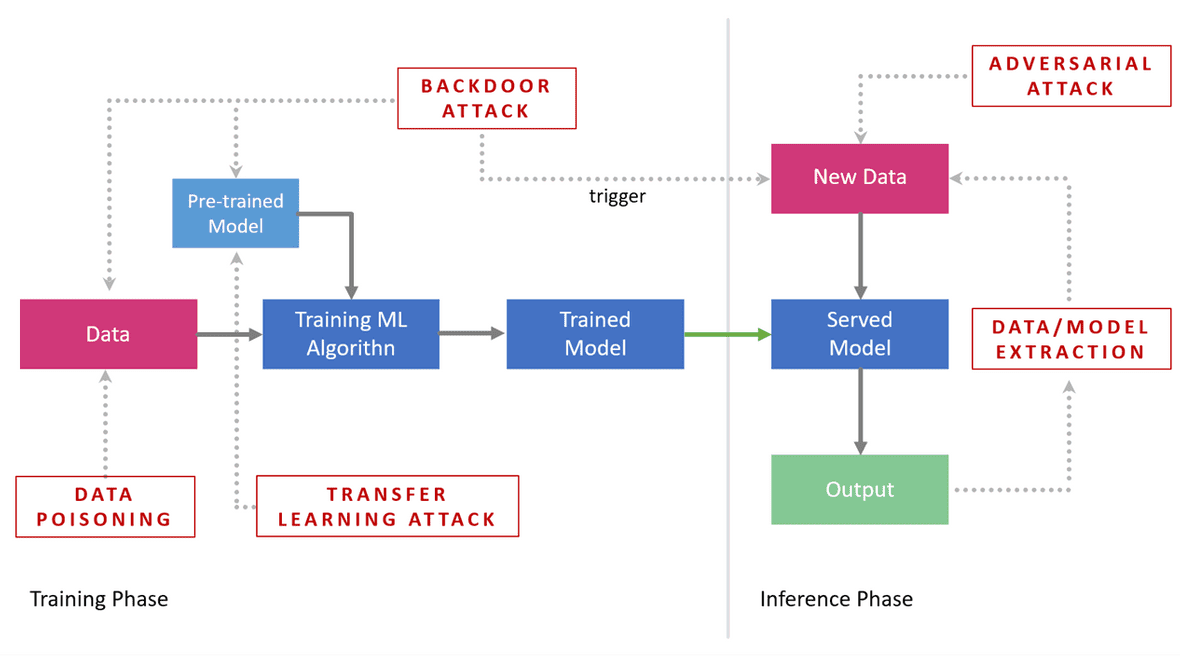

Machine Learning Systems: Security

Security is a topic that isn’t discussed as much as it should be. This is a great article by Sahbi Chaieb that provides an overview of the different vulnerabilities and some active research areas that might supply some solutions.

Why does it matter?

As the author writes:

Machine Learning models are more and more integrated in sensitive decision-making systems such as autonomous vehicles, health diagnosis, credit scoring, recruitment, etc. Some models can be trained on personal data like private emails, photos and messages from social media.

Vulnerabilities in these systems can lead to sensitive data or model parameters being extracted, or to models being corrupted or tricked.

What are the threats?

Data Extraction: Deep learning models can often memorize details from the training data (we covered this in an earlier issue where a paper showed GPT-2 memorizing phone numbers).

Model Extraction: By querying models through prediction APIs (such as the ones provided by public clouds), an equivalent ML model can be extracted. These attacks are successful against many types of models: logistic regressions, decision trees and neural nets, amongst others.
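For the simplest case, the idea behind such attacks fits in a few lines. The sketch below assumes (hypothetically) an API that exposes a logistic regression's probability output. Inverting the sigmoid turns each response into a linear equation in the unknown parameters, so d + 1 well-chosen queries recover all d weights and the bias. Attacks on other model types are considerably more involved.

```python
import math


def make_victim(weights, bias):
    """Stand-in for a prediction API that returns P(class 1) (hypothetical)."""
    def predict_proba(x):
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1 / (1 + math.exp(-z))
    return predict_proba


def logit(p):
    """Inverse of the sigmoid."""
    return math.log(p / (1 - p))


def extract_logreg(predict_proba, n_features):
    """Recover (weights, bias) with n_features + 1 queries."""
    bias = logit(predict_proba([0.0] * n_features))  # at x = 0, logit = bias
    weights = []
    for j in range(n_features):
        e_j = [1.0 if k == j else 0.0 for k in range(n_features)]
        weights.append(logit(predict_proba(e_j)) - bias)  # logit = w_j + bias
    return weights, bias


if __name__ == "__main__":
    victim = make_victim(weights=[0.5, -1.2, 2.0], bias=0.3)  # hidden behind the "API"
    stolen_w, stolen_b = extract_logreg(victim, n_features=3)
    print(stolen_w, stolen_b)
```

The recovered values match the hidden parameters up to floating-point error, which is why returning only a class label (or rounded scores) is sometimes suggested as a partial mitigation.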

Model Tricking: Adversarial attacks are well-documented at this point. Many computer vision models have been tricked by inputs that were changed imperceptibly yet receive a completely different label from the original image. See image below for an example.
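The Fast Gradient Sign Method (FGSM) is one well-known way to craft such inputs; the article does not single it out, so treat this as our own illustration. Applied here to a binary logistic regression rather than an image model, it nudges every feature by at most eps in the direction that increases the loss. The weights, input and eps are made-up values chosen so that the label flips.

```python
import math


def predict(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 / (1 + math.exp(-z))


def _sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for binary logistic regression.

    With cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i, so stepping each
    feature by eps * sign(gradient) gives the largest first-order increase in
    loss within an L-infinity budget of eps.
    """
    p = predict(w, b, x)
    return [xi + eps * _sign((p - y) * wi) for xi, wi in zip(x, w)]


if __name__ == "__main__":
    w, b = [2.0, -3.0, 1.0], 0.0
    x, y = [0.2, -0.1, 0.1], 1
    x_adv = fgsm(w, b, x, y, eps=0.25)
    print(predict(w, b, x), predict(w, b, x_adv))  # roughly 0.69, then 0.33
```

No feature moves by more than 0.25, yet the predicted class flips from 1 to 0; on images, the same per-pixel budget can be small enough to be invisible.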

Model corruption: Training data can be “poisoned” by adding corrupted data (something we also covered in an earlier issue), so that the final model makes incorrect predictions on certain inputs. If a pre-trained model is corrupted with poisoned data, then any model derived from it via transfer learning will be corrupted as well.

Federated learning poses its own set of challenges: how do you secure training when it is happening on many machines? We need more research in this area to understand what theoretical safety bounds exist, if any.

How can we secure models?

There are some simple ways to address some of these concerns. For example, adding random noise to input data during training can protect against random perturbations at inference time. Similarly, training with adversarial examples can improve robustness against those kinds of adversarial attacks.
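The first idea, training on randomly perturbed inputs, can be sketched as a data augmentation step. The Gaussian noise, its scale `sigma`, the number of `copies` and the function name are illustrative assumptions; the article does not specify them.

```python
import random


def augment_with_noise(examples, sigma, copies, seed=0):
    """Return the original (x, y) pairs followed by `copies` noisy versions of each.

    Each noisy copy keeps the original label, teaching the model that small
    random perturbations of the input should not change its prediction.
    """
    rng = random.Random(seed)
    augmented = list(examples)
    for x, y in examples:
        for _ in range(copies):
            augmented.append(([xi + rng.gauss(0.0, sigma) for xi in x], y))
    return augmented


if __name__ == "__main__":
    data = [([1.0, 2.0], 1), ([0.0, -1.0], 0)]
    train_set = augment_with_noise(data, sigma=0.1, copies=3)
    print(len(train_set))  # 2 originals + 2 * 3 noisy copies = 8
```

Adversarial training follows the same pattern, except that each perturbed copy is computed from the model's current gradients (for example with FGSM) rather than drawn at random, so it has to be regenerated as the model changes.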

Some techniques, like differential privacy (which limits reliance on any individual example) and homomorphic encryption (which allows computation on encrypted data), are very interesting, and we hope to learn and share more about them in the future.
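For differential privacy in training, one widely used recipe is DP-SGD: clip each example's gradient so that no single example can move the model too far, then add calibrated Gaussian noise. Below is a minimal sketch of one aggregation step, our own illustration rather than the article's. It leaves out the privacy accounting that turns the noise level into a formal (epsilon, delta) guarantee, and the parameter names are our own.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """One DP-SGD style aggregation step.

    1. Clip each example's gradient to L2 norm <= max_norm, bounding any single
       example's influence on the update.
    2. Sum the clipped gradients and add Gaussian noise with standard deviation
       noise_multiplier * max_norm to each coordinate.
    3. Divide by the batch size.
    """
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4]]  # made-up per-example gradients
    print(dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0)))
```

Clipping is what makes the noise meaningful: because no example can contribute more than max_norm, a fixed amount of noise is enough to mask the presence or absence of any one of them.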

From the community

Python’s Dominance in ML

One of our readers, Luke Merrick, posted this article on the dominance of Python in the ML ecosystem (and potential challengers to Python that might be around the corner). Worth a read!

ML Inference on a budget

BudgetML is a nifty library from Hamza Tahir for deploying ML models easily (and cheaply, by using preemptible instances) on Google Cloud (h/t: MLOps Slack community).

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. This is only Day 1 for MLOps and for this newsletter, and we would love to hear your thoughts and feedback. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com.