Issue #19: MLOps Tooling. Vertex AI. Explainable ML in Deployment. Algorithmic Justice.

Welcome to the 19th issue of the MLOps newsletter.

In this issue, we will cover an insightful perspective on the MLOps tooling landscape, dive into a recent announcement from Google, discuss explainability in real deployments, share the news on a proposed piece of legislation, and much more.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

Lj Miranda Blog | Navigating the MLOps tooling landscape

Here, we will be summarizing Lj Miranda’s wonderful 3-part series on navigating the MLOps tooling landscape (Part 1, Part 2, Part 3). He starts by looking into who these tools are for, then categorizes the tools into a neat 2x2, and finally lays out a framework for deciding what tools to use and why.

Who are these tools for and what is the eventual goal?

Miranda focuses on two personas: the ML Researcher and the Software Engineer. The ML Researcher wants to focus on training models and creating new features, which is what she is trained for. The Software Engineer, on the other hand, wants a seamless process for getting models into production.

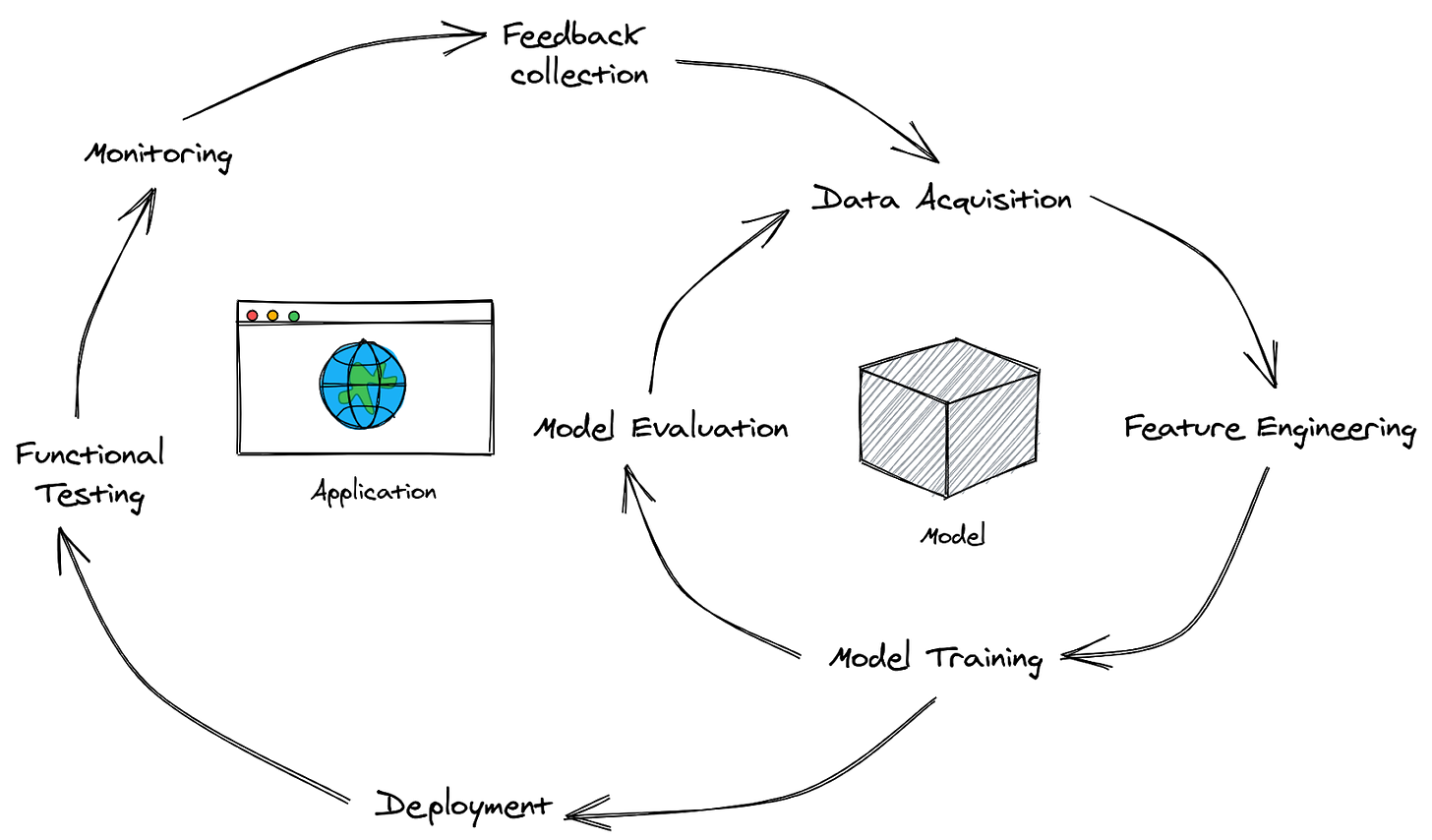

The Software Engineer deals with the loop on the left, while the ML Researcher deals with the loop on the right. Together these constitute the ML lifecycle, and the aim of MLOps tooling is to improve this lifecycle.

How do you categorize these MLOps tools?

Miranda uses two axes to divide up all MLOps tools. First, what type of artifact do they help produce -- software or models? Second, what is the scope of the tool -- piecemeal (affecting only one or two processes in the ML lifecycle) or all-in-one (providing an end-to-end ML solution)?

This leads to four quadrants:

Cloud Platforms: This includes general-purpose cloud providers such as AWS, GCP, and Azure, along with other Big Data focused platforms such as Cloudera and Paperspace. Miranda also includes cloud-based ML platforms such as AWS SageMaker here, although we would choose to include them in the next category.

ML Platforms: This includes tools that address multiple components in the ML lifecycle, such as ClearML and Valohai, and orchestration frameworks that specifically address the ML process, such as Kubeflow and Metaflow.

Specialized ML tools: These are tools that address a very specific component in the ML lifecycle. Examples include Weights and Biases for experiment management, DVC for data versioning, and Prodigy for data annotation.

Standard SWE tools: This includes orchestration tools such as Airflow and CI/CD tools such as Jenkins.
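The 2x2 above can be written down as a small lookup table. This is only a sketch of our reading of the quadrants: the names `Artifact`, `Scope`, `QUADRANTS`, and `quadrant` are made up for illustration, and the placement of each quadrant on the two axes is inferred from the descriptions above rather than quoted from Miranda's posts.

```python
from enum import Enum


class Artifact(Enum):
    SOFTWARE = "software"
    MODEL = "model"


class Scope(Enum):
    PIECEMEAL = "piecemeal"    # touches one or two lifecycle processes
    ALL_IN_ONE = "all-in-one"  # end-to-end solution


# Assumed mapping of (artifact, scope) to the four quadrants named above.
QUADRANTS = {
    (Artifact.SOFTWARE, Scope.ALL_IN_ONE): "Cloud Platforms",
    (Artifact.MODEL, Scope.ALL_IN_ONE): "ML Platforms",
    (Artifact.MODEL, Scope.PIECEMEAL): "Specialized ML tools",
    (Artifact.SOFTWARE, Scope.PIECEMEAL): "Standard SWE tools",
}


def quadrant(artifact: Artifact, scope: Scope) -> str:
    """Return the quadrant name for a tool described by the two axes."""
    return QUADRANTS[(artifact, scope)]


# A few tools mentioned above, placed on the two axes (our placement).
EXAMPLES = {
    "GCP": (Artifact.SOFTWARE, Scope.ALL_IN_ONE),
    "Kubeflow": (Artifact.MODEL, Scope.ALL_IN_ONE),
    "DVC": (Artifact.MODEL, Scope.PIECEMEAL),
    "Airflow": (Artifact.SOFTWARE, Scope.PIECEMEAL),
}

for tool, axes in EXAMPLES.items():
    print(f"{tool}: {quadrant(*axes)}")
```

Real tools often straddle quadrants (as we note for SageMaker), so a table like this is a starting point for discussion rather than a strict taxonomy.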

How to pick MLOps tools?

Miranda introduces the Thoughtworks Technology Radar as a methodology to decide which tools to adopt, which ones to trial, which to assess, and which to hold out on. We’ll let you read the post if you’re interested in the full analysis but will share his build vs buy criteria here.

Don’t build MLOps tooling if it isn’t your core business -- leave it to the companies focusing 100% of their effort on the problem.

Build integrations and connectors between tools -- this allows you to personalize your tooling choice for your business use cases and best utilize this nascent industry.

Buy specialized ML tools first -- they are easier to plug in and out and work best with the existing workflows in your organization. This is sage advice!

Google Cloud launches Vertex AI, a managed Machine Learning platform

At Google I/O this year, Google announced Vertex AI, a new managed machine learning platform on top of GCP for companies to train, deploy, and maintain their AI models. Andrew Moore, vice president and general manager of Cloud AI, told TechCrunch:

“We had two guiding lights while building Vertex AI: get data scientists and engineers out of the orchestration weeds, and create an industry-wide shift that would make everyone get serious about moving AI out of pilot purgatory and into full-scale production”

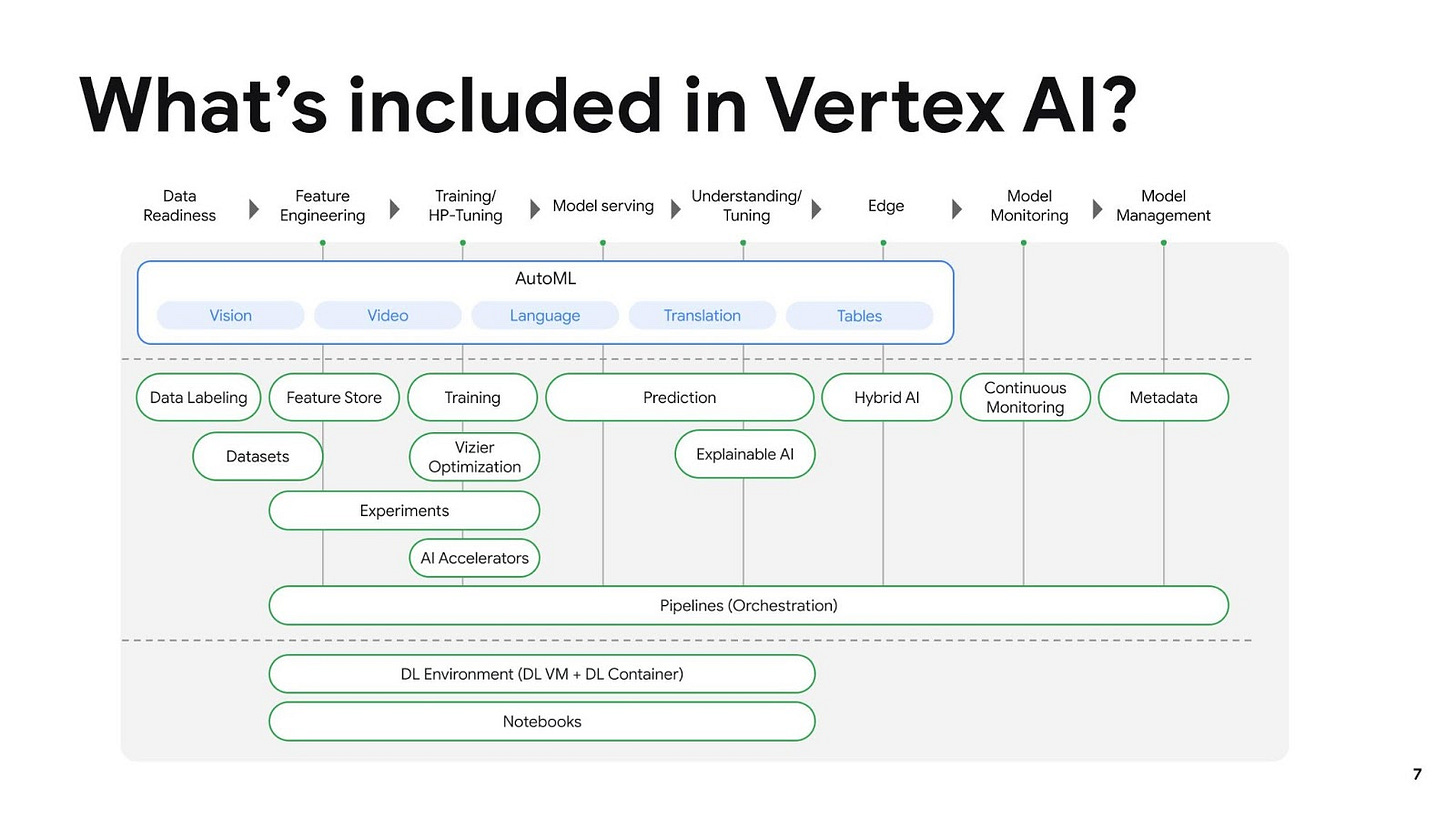

The official Vertex AI documentation on Google Cloud provides a good introduction to Vertex AI feature sets, pricing, etc., and we recommend reading it if you are considering building new ML models or migrating your ML models to the cloud. We share some key highlights below:

Training with minimal code: With the help of AutoML, ML engineers and data scientists can build models in less time. Additionally, companies can take advantage of a centrally managed registry for all datasets across data types (vision, natural language, and structured data).

Data quality: Vertex Data Labeling is a data labeling service that generates custom labels for the data you collect to train models.

Support for open source ML frameworks: Vertex AI integrates with frameworks such as TensorFlow, PyTorch, and scikit-learn via custom containers for training and prediction.

MLOps: With services like Vertex Pipelines (for orchestrating ML workflows), Vertex Model Monitoring (for continuous model monitoring), and Vertex Feature Store (for serving and sharing ML features across models), companies can deploy and maintain ML models in production.

GCP already supported most of the features announced as part of Vertex AI (e.g. AI Platform Pipelines, AI Platform Data Labeling). In part, this announcement is a rebranding exercise to bring these disparate services under the same umbrella and improve interoperability among them. Overall though, we welcome Google’s announcement, especially the parts related to MLOps. It suggests to us that model monitoring and maintenance is an important priority for Google Cloud AI.

Paper | Explainable Machine Learning in Deployment

We have discussed explainability in previous issues, such as here when we talked about using k-NNs for explaining and improving model behavior. This week, we cover a paper that explores how organizations use explainability in their day-to-day operations.

What is this Paper?

The authors explore how organizations have deployed local explainability techniques, so that they can observe which techniques work best in practice, report on the shortcomings of existing techniques, and recommend paths for future research.

This was done by conducting interviews with fifty people from approximately thirty organizations.

How is Explainability Used Today?

There are different sets of stakeholders who require explainability:

Executives: High-level executives often desire explainability as a goal for their systems, but for their teams it can end up being just an item to be crossed off.

ML Engineers: These end up using explainability to debug what the model has learned or inspect how it performs for certain data points.

End Users: This is the most intuitive consumer of an explanation (since they are directly impacted by the model predictions) and more explainable models lead to a more transparent product.

Other stakeholders: This could include regulators, domain experts, annotators, etc.

Some of the needs being fulfilled by explainability today:

Model debugging: Data scientists want to understand why a model performs poorly on certain inputs, or if adding new features and removing old features would improve performance.

Model monitoring: Teams often want to understand whether drift in certain features would impact their model performance (and knowing feature importance is key).

Model transparency: Explanations increase model transparency, giving end users more trust in the product while satisfying regulations. This can help communicate with business teams and respond to customer complaints.

Model audit: In financial organizations, all deployed ML models must go through an internal audit to satisfy regulatory requirements such as SR 11-7. Explainability can provide guidance on “conceptual soundness” by evaluating the model on multiple data points.
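To make the monitoring use case concrete, here is a minimal sketch of weighting per-feature drift by feature importance, so that drift in features the model relies on is surfaced first. The paper does not prescribe a method; the Population Stability Index (PSI) is our choice of drift score, and the function names, feature names, and importance values are all hypothetical.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bin edges come from the reference sample; values outside that range
    are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant reference feature: everything lands in bin 0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def rank_drift(reference, current, importance):
    """Rank features by importance-weighted PSI, highest first."""
    scores = {
        name: importance[name] * psi(reference[name], current[name])
        for name in reference
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy data: "income" shifts upward in production, "age" does not.
reference = {"age": list(range(100)), "income": list(range(100))}
current = {"age": list(range(100)), "income": [v + 50 for v in range(100)]}
importance = {"age": 0.7, "income": 0.3}  # assumed, e.g. from an explainer

for name, score in rank_drift(reference, current, importance):
    print(name, round(score, 3))
```

Multiplying drift by importance is a heuristic, not a guarantee: a small shift in a high-importance feature can still matter more than the score suggests.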

Takeaways and Concerns

The judgment of domain experts (i.e., labels from experts) is still considered ground truth. Explanation-based methods have a long way to go -- these can suffer from spurious correlations (something we covered previously here) and causal understanding remains challenging.

ML Engineers mostly use explainability techniques to identify and reconcile inconsistencies between the model’s explanations and their intuition or that of domain experts, rather than for directly providing explanations to end users.

There are other technical challenges -- computation of explanations can be slow (exponential in input dimensions, in the case of Shapley values), it can be tricky to produce feasible counterfactual explanations, and certain explanations can lead to privacy risks (by exposing details about the model behavior or training data).
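To see where the exponential cost of Shapley values comes from, here is a brute-force sketch that enumerates every subset of features. It assumes one common convention, where an "absent" feature is replaced by a fixed baseline value; the function name `shapley_values` and the toy model are ours, and production libraries rely on sampling or model-specific shortcuts instead of this exact enumeration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input x.

    `predict` takes a list of feature values and returns a number.
    Features outside a coalition are set to `baseline` (an assumption).
    Cost: about n * 2**n model calls for n features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(v):
    """Hypothetical linear model used only for this illustration."""
    return 2 * v[0] + 3 * v[1] - v[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

For a linear model each attribution reduces to the weight times the feature's distance from the baseline, and the attributions sum to the difference between the prediction at `x` and at the baseline. With 3 features the loop is trivial; with 30 features it would need on the order of tens of billions of model calls, which is why approximations are used in practice.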

Finally, because explainability is a new discipline, organizations have yet to figure out the right frameworks for deciding how they will use it, who it is useful for, and when. We expect to see continued research in this field and will continue to cover stories of adoption when we can.

Regulation | The Algorithmic Justice and Online Platform Transparency Act of 2021

Senator Edward J. Markey and Congresswoman Doris Matsui recently introduced the Algorithmic Justice and Online Platform Transparency Act of 2021, a copy of which can be found here. The legislation specifically relates to algorithms used by companies like Facebook and Twitter to determine which content and advertisements to show to users. Congresswoman Matsui said of the bill:

“For far too many Americans, long-held biases and systemic injustices contained within certain algorithms are perpetuating inequalities and barriers to access. The Algorithmic Justice and Online Platform Transparency Act is an essential roadmap for digital justice to move us forward on the path to online equity and stop these discriminatory practices. I look forward to working with Senator Markey and urge all of my colleagues to join us in this effort.”

Highlights

Key highlights of the legislation fall into two buckets. Note that in the bill and in the highlights below, the term “algorithm” really refers to a machine learning model (or ensemble of models), typically combined with some business rules, that together power large-scale data products such as search engines and news feeds.

(1) Preventing Harm to Users:

Prohibit platforms from using algorithms that discriminate on the basis of protected categories such as race, age, and gender.

Platforms may not employ algorithms if they fail to take reasonable steps to ensure these algorithms achieve their intended purposes.

Create an inter-agency task force in the government to investigate potential discriminatory algorithms used online.

(2) Transparency:

Platforms will be required to explain to users how they use algorithms and what data is used to power them.

Platforms will be required to maintain details of how they build algorithms for review by the FTC.

Platforms will be required to publish annual reports of their content moderation practices.

Connection to Section 230

As noted in this article, this legislation takes a fresh approach to bringing more accountability to platforms’ policies and processes around content moderation, because it does not touch Section 230. While there is agreement across the political spectrum that Section 230 needs reform, the details of that reform have become a contested partisan issue. By not touching or seeking to reform Section 230, this bill might have a better shot at getting support across the aisle.

Anthropic: An effort to build reliable, interpretable and steerable AI systems

Anthropic, an AI safety research company, recently came out of stealth with an announcement about their mission & funding (a $124M Series A!!).

Problem

Large AI systems today can be unpredictable, unreliable, and opaque. As stated in the announcement above, Anthropic’s goal is to “make progress on these issues”, primarily focusing on research. Some areas that are within the scope of their research include natural language understanding, reinforcement learning, and interpretability.

Team

While the announcement was light on Anthropic’s research and product plans, they have a stellar team of scientists and engineers, many of whom previously worked at OpenAI and Google Brain on initiatives like GPT-3, Circuit-Based Interpretability, Multimodal Neurons, and Scaling Laws. We wish the team success on this journey and look forward to covering their research in the coming months.

Learn about Explainability

Here’s a great compilation of resources on explainability from Hima Lakkaraju, Assistant Professor at Harvard University.

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup (open to DMs as well) or email us at mlmonitoringnews@gmail.com

If you like what we are doing please tell your friends and colleagues to spread the word.