Issue #27: Medical Imaging Challenges. Machine Unlearning. Managing Supply and Demand. AI Sandbox.

Welcome to the 27th issue of the MLOps newsletter. It has officially been one year since we started writing this newsletter, and we are incredibly grateful for your support. We are excited for the next year to come! 🎉

In this issue, we cover a paper on the machine learning challenges in medical imaging, discuss interesting research regarding machine unlearning, share DoorDash’s strategies for matching supply and demand, and much more.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

Paper | How I failed machine learning in medical imaging - shortcomings and recommendations

This is a fascinating paper that highlights some of the challenges when applying machine learning in the world of medical imaging.

As the authors put it:

there is a staggering amount of research on machine learning for medical images, as many recent surveys show. This growth does not inherently lead to clinical progress. The higher volume of research can be aligned with the academic incentives rather than the needs of clinicians and patients. As an example, there can be an oversupply of papers showing state-of-the-art performance on benchmark data, but no practical improvement for the clinical problem.

It’s Not All About Larger Datasets

Large labeled datasets have solved many problems in computer vision. However, very few clinical questions can be easily posed as discrimination tasks, and even when they can, larger datasets often fail to “solve” them.

An example is the early diagnosis of Alzheimer’s Disease. Despite considerable effort and the collection of ever-larger datasets:

the increase in data size did not come with better diagnostic accuracy, in particular for the most clinically-relevant question, distinguishing pathological versus stable evolution for patients with symptoms of prodromal Alzheimer’s. Rather, studies with larger sample sizes tend to report worse prediction accuracy.

Datasets Reflect An Application Only Partly

Available datasets only partially reflect the clinical situation for a particular medical condition, leading to dataset bias... Dataset bias occurs when the data used to build the decision model (the training data), has a different distribution than the data representing the population on which it should be applied (the test data).
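
To make this definition concrete, here is a minimal sketch (our own illustration, not from the paper) of one simple check for a train/deployment mismatch: the two-sample Kolmogorov-Smirnov (KS) statistic on a single feature. The patient ages are made up, and a real audit would look at many features, acquisition settings, and label prevalence, not just one variable.

```python
# Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
# empirical CDFs of a feature in two cohorts (0 = identical, 1 = disjoint).
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    # The ECDF gap only changes at observed values, so checking them suffices.
    for value in a + b:
        cdf_a = bisect_right(a, value) / len(a)
        cdf_b = bisect_right(b, value) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


# Hypothetical patient ages: a research cohort vs. the clinic's actual population.
train_ages = [62, 65, 67, 70, 71, 72, 74, 75, 77, 80]
deploy_ages = [45, 50, 52, 55, 58, 61, 63, 66, 69, 72]
print(f"KS statistic: {ks_statistic(train_ages, deploy_ages):.2f}")  # → KS statistic: 0.60
```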

There are many sources of dataset bias: from a cohort that does not represent the range of possible patients, to imaging procedures that introduce biases. A particularly harmful case is spurious correlations in clinical images, for example, when dermatologists place a mark next to lesions, so a model can learn to detect the mark rather than the lesion. Labeling errors can also introduce biases. Expert human annotators may give different labels with systematic biases, but multiple annotators are seldom available.
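
When two annotators are available, one standard way to quantify how much they disagree is Cohen's kappa, which discounts the agreement expected by chance. The sketch below is our own illustration with invented labels for ten scans; it is not a method proposed in the paper.

```python
# Cohen's kappa: agreement between two annotators, corrected for the
# agreement they would reach by chance given their own label frequencies.
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both annotators independently pick the same class.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators only ever use the same single label
    return (observed - expected) / (1 - expected)


# Hypothetical reads of 10 scans by two radiologists ("m" = malignant, "b" = benign).
reader_1 = ["m", "m", "b", "b", "m", "b", "b", "m", "b", "b"]
reader_2 = ["m", "b", "b", "b", "m", "b", "m", "m", "b", "b"]
print(f"Cohen's kappa: {cohens_kappa(reader_1, reader_2):.2f}")  # → Cohen's kappa: 0.58
```

The two readers agree on 80% of scans, but once chance agreement (52% here) is removed, kappa is only about 0.58, which is a reminder of how noisy a single annotator's labels can be.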

Metrics That Do Not Reflect What We Want

Suitable metrics for reporting performance on a dataset can change over time, and important metrics, such as calibration, can be missing from research. Moreover, the metrics that are used may not translate into practical improvement.
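
Calibration is easy to overlook because accuracy alone cannot reveal it. A minimal sketch, assuming a binary classifier and one common variant of expected calibration error (ECE) with equal-width bins on the predicted positive probability. The two "models" below are hypothetical: they make identical decisions at a 0.5 threshold, so their accuracy is the same, but only one reports probabilities that match observed frequencies.

```python
# Expected calibration error (ECE), binary variant: bin predictions by the
# predicted positive probability, compare each bin's mean prediction with its
# observed positive rate, and weight the gaps by bin size.


def expected_calibration_error(probs, labels, n_bins=5):
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and equal length")
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[index].append((p, y))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_prob = sum(p for p, _ in members) / len(members)
        positive_rate = sum(y for _, y in members) / len(members)
        ece += len(members) / len(probs) * abs(mean_prob - positive_rate)
    return ece


def accuracy(probs, labels):
    return sum((p >= 0.5) == (y == 1) for p, y in zip(probs, labels)) / len(labels)


labels = [1, 1, 1, 0, 0, 0, 0, 1]
overconfident = [0.95] * 4 + [0.05] * 4
calibrated = [0.75] * 4 + [0.25] * 4
for name, probs in [("overconfident", overconfident), ("calibrated", calibrated)]:
    print(f"{name}: accuracy={accuracy(probs, labels):.2f}, "
          f"ECE={expected_calibration_error(probs, labels):.2f}")
```

Both models score 0.75 accuracy, while their ECE is 0.20 and 0.00 respectively, which is exactly the kind of difference that goes unreported when calibration is missing from a paper.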

Similarly, when these metrics are compared against baselines, the baselines themselves may be poorly chosen. For example, choosing an underpowered baseline can create an “illusion of progress”. The opposite problem, failing to report a simple baseline that would have been effective on a dataset, also leaves out critical information.

Finally, the uncertainty in evaluating a model on a dataset (for example, due to a small test set) might be larger than the reported performance gains, even as more and more effort is spent chasing diminishing returns.

More Than Beating The Benchmark

Good machine-learning benchmarks are more difficult than they may seem...we want to look at more than just outperforming the benchmark, even if this is done with proper validation and statistical significance testing. One point of view is that rejecting a null is not sufficient, and that a method should be accepted based on evidence that it brings a sizable improvement upon the existing solutions.
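
Both points, evaluation noise and "sizable improvement" rather than merely rejecting a null, can be illustrated with a paired bootstrap. The sketch below is our own illustration, not the paper's protocol: it resamples test cases, recomputes the accuracy gap between two models on each resample, and compares the lower end of a 95% percentile interval with a minimum useful gain. The test set and the `MIN_USEFUL_GAP` threshold are hypothetical.

```python
# Paired bootstrap for the accuracy gap between a new model and a baseline
# evaluated on the same test cases.
import random


def paired_bootstrap_ci(correct_new, correct_base, n_resamples=2000, seed=0):
    """95% percentile interval for accuracy(new) - accuracy(base).

    correct_new[i] / correct_base[i] are 1 if that model got test case i right, else 0.
    """
    rng = random.Random(seed)
    n = len(correct_new)
    gaps = []
    for _ in range(n_resamples):
        # Resample test cases, keeping each case's pair of outcomes together.
        idx = [rng.randrange(n) for _ in range(n)]
        gaps.append(sum(correct_new[i] - correct_base[i] for i in idx) / n)
    gaps.sort()
    return gaps[n_resamples * 25 // 1000], gaps[n_resamples * 975 // 1000 - 1]


# Hypothetical 100-case test set: the new model fixes 6 cases the baseline
# misses but breaks 2 that the baseline got right.
base = [1] * 80 + [0] * 20
new = [1] * 78 + [0] * 2 + [1] * 6 + [0] * 14
MIN_USEFUL_GAP = 0.05  # hypothetical: smallest gain we'd consider worth deploying

observed = (sum(new) - sum(base)) / len(base)
lower, upper = paired_bootstrap_ci(new, base)
print(f"observed gap {observed:+.2f}, 95% CI [{lower:+.2f}, {upper:+.2f}]")
print("better than baseline:", lower > 0)
print("sizable improvement:", lower >= MIN_USEFUL_GAP)
```

With only eight cases where the two models disagree, the interval around the +4 point gap is wide, and its lower end falls well short of the hypothetical 5-point bar.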

Conclusions

This paper was a great (although not the most cheerful) read. It’s important to keep in mind some of the challenges with machine learning research, especially in complex domains like medical imaging. We remain super excited about what ML technology can bring to us in this discipline!

Wired | Now That Machines Can Learn, Can They Unlearn?

What is Machine Unlearning?

Machine Unlearning is a new area of research that aims to make models selectively “forget” specific data points they were trained on, along with what was “learned” from them, i.e., the influence of these training instances on the model parameters. The goal is to remove all traces of a particular data point from a machine learning system without affecting the aggregate model performance.
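
For some simple models, exact unlearning is easy. A minimal sketch, assuming a 1-D least-squares regression whose parameters depend only on additive sums over the training data: forgetting a point means subtracting its contribution, and the result matches retraining from scratch without it. Deep networks have no such additive statistics, which is why unlearning for them is an open research problem. The class and data below are our own illustration.

```python
# Exact unlearning for 1-D least squares: the fit depends only on additive
# sufficient statistics, so a point can be "subtracted out".
import math


class UnlearnableLinearRegression:
    def __init__(self):
        self.n = 0
        self.sum_x = self.sum_y = self.sum_xx = self.sum_xy = 0.0

    def _update(self, x, y, sign):
        self.n += sign
        self.sum_x += sign * x
        self.sum_y += sign * y
        self.sum_xx += sign * x * x
        self.sum_xy += sign * x * y

    def learn(self, x, y):
        self._update(x, y, +1)

    def forget(self, x, y):
        # The caller must pass a point that was previously learned.
        self._update(x, y, -1)

    def params(self):
        """Return (slope, intercept) from the normal equations."""
        denom = self.n * self.sum_xx - self.sum_x ** 2
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope, intercept


data = [(1, 2.0), (2, 4.1), (3, 5.9), (4, 8.2)]
to_forget = (5, 1.0)  # hypothetical record a user asks to delete

model = UnlearnableLinearRegression()
for x, y in data + [to_forget]:
    model.learn(x, y)
model.forget(*to_forget)

retrained = UnlearnableLinearRegression()
for x, y in data:
    retrained.learn(x, y)

# Same parameters (up to floating-point rounding) as retraining without the point.
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(model.params(), retrained.params())))  # → True
```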

Why is it important?

Work in this area is motivated in part by growing concerns around privacy and by regulations like the GDPR and its “Right to be Forgotten”. While multiple companies today allow users to request that their private data be deleted, there is no way to request that everything algorithms learned from this data be deleted as well. Furthermore, as we have covered previously, ML models can leak information about their training data, and machine unlearning can be an important lever to combat this.

In our view, there is another reason machine unlearning matters: it can help make recurring model training more efficient by letting models forget training examples that are outdated or no longer relevant.

Initial Explorations

This paper by researchers from the universities of Toronto and Wisconsin-Madison introduces a framework to expedite the unlearning process by limiting the influence of a data point in the training procedure.
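
We believe this framework is SISA (“Sharded, Isolated, Sliced, and Aggregated”) training. The sketch below is a heavily simplified illustration of only its sharding and aggregation ideas, not the authors' code: each shard trains an independent toy model (per-class centroids), predictions are combined by majority vote, and forgetting a point retrains only the shard that held it. The data and class names are hypothetical.

```python
# Simplified sharded training for cheaper unlearning: deleting a point only
# retrains the one shard that contained it.
from collections import Counter


def train_centroids(points):
    """Toy per-shard model: the mean feature value of each class."""
    sums, counts = {}, Counter()
    for x, label in points:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


class ShardedEnsemble:
    def __init__(self, points, n_shards=3):
        # Round-robin assignment; assumes every (x, label) point is unique.
        self.shards = [[] for _ in range(n_shards)]
        self.location = {}
        for i, point in enumerate(points):
            shard_id = i % n_shards
            self.shards[shard_id].append(point)
            self.location[point] = shard_id
        self.models = [train_centroids(shard) for shard in self.shards]
        self.retrained_shards = 0

    def forget(self, point):
        shard_id = self.location.pop(point)
        self.shards[shard_id].remove(point)
        # Only the affected shard's model is rebuilt; the others are untouched.
        self.models[shard_id] = train_centroids(self.shards[shard_id])
        self.retrained_shards += 1

    def predict(self, x):
        votes = Counter()
        for centroids in self.models:
            if centroids:  # a shard may be empty after many deletions
                votes[min(centroids, key=lambda c: abs(x - centroids[c]))] += 1
        return votes.most_common(1)[0][0]


points = [(1.0, "a"), (1.2, "a"), (0.8, "a"), (5.0, "b"), (5.3, "b"),
          (4.7, "b"), (1.1, "a"), (5.1, "b"), (0.9, "a")]
ensemble = ShardedEnsemble(points, n_shards=3)
ensemble.forget((5.3, "b"))  # e.g. a deletion request
print(ensemble.predict(1.05), ensemble.predict(5.2), ensemble.retrained_shards)  # → a b 1
```

The tradeoff is that each shard's model sees less data, so more shards make deletions cheaper at some potential cost in accuracy.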

A more recent paper by researchers at Cornell, the University of Waterloo, and Google studies how well various machine unlearning approaches generalize. It also proposes a new unlearning algorithm that improves “deletion capacity” (the fraction of the training dataset that can be deleted with a bounded loss in performance) for convex loss functions.

This is a new area of research. The article highlights a couple of recently published papers on the topic (shared above). Our general take is that much more exploration and rigorous testing are needed before we can draw conclusions about the real-world applicability of machine unlearning.

DoorDash Engineering Blog | Managing Supply and Demand Balance Through Machine Learning

DoorDash recently shared their approach to managing supply and demand balance on their platform with machine learning. The article does a good job of explaining the problem, the challenges, and their approach, and we recommend reading it in full. We highlight some salient takeaways below.

Delivery-level vs Market-level balance

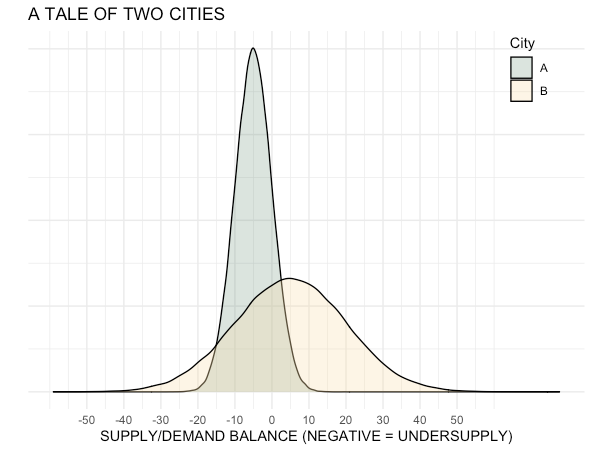

The goal of the system is to balance supply (of Dashers) and demand (of delivery orders). But at what granularity should this balance be optimized (and measured)? Ideally, the system would balance supply and demand at the delivery/order level, i.e., every order has a Dasher available at the optimal time, and every Dasher meets or exceeds their targeted pay per hour. However, a more tractable problem is to balance at the market level, i.e., ensure that approximately as many Dashers are available as are necessary and sufficient to meet aggregate order demand, even if the outcome for an individual delivery or Dasher is less than optimal.

Problem Formulation

Forecasting: As shared in the article, the primary metric used to measure this is the “number of delivery hours”, i.e., the system tries to match supply to the number of Dasher-hours required to make deliveries while keeping delivery times low and Dasher utilization high. Framed this way, demand forecasting becomes a regression problem. As highlighted in the article, the team used gradient boosting as the underlying model architecture, through the LightGBM framework.
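
For readers who treat gradient boosting as a black box, here is a toy, pure-Python version for a regression target such as delivery hours. It is our own illustration of the general technique, not DoorDash's model and not LightGBM (which adds histogram-based splits, deeper trees, regularization, and much more). The features (hour of day, weekend flag) and numbers are invented.

```python
# Toy gradient boosting for regression with squared loss: each round fits a
# depth-1 tree ("stump") to the residuals, which are the negative gradients.


def fit_stump(rows, residuals):
    """Find the (feature, threshold) split that best reduces squared error."""
    best = None
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            left = [res for r, res in zip(rows, residuals) if r[f] <= threshold]
            right = [res for r, res in zip(rows, residuals) if r[f] > threshold]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((e - lv) ** 2 for e in left) + sum((e - rv) ** 2 for e in right)
            if best is None or sse < best[0]:
                best = (sse, f, threshold, lv, rv)
    if best is None:  # no valid split: every feature is constant
        mean = sum(residuals) / len(residuals)
        return 0, float("inf"), mean, mean
    return best[1:]


def fit_boosted(rows, targets, n_rounds=50, learning_rate=0.1):
    base = sum(targets) / len(targets)  # start from the mean prediction
    preds = [base] * len(rows)
    stumps = []
    for _ in range(n_rounds):
        residuals = [t - p for t, p in zip(targets, preds)]
        f, thr, lv, rv = fit_stump(rows, residuals)
        stumps.append((f, thr, lv, rv))
        preds = [p + learning_rate * (lv if r[f] <= thr else rv) for p, r in zip(preds, rows)]
    return base, learning_rate, stumps


def predict(model, row):
    base, lr, stumps = model
    return base + sum(lr * (lv if row[f] <= thr else rv) for f, thr, lv, rv in stumps)


# Hypothetical training data: (hour_of_day, is_weekend) -> Dasher-hours needed.
rows = [(11, 0), (12, 0), (18, 0), (19, 0), (11, 1), (12, 1), (18, 1), (19, 1)]
targets = [20, 25, 40, 45, 30, 35, 60, 65]
model = fit_boosted(rows, targets, n_rounds=100)
print(round(predict(model, (19, 1)), 1), round(predict(model, (11, 0)), 1))
```

In practice you would hand a feature table like this to LightGBM rather than write your own booster; the point is only that the forecasting target is a plain numeric quantity, so any regression learner applies.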

Optimization: Predictions from the forecasting ML model are fed into a mixed-integer programming (MIP) optimizer whose objective is to decide incentive rewards to minimize undersupply of Dashers, subject to certain configurable constraints.
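
To show the shape of such an optimization, here is a toy stand-in that we made up, not DoorDash's formulation. It chooses an integer incentive level per region to minimize total undersupply (forecast demand hours not covered by expected supply hours), subject to a budget. A real system would pass a formulation like this to a MIP solver; brute force works here only because the example is tiny. The regions, the linear supply response, and the costs are all hypothetical.

```python
# Toy incentive allocation: integer decision variables, an undersupply
# objective, and a budget constraint, solved by exhaustive search.
from itertools import product

regions = {
    # region: (forecast demand hours, expected supply hours without incentives)
    "downtown": (120, 100),
    "suburbs": (80, 75),
    "airport": (40, 40),
}
LEVELS = range(4)           # incentive levels 0..3 per region
EXTRA_HOURS_PER_LEVEL = 8   # assumed extra supply hours per incentive level
COST_PER_LEVEL = 100        # assumed cost of one incentive level
BUDGET = 300


def undersupply(levels):
    total = 0
    for (demand, supply), level in zip(regions.values(), levels):
        total += max(0, demand - (supply + EXTRA_HOURS_PER_LEVEL * level))
    return total


def best_plan():
    feasible = (
        levels for levels in product(LEVELS, repeat=len(regions))
        if COST_PER_LEVEL * sum(levels) <= BUDGET
    )
    # Minimize undersupply first, then prefer the cheaper plan.
    return min(feasible, key=lambda levels: (undersupply(levels), sum(levels)))


plan = best_plan()
print(dict(zip(regions, plan)), "undersupply:", undersupply(plan))
# → {'downtown': 2, 'suburbs': 1, 'airport': 0} undersupply: 4
```

The search space grows exponentially with the number of regions and levels, which is why production systems rely on MIP solvers instead.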

Reliability Considerations

The article shares some interesting and valuable learnings about tradeoffs between model performance and reliability. For example, their forecasting model relies on a minimal set of features by design, which reduces the complexity of ETL pipelines and the likelihood of feature drift, and improves transparency:

We could encode hundreds of features to build a model that has high performance. Although that choice is very appealing and it does help with creating a model that performs better than one that has a simple data pipeline, in practice it creates a system that lacks reliability and generates a high surface area for feature drift

Another point highlighted in the article concerns long chains of ETL dependencies, something we have also observed in our own work. ETL jobs can fail or be delayed for any number of reasons. When your model's inputs are the final result of a long chain of such dependencies, the model can become very unreliable, because every link in the chain is another point of failure. Designing your input feature computations to have minimal intermediate dependencies can go a long way toward improving reliability.
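
A back-of-the-envelope calculation shows why chain length matters. This sketch assumes that each job succeeds independently with the same probability, which is a simplification: real failures are often correlated. It is meant as intuition, not as a reliability model.

```python
# Probability that every job in an n-step ETL dependency chain succeeds,
# assuming independent jobs with identical success probability.


def chain_success_probability(per_job_success, n_jobs):
    return per_job_success ** n_jobs


for n_jobs in (1, 5, 10, 30):
    print(f"{n_jobs:>2} jobs at 99% each -> {chain_success_probability(0.99, n_jobs):.1%}")
```

Even with 99% per-job reliability, a 10-step chain delivers fresh features only about 90.4% of the time, and a 30-step chain about 74.0%.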

Emerging Tech Brew Blog | To regulate AI, try playing in a sandbox

The last couple of years have been especially news-filled on the AI regulation front.

Last May, Norway announced plans to create an AI regulatory sandbox, and the recently proposed EU legislation mentions the word “sandbox” 38 times. The goal of such regulatory sandboxes is to:

…allow organizations to develop and test new technologies in a low-stakes, monitored environment before rolling them out to the general public.

What happened?

After Norway’s data protection authority, Datatilsynet, announced plans to create an AI regulatory sandbox, it accepted four participants (from both the public and private sectors) for three- to six-month engagements. These participants were facing regulatory conundrums such as “applying principles like transparency, fairness, and data minimization to AI systems”.

Generally, the goal of Norway’s AI regulatory sandbox is to facilitate compliance with some of these trickier provisions of the GDPR. It’s not looking to create a process that all tech developers go through, but rather to produce helpful precedent in fuzzy legal areas, and communicate those findings to organizations building AI systems. Its approach relies on a series of hands-on workshops and extended conversation and negotiation between tech developers and regulators.

Our Thoughts

These are still very early days for both the usage of AI in industry and regulating such technology. As different countries (and regions such as the EU) try different strategies to tackle the very real risk that AI systems pose, we will get to learn from the approaches that deliver the best results.

Snowflake | Unstructured Data Support

Snowflake recently announced support for unstructured data - now users can access, share, load, and process unstructured files of data in Snowflake.

The feature was first previewed at Snowflake Summit earlier this year as a ‘private preview’, and you can read more about it in this documentation. The release notes and documentation are a little light on what’s possible with unstructured data beyond storing and accessing it in Snowflake, but there is an upcoming webinar on September 22 (signup link) that might be relevant if you wish to learn more.

GitHub | Python Package for Online ML

River looks like a neat Python library for online machine learning. As they put it:

River's ambition is to be the go-to library for doing machine learning on streaming data.

Machine learning is often done in a batch setting, whereby a model is fitted to a dataset in one go. This results in a static model which has to be retrained in order to learn from new data… With River, we encourage a different approach, which is to continuously learn a stream of data. This means that the model process one observation at a time, and can therefore be updated on the fly. This allows to learn from massive datasets that don't fit in main memory.
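
To illustrate the “one observation at a time” pattern, here is a minimal sketch in plain Python. It is not River's code: it is a hand-rolled linear regression trained with SGD, whose method names mirror River's `predict_one` and `learn_one` convention. For each incoming example it predicts first, records the error, and then updates the model, so every prediction is scored on data the model has not yet seen. The data stream is synthetic.

```python
# Online learning loop: predict, score, then learn, one example at a time.


class OnlineLinearRegression:
    def __init__(self, learning_rate=0.05):
        self.lr = learning_rate
        self.weights = {}
        self.bias = 0.0

    def predict_one(self, x):
        return self.bias + sum(self.weights.get(k, 0.0) * v for k, v in x.items())

    def learn_one(self, x, y):
        # One SGD step on squared error for this single example.
        error = self.predict_one(x) - y
        self.bias -= self.lr * error
        for k, v in x.items():
            self.weights[k] = self.weights.get(k, 0.0) - self.lr * error * v


def stream():
    """Synthetic stream following y = 3*x1 - 2*x2 + 1, one dict at a time."""
    for i in range(2000):
        x = {"x1": (i % 7) / 7, "x2": (i % 5) / 5}
        yield x, 3 * x["x1"] - 2 * x["x2"] + 1


model = OnlineLinearRegression()
abs_errors = []
for x, y in stream():
    abs_errors.append(abs(model.predict_one(x) - y))  # score before learning
    model.learn_one(x, y)

print(f"MAE first 100: {sum(abs_errors[:100]) / 100:.3f}")
print(f"MAE last 100:  {sum(abs_errors[-100:]) / 100:.3f}")
```

Because the model never needs the full dataset in memory, the same loop works for a stream that never ends; River packages this pattern with many more models, preprocessors, and metrics.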

You can learn more by reading this paper or by watching this video.

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com. If you like what we are doing please tell your friends and colleagues to spread the word.