Issue #28: MAD Landscape. Covid-19 Border Testing. Blocking Spam@Slack. Applying ML. Scikit-learn 1.0.

Welcome to the 28th issue of the MLOps newsletter. We really enjoyed writing this one, and we hope you enjoy it too!

In this issue, we briefly cover Nihit’s interview with Eugene Yan, discuss Matt Turck’s ML, AI, and Data landscape, share a cool ML-based invite spam detection system from Slack, and dive into a fascinating reinforcement learning system for COVID-19 border testing.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

Eugene Yan | Applying ML

Nihit: I recently did an interview with Eugene Yan as part of his ApplyingML initiative (a fantastic collection of resources for building machine learning systems: papers, tools, playbooks, and mentor interviews). In the interview, I share my thoughts on the machine learning infra and tooling landscape, and my experience building ML systems at Facebook. You can check out the transcript here (or read interviews with other mentors).

As Eugene put it, his main motivation is to collect “ghost knowledge” that resides within the community but is seldom officially documented:

Knowledge that is present somewhere in the epistemic community, and is perhaps readily accessible to some central member of that community, but it is not really written down anywhere and it's not clear how to access it.

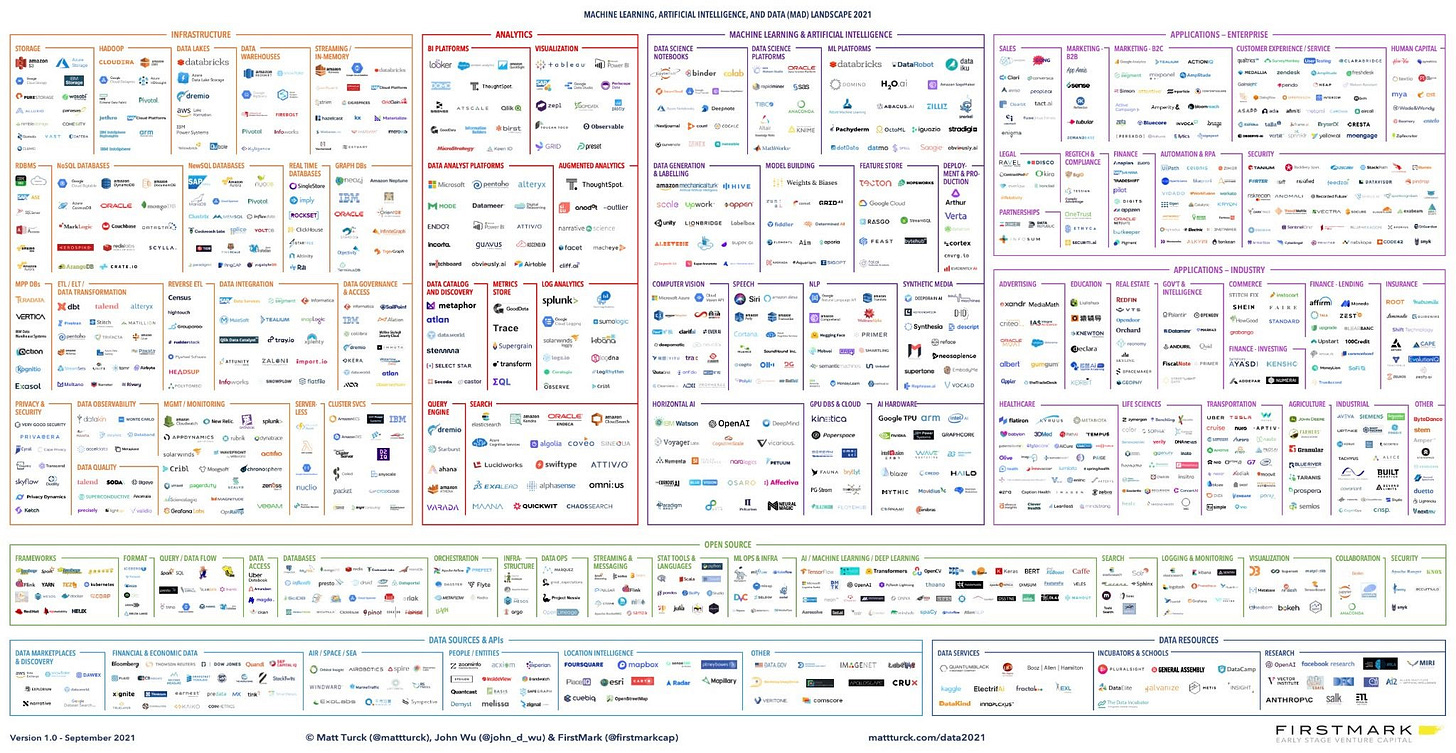

Matt Turck | Red Hot: The 2021 Machine Learning, AI and Data (MAD) Landscape

The Data Landscape (now the MAD Landscape) post from Matt Turck is a great read every year. We covered the 2020 post last year, and it was fun to look back on it and compare it to 2021.

How has this last year been?

As the authors write:

It’s been a hot, hot year in the world of data, machine learning and AI.

This is evident from the denser landscape chart (and our coverage of the ecosystem over the past year), as well as from the recent successful IPOs in the space (Snowflake, Confluent, UiPath, C3.ai, SentinelOne) and the increased funding for AI startups ($38B in the first half of 2021 compared to $36B in all of 2020).

We have seen tremendous growth in the number of startups and tools, leading to a dizzying set of options for companies and practitioners (which is mostly good news). As the authors put it:

...we believe that companies will continue to work with multiple vendors, platforms and tools, in whichever combination best suits their needs.

The key reason: the pace of innovation is just too explosive in the space for things to remain static for too long. Founders launch new startups, Big Tech companies create internal data/AI tools and then open source them, and for every established technology or product, a new one seems to emerge weekly.

For newer categories such as MLOps, this growth has also led to a crowded market:

as VCs aggressively invested in emerging sectors up and down the data stack, often betting on future growth over existing commercial traction, some categories went from nascent to crowded very rapidly – reverse ETL, data quality, data catalogs, data annotation and MLOps.

2021 for MLOps

It’s rare that we get to quote ourselves, but here is one of our predictions from last year:

we believe there will be many more categories (which will be much more crowded) in the coming years.

That’s certainly proven to be true. This year, we saw new categories for:

Feature Stores

Model Building

Deployment and Monitoring

Synthetic Media

Data Quality & Observability

The older categories for Data Generation & Labelling, Data Science Notebooks, Data Science Platforms, ML Platforms, Speech, Computer Vision, and NLP continued to grow in number of companies. We aren’t going to reference any specific companies here; there are simply far too many for us to do them justice.

Final Thoughts

There are many interesting and important problems to be solved in the world of data and ML, and the density of talent trying to address these challenges is the most exciting aspect to us!

We definitely recommend giving the entire article a read, especially to get a sense of the broader data ecosystem.

Paper | Efficient and targeted COVID-19 border testing via reinforcement learning

This is a fascinating paper that describes a reinforcement learning system deployed at Greece’s borders.

What’s the Problem?

Throughout the COVID-19 pandemic, countries relied on a variety of ad-hoc border control protocols to allow for non-essential travel while safeguarding public health: from quarantining all travelers to restricting entry from select nations based on population-level epidemiological metrics such as cases, deaths or testing positivity rates.

This has been a difficult problem for many countries: whether to allow travelers in, which travelers to allow in, and what testing requirements to place on them. Circumstances change rapidly, and outbreaks can occur in countries much faster than rules and policies can be created. The team built a reinforcement learning system that was deployed across all 40 ports of entry to Greece, from airports to land crossings to seaports.

Without going into details, the system consisted of the following components:

Asking incoming travelers to fill out a form per household containing information about their travel and demographics.

Estimating the prevalence of COVID using recent test results across a discrete set of interpretable traveler types (based on country, region, age, and gender).

Allocating a scarce number of tests among the travelers while balancing two objectives: maximizing the number of infected asymptomatic travelers identified (exploitation) and better learning the prevalence of COVID for traveler types for which it does not yet have precise estimates (exploration).

Conducting tests and logging the results as quickly as possible to improve the estimates in Step 2.
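The allocation step is a classic exploration/exploitation trade-off. The deployed system used empirical Bayes estimates and Gittins indices; the sketch below swaps in a simpler, well-known bandit heuristic (batched Thompson sampling over Beta posteriors) purely to illustrate the trade-off. The function name `allocate_tests`, the uniform Beta(1, 1) prior, and all counts are hypothetical.

```python
import numpy as np


def allocate_tests(positives, tested, arrivals, n_tests, prior=(1.0, 1.0), seed=0):
    """Split a test budget across traveler types with batched Thompson sampling.

    NOTE: a swapped-in heuristic for illustration, not the paper's Gittins-index policy.
    positives[k] / tested[k]: past positive results and tests for traveler type k.
    arrivals[k]: travelers of type k arriving today (an upper bound on its tests).
    """
    rng = np.random.default_rng(seed)
    positives = np.asarray(positives, dtype=float)
    tested = np.asarray(tested, dtype=float)
    remaining = np.asarray(arrivals, dtype=int).copy()

    # Beta posterior over each type's prevalence: Beta(a + positives, b + negatives).
    alpha = prior[0] + positives
    beta = prior[1] + (tested - positives)

    allocation = np.zeros(len(remaining), dtype=int)
    for _ in range(min(n_tests, int(remaining.sum()))):
        # Draw one plausible prevalence per type: poorly estimated types sometimes
        # draw high values (exploration); known high-prevalence types often do (exploitation).
        draws = rng.beta(alpha, beta)
        draws[remaining == 0] = -np.inf  # no travelers of this type left to test
        k = int(np.argmax(draws))
        allocation[k] += 1
        remaining[k] -= 1
    # Posteriors are not updated inside the loop: results only arrive after testing.
    return allocation


# Hypothetical history: type 0 is well studied and low risk, type 1 is well
# studied and higher risk, type 2 has never been tested.
allocation = allocate_tests(
    positives=[0, 30, 0],
    tested=[1000, 1000, 0],
    arrivals=[100, 100, 100],
    n_tests=50,
)
print(allocation)
```

With these made-up counts, most of the budget goes to the never-tested type (exploration), while the well-studied low-risk type receives essentially none. The real system also had to respect per-port capacity and other operational constraints that this sketch ignores.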

What were the Results?

The results are amazing. Using counterfactual analysis, the authors found that the system identified 1.85 times as many asymptomatic, infected travelers as random testing (with up to 2-4 times as many during peak travel), and 1.25-1.45 times as many asymptomatic, infected travelers as testing policies that only used epidemiological metrics.

Lessons from Design and Deployment

Data minimization: The algorithm was designed to require minimal data about travelers in order to comply with GDPR, even when there is a “tradeoff between privacy and effectiveness”.

Prioritize interpretability: For example, they used empirical Bayes to communicate that large confidence intervals suggest higher risk. Similarly, their use of Gittins indices to provide a simple risk metric for each traveler type made decisions more intuitive.

Design for flexibility: The system required substantial financial and technical investment, and it needed to be flexible to accommodate unexpected changes. For example, they were able to quickly define new traveler types when vaccine distribution started without altering any other components.
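To show why empirical Bayes pairs naturally with "large intervals mean potential risk", here is a crude sketch, not the paper's estimator: it fits one shared Beta prior to per-type positivity rates by the method of moments, then computes each type's posterior mean and standard deviation. The function names and all counts are hypothetical.

```python
import numpy as np


def fit_beta_prior(positives, tested):
    """Method-of-moments Beta(a, b) prior from raw per-type positivity rates.

    Crude on purpose: it treats the binomial noise in small-sample rates as
    real variation between types, which inflates the prior variance.
    """
    rates = np.asarray(positives, dtype=float) / np.asarray(tested, dtype=float)
    m, v = rates.mean(), rates.var()
    if v <= 0 or m * (1.0 - m) <= v:
        raise ValueError("rates are too uniform or too dispersed for this fit")
    strength = m * (1.0 - m) / v - 1.0  # a + b, from Var = m(1 - m) / (a + b + 1)
    return m * strength, (1.0 - m) * strength


def posterior_mean_sd(positives, tested, a, b):
    """Mean and standard deviation of each type's Beta(a + pos, b + neg) posterior."""
    positives = np.asarray(positives, dtype=float)
    alpha = a + positives
    beta = b + np.asarray(tested, dtype=float) - positives
    total = alpha + beta
    mean = alpha / total
    sd = np.sqrt(alpha * beta / (total**2 * (total + 1.0)))
    return mean, sd


# Hypothetical traveler types: type 2 has only 5 tests, type 3 has 1,000.
positives = [8, 15, 1, 30, 2]
tested = [400, 600, 5, 1000, 150]
a, b = fit_beta_prior(positives, tested)
mean, sd = posterior_mean_sd(positives, tested, a, b)
print(np.round(mean, 3), np.round(sd, 3))
```

Here the 1-in-5 type is shrunk well below its raw 20% rate toward the pooled rate, but it keeps a much larger standard deviation than the 1,000-test type; that residual uncertainty is what a policy can read as "this type might be risky, so test it".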

Our Thoughts

Problems such as this are fraught with complications around bias, data privacy, and other real-world concerns. We have written extensively about cases where ML goes wrong (see our coverage of ML + Covid here and here), but this is a fantastic example of how we can build better systems when ML is deployed appropriately.


Slack Engineering Blog | Blocking Slack Invite Spam With Machine Learning

In this article, Aaron Maurer shares learnings and insights from building invite spam detection models at Slack. We especially liked the journey shared in the article (no spam detection → rule-based → ML model-based), which many teams and companies will find relatable as they progress through the ML adoption maturity journey.

Problem

One of the first things you do after creating a new Slack team is invite people from your community, research group, company, etc. to join. You do this by entering the email addresses of the people you’d like to invite, and Slack then sends out invites on your behalf. However, spammers sometimes abuse this system to send out spam invite emails.

Rule-based spam detection

As with many real-world ML applications, Slack’s early systems for detecting and enforcing against invite spam were rule-based, built on attributes like IP addresses, whether the invite matched certain words or phrases, etc. As might be expected, this worked reasonably well at preventing spam invites, but it was labor-intensive (new rules had to be created on an ongoing basis), and the rules produced many false positives.

Model-based spam detection

Feature set: The team reused the features from the hand-tuned rules. This is quite intuitive for most ML applications that are trying to replace hand-tuned rules: oftentimes, the rules (even though they are noisy) can serve as a good set of features for your first model.

Labels: As highlighted in the post, ground truth labels (“is this invite spam or not?”) are not available for all invite emails. Although these labels could be obtained via human review, doing so costs time and money. The team instead chose to train the model on a proxy observation: whether an invite was accepted within 4 days (90% of invites that get accepted are accepted within that timeframe).

Model: A sparse logistic regression model that outputs a score proportional to the probability that an invite is spam.

Online serving: Slack has an in-house service for serving model predictions. To serve predictions from a machine learning model online, an ML engineer implements a lightweight Python class. The service deploys it as a microservice through Kubernetes, which can then be queried from the rest of Slack’s tech stack.
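The recipe above (rule-style features, a proxy label, a sparse logistic regression, and a thin Python class for serving) can be sketched with scikit-learn. Everything specific here is hypothetical: the feature names, the synthetic data, and the `InviteSpamModel` class with its `score` method are invented for illustration and are not Slack's actual features or serving interface.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Hypothetical rule-derived binary features (names invented for illustration).
FEATURES = ["ip_on_blocklist", "suspicious_phrase", "new_team", "bulk_invites", "noise_a", "noise_b"]

rng = np.random.default_rng(0)
n = 2000
X = rng.integers(0, 2, size=(n, len(FEATURES))).astype(float)

# Synthetic world: only the first four features drive non-acceptance.
logits = -3.0 + 2.5 * X[:, 0] + 2.0 * X[:, 1] + 0.5 * X[:, 2] + 1.5 * X[:, 3]
p_not_accepted = 1.0 / (1.0 + np.exp(-logits))
# Proxy label: 1 if the invite was NOT accepted within 4 days, else 0.
y = (rng.random(n) < p_not_accepted).astype(int)

# The L1 penalty pushes weak coefficients to exactly zero (a sparse model);
# "liblinear" is one of the solvers that supports penalty="l1".
clf = LogisticRegression(penalty="l1", solver="liblinear", C=0.05)
clf.fit(X, y)


class InviteSpamModel:
    """Hypothetical lightweight wrapper that a prediction service could load and call."""

    def __init__(self, estimator, feature_names):
        self.estimator = estimator
        self.feature_names = list(feature_names)

    def score(self, invite: dict) -> float:
        # Features missing from the request default to 0 (the rule did not fire).
        row = np.array([[float(invite.get(name, 0)) for name in self.feature_names]])
        # Column 1 of predict_proba is the probability of label 1 (not accepted).
        return float(self.estimator.predict_proba(row)[0, 1])


model = InviteSpamModel(clf, FEATURES)
print(dict(zip(FEATURES, np.round(clf.coef_[0], 2))))
print(model.score({"ip_on_blocklist": 1, "suspicious_phrase": 1}))
print(model.score({}))
```

Keeping the serving class this thin means the surrounding service (containerization, Kubernetes, request routing) stays model-agnostic; only `score` needs to change when the model does.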

Impact

As outlined in the post, the switch to model-based detection resulted in a big decrease in false positives, while maintaining high recall:

The machine learning model was much better at preventing false positives. Only 3% of the invites it flagged ended up being accepted when allowed through, while around 70% of the invites flagged by the old model actually ended up being accepted

Towards Data Science | The 6-Minute Guide to Scikit-learn’s Version 1.0 Changes 😎

Scikit-learn, the widely used Python machine learning library, recently released version 1.0. This article by Jeff Hale highlights the changes, including bug fixes, new features, and API cleanups. We summarize the key takeaways below:

OneHotEncoder: This feature preprocessing step now supports handle_unknown='ignore' in combination with dropping categories. handle_unknown='ignore' lets the encoder accept values it hasn’t seen before (especially helpful in production, where it can encounter values it has no encoding for), and the drop option removes categories within a feature that you might want to ignore.

Pandas: When an estimator is fit on a pandas DataFrame, it now stores the input column names in feature_names_in_. Additionally, get_feature_names_out has been added to the transformer API to return the names of the output features.

SGDOneClassSVM: This new class is an online, linear version of the One-Class SVM trained with stochastic gradient descent (commonly used for outlier or out-of-distribution detection).

Normalization in linear models: The “normalize” option in linear_model.LinearRegression is deprecated and will be removed in version 1.2. The recommended replacement is a scikit-learn pipeline that applies StandardScaler before the linear model (note that this standardizes features rather than reproducing normalize=True exactly).

Sklearn metrics: Several display classes in sklearn.metrics, such as ConfusionMatrixDisplay and PrecisionRecallDisplay, now expose two class methods, from_estimator and from_predictions, for creating a plot from either a fitted estimator or precomputed predictions. The equivalent functions metrics.plot_confusion_matrix and metrics.plot_precision_recall_curve are deprecated and will be removed in version 1.2.

Twitter | Lessons for Recommender Systems

In this Twitter thread, Karl Higley shares some great insights about building large-scale recommendation systems. Worth clicking and reading through it!

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com.

If you like what we are doing, please tell your friends and colleagues to spread the word. ❤️