Issue #29: State of AI. Kaggle ML Survey. ML Deployment at Reddit. Inferentia.

Welcome to the 29th issue of the MLOps newsletter. This one is fairly image-rich, so we hope you enjoy it! 🖼️

In this issue, we briefly cover the latest State of AI Report, look into the key trends from Kaggle’s Data Science and ML survey, explore Reddit’s model deployment architecture, deep dive into a paper on augmenting human annotations in microscopy, and much more.

Thank you for subscribing. If you find this newsletter interesting, tell a few friends and support this project ❤️

State of AI Report 2021

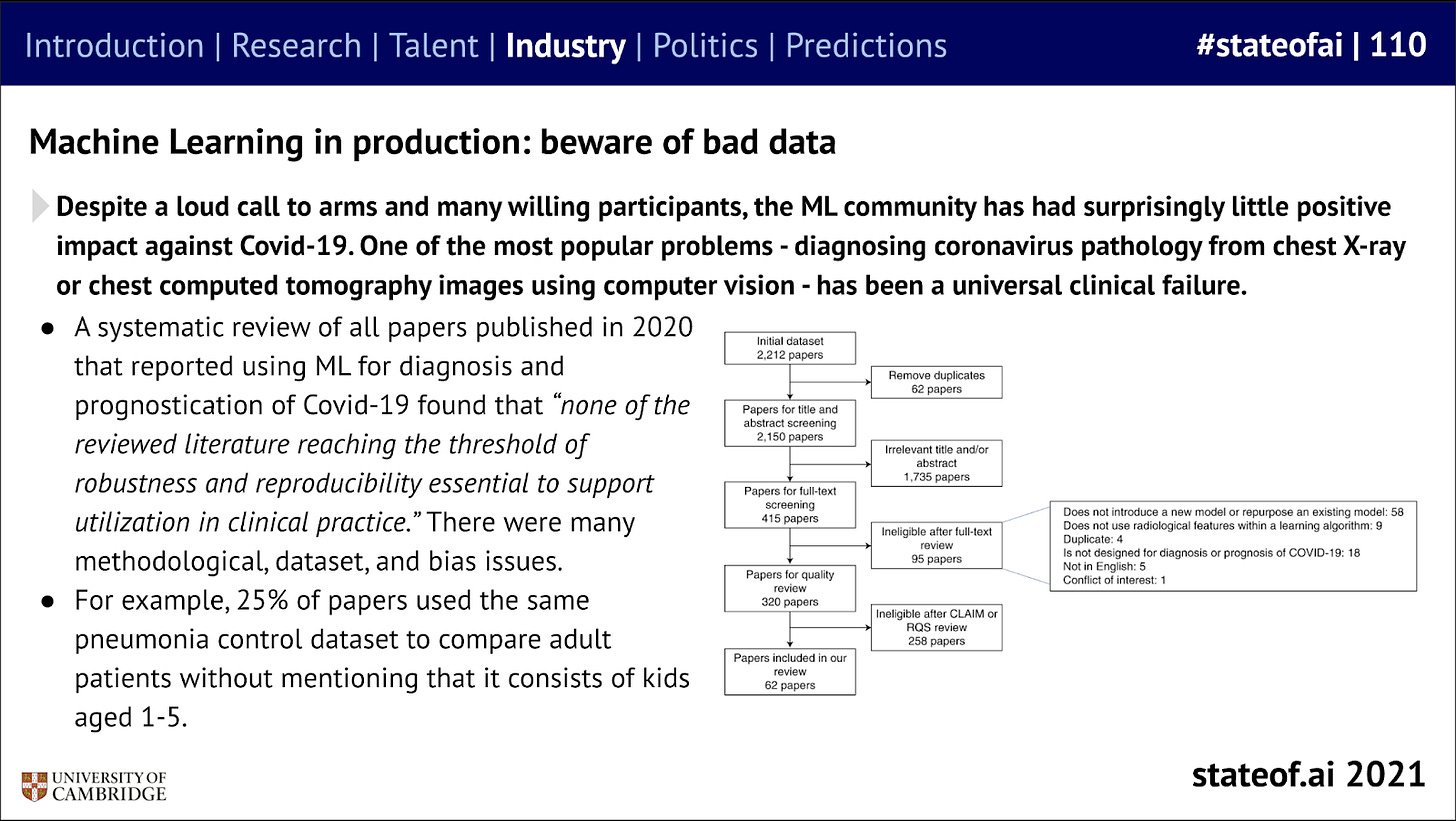

We covered the State of AI Report 2020 last year here, and the authors are back with their latest analysis of the most interesting developments in AI. It’s a whopping 188 slides and roughly 5 hours of reading and digesting, but probably worth the effort. While the report is broken up into sections on research, talent, industry, politics, and predictions, we will focus only on the slides about AI in industry.

It’s nice to see lessons from ML in production making their way back into research. One example is the move towards data-centric AI, a topic we have covered a lot recently.

Similarly, distribution shift between training time and prediction time is a common concern in industry, and it was interesting to see the WILDS benchmark, which is built around exactly these real-world shifts, featured here.
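For readers newer to the idea: one simple, widely used way to quantify how far a single numeric feature has drifted between training time and prediction time is the population stability index (PSI). The sketch below is our own illustration, not something taken from the report or from WILDS; the equal-width binning over the training range and the small smoothing constant are assumptions.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index between a training-time sample (`expected`)
    and a prediction-time sample (`actual`) of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant training feature

    def bin_fractions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the training range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6  # smoothing so empty bins don't produce log(0)
    e_frac, a_frac = bin_fractions(expected), bin_fractions(actual)
    return sum((a - e) * math.log((a + eps) / (e + eps)) for e, a in zip(e_frac, a_frac))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the application.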

A number of other topics we have covered before also made an appearance: dynamic benchmarking (Dynabench in particular) for quickly improving models, underspecification (where the same model trained with different random seeds behaves differently), and pervasive “bad data” issues (highlighted in the research on ML for Covid).

We loved the stories of applied ML: Ocado’s deep learning being used for 98% of stock replenishment decisions for online grocers, Viz.ai’s stroke detection software helping one patient every 47 seconds in the US, reinforcement learning used for Covid testing at the Greek border (another story we covered here), computer vision aiding disaster relief, transformer models accurately forecasting electricity demand, and so much more!

There is a lot in here that we can’t fully do justice to, so check out the slides or the Twitter thread from one of the authors.

Kaggle | 2021 Data Science and Machine Learning Survey

Kaggle recently shared results from their annual survey of data scientists and machine learning engineers. The survey focuses on trends in the data science landscape in industry. It is quite insightful, and we recommend reading it in its entirety. A few key takeaways for us:

Continuing democratization of data science: Advanced degrees (Master’s or higher) are still the norm (>60% of respondents), but going into data science after a bachelor’s degree is becoming increasingly common (~35% of respondents vs only 20% in 2018). Only 12.2% of survey respondents reside in the US, while 24.4% reside in India, with the rest spread across a long tail of other countries.

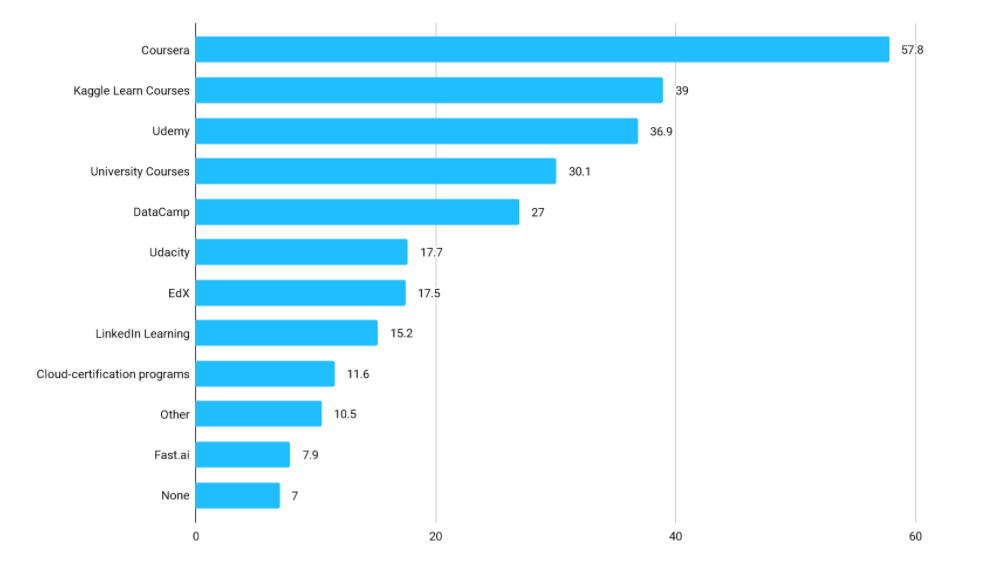

Online learning continues to be popular: Given the rapidly progressing landscape, a large number of data scientists use one or more online learning resources to stay up to date. Coursera (57.8%) and Kaggle (39%) remain the most popular online learning destinations.

Algorithms and model architectures: The use of large, complex models such as transformers grew strongly year over year, but linear models (linear regression, logistic regression) and decision trees remain among the most widely used and deployed ML models.

Machine learning frameworks: It’s all Python. Scikit-learn is still the most widely used framework for building and deploying ML models (>80% of respondents), and PyTorch continues to grow in popularity (>33% of respondents).

ML experimentation tools: Repeatable, well-documented experiment tracking has received a lot of attention in the ML community, but according to the survey, >57% of respondents do not use any ML experimentation tools. This suggests we are still in the early innings of the ML tooling ecosystem’s maturity.
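For teams in that >57%, the lowest-effort form of experiment tracking is an append-only log of each run’s parameters and metrics. Here is a minimal sketch; the JSON-lines file format and the `log_run`/`best_run` helpers are hypothetical and not the API of any particular tool.

```python
import json
import time
import uuid
from pathlib import Path


def log_run(path: Path, params: dict, metrics: dict) -> str:
    """Append one experiment run (params + metrics) as a JSON line; return its id."""
    run_id = uuid.uuid4().hex
    record = {
        "run_id": run_id,
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
    }
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return run_id


def best_run(path: Path, metric: str) -> dict:
    """Return the logged run with the highest value of `metric`."""
    with path.open(encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda r: r["metrics"][metric])
```

Dedicated tools add UIs, artifact storage, and collaboration on top, but even a plain log like this makes it much easier to compare runs after the fact.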

AutoML usage growing steadily: AutoML is becoming an increasingly common part of the ML development lifecycle. Google Cloud AutoML is the most widely used AutoML framework, used by 23.4% of respondents.

Reddit Engineering Blog | Evolving Reddit’s ML Model Deployment and Serving Architecture

Problem

Machine learning powers many product experiences on Reddit, from personalizing users’ content feeds to optimizing push notifications, content moderation, and more. ML systems at Reddit serve thousands of inference requests every second. To keep up with usage growth and improve scalability, Reddit’s engineering team redesigned its production ML system, and this article shares the goals and details of that effort.

Legacy ML Stack

Reddit’s legacy ML stack was built on baseplate.py (Reddit’s Python web services framework): machine learning models were deployed as model classes inside an application service called Minsky. When launching a new model, a machine learning engineer would typically have to modify the application code to download the new model at application start, load it into memory, and implement the feature transformation and inference logic for serving inference traffic.

As you might expect, this process has several limitations, which the article highlights. One that especially stands out is the tight coupling across models: if a single model encounters an exception, the entire service instance crashes. Moreover, coupling model inference to the application layer limits the ability to monitor how models behave online.

Gazette Inference Service

The team at Reddit addressed several limitations of the previous architecture with the Gazette Inference Service, a dedicated service for online model inference with a single endpoint: Predict. A request simply specifies the model, the version, and how to fetch the features that go into the model. The service is dockerized and deployed with Kubernetes. It currently supports TensorFlow models, and the team is working on expanding support to other frameworks.
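To make the single-endpoint design concrete, here is a toy, in-process sketch of a Predict call that names a model, a version, and the features to fetch. The field names (`model_name`, `model_version`, `entity_id`, `feature_names`), the feature-fetching callback, and the registry are our assumptions for illustration; they are not Gazette’s actual request schema or implementation, which runs as a separate dockerized service.

```python
from dataclasses import dataclass
from typing import Callable, Mapping, Sequence

# Hypothetical types: a model maps features to a score; a fetcher looks up
# named features for an entity (e.g. a user or post) from some feature source.
Model = Callable[[Mapping[str, float]], float]
FeatureFetcher = Callable[[str, Sequence[str]], dict[str, float]]


@dataclass(frozen=True)
class PredictRequest:
    model_name: str
    model_version: str
    entity_id: str
    feature_names: tuple[str, ...]


class InferenceService:
    """Toy single-endpoint inference service keyed by (model name, version)."""

    def __init__(self, fetch_features: FeatureFetcher) -> None:
        self._fetch_features = fetch_features
        self._models: dict[tuple[str, str], Model] = {}

    def register(self, name: str, version: str, model: Model) -> None:
        self._models[(name, version)] = model

    def predict(self, request: PredictRequest) -> float:
        key = (request.model_name, request.model_version)
        model = self._models.get(key)
        if model is None:
            raise KeyError(f"no model registered for {key}")
        features = self._fetch_features(request.entity_id, request.feature_names)
        return model(features)
```

Because callers only describe which model, version, and features they want, a new model version can be rolled out by registering it with the service rather than by editing application code, which is the kind of decoupling the article is after.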

The article highlights how Gazette overcomes the limitations of the previous setup, especially around scalability and isolation between models. In general, this progression from models embedded in the application layer to a dedicated inference service sounds quite familiar. We look forward to future learnings and improvements shared by the team as they build out Gazette.

Paper | Biological data annotation via a human-augmenting AI-based labeling system

This is an interesting paper that showcases some of the challenges in acquiring high-quality annotations in domains where large datasets can be generated easily, but the right tools for annotating don’t currently exist.

Background

Supervised learning, in which computational models are trained using data points (e.g., a histopathology image or a raw microscopy image) and data annotations (e.g., “cancerous” vs “benign”, or a stained microscopy image), has been central to the success of computer vision (CV) in biology. Biologists have the distinct advantage of being able to generate massive amounts of data: a single microscopy image can yield a gigabyte of visual data for algorithms to learn from. A disadvantage, however, is the difficulty and cost of obtaining complete annotations for these datasets.

Practitioners use computational techniques to augment data (rotations, distortions, changes in color), but these are often a poor substitute for human-quality annotations. This motivated the authors to present a human-augmenting AI-based labeling system, or HALS.

What is it?

HALS provides a data annotation interface with three deep learning models (a segmentation model, a classifier model, and an active learner) that work in tandem to:

learn the labels provided by an annotator

provide recommendations to that annotator designed to increase their speed, and

determine the next best data to label to increase the overall quality of annotations while minimizing total labeling burden.

Every microscopy image (these are very large images) is first pre-processed by running it through the segmentation model. As human annotators start providing labels for small regions, the classifier starts to learn a model for the regions being labeled. Once a small number of labels has been acquired for each class, the classifier starts suggesting labels for regions the annotator hasn’t seen yet, and the annotator can accept or reject each proposed label. Simultaneously, the active learner breaks the entire image into square patches, converts them into feature vectors, and recommends new regions for the annotator to look at.
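The active learner’s first two steps (tile the image into square patches, turn each patch into a feature vector) and one simple way to pick the next region can be sketched as follows. This is not the HALS algorithm: the paper’s feature extraction and selection strategy differ, and here we swap in hand-crafted (mean, std) intensity features and a farthest-point diversity heuristic purely for illustration. The function names are hypothetical.

```python
import math
from typing import Sequence

Image = Sequence[Sequence[float]]  # 2D grayscale image, rows of pixel values
Patch = tuple[int, int]            # (row, col) of a patch's top-left corner


def patch_features(image: Image, size: int) -> dict[Patch, tuple[float, float]]:
    """Split the image into non-overlapping size x size patches and describe each
    one by its (mean, std) intensity. Incomplete patches at the edges are skipped."""
    features = {}
    rows, cols = len(image), len(image[0])
    for r in range(0, rows - size + 1, size):
        for c in range(0, cols - size + 1, size):
            pixels = [image[i][j] for i in range(r, r + size) for j in range(c, c + size)]
            mean = sum(pixels) / len(pixels)
            std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))
            features[(r, c)] = (mean, std)
    return features


def recommend_patch(features: dict[Patch, tuple[float, float]], labeled: set[Patch]) -> Patch:
    """Recommend the unlabeled patch whose features are farthest from every labeled
    patch (a diversity heuristic standing in for the paper's active learner)."""
    candidates = [p for p in features if p not in labeled]
    if not candidates:
        raise ValueError("every patch is already labeled")
    if not labeled:
        return candidates[0]
    return max(candidates, key=lambda p: min(math.dist(features[p], features[q]) for q in labeled))
```

Favoring patches unlike anything already labeled is one common way to spend annotator time on regions the models know least about; uncertainty-based selection from the classifier is another.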

If you’re interested in further details on the specific datasets, model pretraining, the active learning algorithm used, etc., we recommend reading the paper.

Results

From the paper: “Using four highly repetitive binary use-cases across two stain types, and working with expert pathologist annotators, we demonstrate a 90.6% average labeling workload reduction and a 4.34% average improvement in labeling effectiveness.”

“Workload reduction” has an interesting definition: the fraction of AI-generated labels that annotators accepted immediately. It would be interesting to explore the actual time saved (something the authors also mention in the paper).

“Labeling effectiveness” was measured by the AUC score on a validation set, and it was nice to see a modest bump in the true performance of the final ML model as well.
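Both metrics are easy to compute; the sketch below shows our reading of the definitions. The input formats (one accept/reject flag per AI suggestion, and 0/1 labels with model scores) are assumptions, and the AUC uses the standard pairwise (Mann-Whitney) formulation rather than anything specific to the paper.

```python
from typing import Sequence


def workload_reduction(accepted: Sequence[bool]) -> float:
    """Fraction of AI-suggested labels the annotator accepted immediately.
    `accepted` has one flag per suggestion (True = accepted as-is)."""
    return sum(accepted) / len(accepted) if accepted else 0.0


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC: probability that a randomly chosen positive scores higher than a
    randomly chosen negative (ties count half). O(P*N), fine for small sets."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Note that a high acceptance rate does not by itself tell you how many seconds an annotator saved, which is why the time-based analysis mentioned above would be a useful complement.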

New Resources for Machine Learning

MAD Podcast

We recommend checking out the MAD Podcast (Machine Learning, AI, and Data), co-hosted by Michael Harper and Honor Chan, which features industry experts and focuses on data quality.

Embeddinghub by Featureform

Embeddings (dense vector representations) are a fundamental building block for machine learning. Embeddinghub, built by Featureform, is an open-source database for storing and searching embeddings. It uses RocksDB to durably store embeddings and metadata, and hnswlib to build approximate nearest neighbor indices. It also supports versioning and rollbacks for embeddings, which can be very useful for production applications. You can learn more about the project on GitHub.
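To illustrate what versioning, rollbacks, and nearest-neighbor search mean for an embedding store, here is a toy in-memory sketch. It is not Embeddinghub’s API: it keeps every version as a Python dict and does exact brute-force cosine search, whereas Embeddinghub persists data in RocksDB and builds approximate HNSW indices. All names are hypothetical.

```python
import math
from typing import Sequence

Vector = tuple[float, ...]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VersionedEmbeddingStore:
    """Toy in-memory store where every write produces a new immutable snapshot."""

    def __init__(self) -> None:
        self._versions: list[dict[str, Vector]] = [{}]  # version 0 is empty

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def upsert(self, items: dict[str, Sequence[float]]) -> int:
        """Insert or overwrite embeddings, creating and returning a new version."""
        snapshot = dict(self._versions[-1])
        snapshot.update({key: tuple(vec) for key, vec in items.items()})
        self._versions.append(snapshot)
        return self.version

    def rollback(self, version: int) -> int:
        """Restore an earlier version by appending a copy of it as the newest one."""
        self._versions.append(dict(self._versions[version]))
        return self.version

    def nearest(self, query: Sequence[float], k: int = 1) -> list[str]:
        """Exact top-k keys by cosine similarity against the current version."""
        current = self._versions[-1]
        ranked = sorted(current, key=lambda key: cosine(query, current[key]), reverse=True)
        return ranked[:k]
```

Exact search costs O(n) similarity computations per query, which is why production systems build approximate indices such as HNSW instead.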

Twitter | Inferentia chips

Sudeep Pillai has a great thread testing AWS Inferentia chips, which are custom-designed for ML inference. He finds that many ResNet- and Transformer-style models perform well, with some returning inference latencies under 10 ms. We look forward to reading a more detailed post on this in the future!
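Latency numbers like these are usually reported as percentiles measured after a warm-up period. The harness below is a generic sketch of that kind of measurement, not the methodology from the thread; it times any Python callable and does not use the AWS Neuron SDK. The warm-up and iteration counts are arbitrary assumptions.

```python
import math
import time
from typing import Callable


def latency_percentiles(fn: Callable[[], object], warmup: int = 10, iters: int = 200) -> dict[str, float]:
    """Call `fn` repeatedly and return p50/p90/p99 latency in milliseconds.

    Warm-up calls are discarded because the first calls often pay one-off costs
    (lazy initialization, caches filling up) that would skew the tail.
    """
    if iters < 1:
        raise ValueError("need at least one timed iteration")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    ordered = sorted(samples)

    def percentile(q: float) -> float:
        # Nearest-rank method: smallest sample with at least q% of samples at or below it.
        rank = max(1, math.ceil(q * len(ordered) / 100))
        return ordered[rank - 1]

    return {"p50": percentile(50), "p90": percentile(90), "p99": percentile(99)}
```

In practice `fn` would wrap a single inference call on a fixed input; reporting p99 alongside p50 matters because tail latency is usually what an online service’s budget is set against.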

Thanks

Thanks for making it to the end of the newsletter! This has been curated by Nihit Desai and Rishabh Bhargava. If you have suggestions for what we should be covering in this newsletter, tweet us @mlopsroundup or email us at mlmonitoringnews@gmail.com.

If you like what we are doing, please tell your friends and colleagues to spread the word. ❤️